Lecture from: 19.09.2024 | Video: Videos ETHZ | Rui Zhangs Notes | Official Script

Algorithms are essential in computer science, especially when dealing with large data sets or solving complex problems. The goal of an algorithm is not just to solve a problem but to do so efficiently—minimizing the number of operations, execution time, and memory usage.

What is an Algorithm?

An algorithm is a step-by-step procedure for solving a problem. It breaks down a complex problem into smaller, simpler tasks that can be solved individually.

Computers can only perform basic operations—like addition or comparison—at incredible speed. When faced with a problem, we often consider multiple solutions and evaluate different algorithms based on their performance metrics: the number of operations, execution time, memory usage, etc.

Multiplication Algorithm

Let’s take an example we all know: the algorithm for multiplying two numbers using the traditional school method.

School Multiplication Algorithm

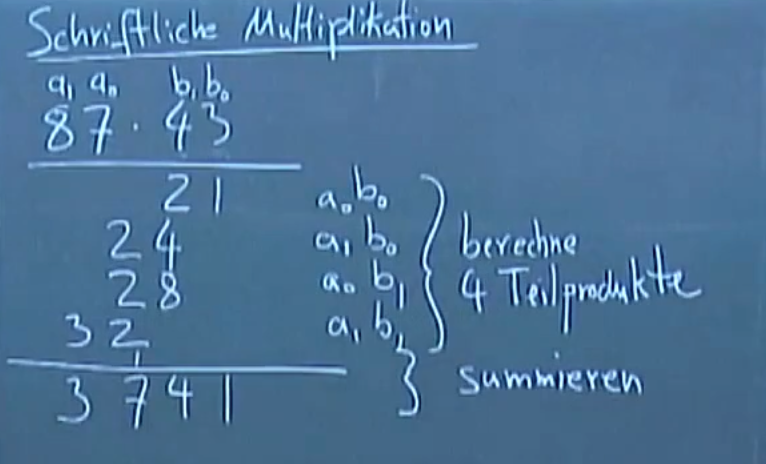

Example: Multiplying Two-Digit Numbers

Take two numbers, say 23 and 47. Here’s the basic process we learned in school:

- Multiply the digits in the units place ().

- Multiply digits from the tens and units places, including zero padding ( and ).

- Multiply the digits in the tens place ().

- Sum up the results to get the final product.

This process essentially involves computing four partial products and then summing them up. Adding numbers is typically easier than multiplying them.

Example of 87 x 43:

Correctness of the School Method

How do we know that this algorithm is correct? Let’s break down the math behind it. Consider the two-digit numbers:

Expanding this using the distributive property:

This matches exactly with the operations we performed in the school method: the multiplication of the individual digits, followed by adding the correct powers of 10.

For n-digit numbers, the process is similar. We multiply each pair of digits and sum the results, adjusting for powers of 10 as needed.

Number of Operations in the School Method

If we multiply two n-digit numbers using this method, we perform single-digit multiplications and a few additions. The total number of operations scales with —this means that multiplying two n-digit numbers takes approximately basic operations.

For example, multiplying two 1000-digit numbers would require about a million operations. While this approach is correct, it’s not the most efficient for large numbers.

Can We Do Better? (Geht es besser?)

Clearly, becomes inefficient for large numbers, so mathematicians have explored algorithms that improve the speed of multiplication. One well-known improvement is Karatsuba’s algorithm, which reduces the complexity of multiplication to , making it much faster for large numbers.

Karatsuba’s Algorithm

Karatsuba’s Algorithm is a more efficient method for multiplying large numbers than the traditional “school method.” It reduces the number of multiplications needed, improving the algorithm’s time complexity from to .

The key insight in Karatsuba’s Algorithm is to break down the multiplication of two n-digit numbers into smaller sub-problems. Instead of directly multiplying the numbers digit by digit, the algorithm divides each number into two parts, performs fewer multiplications, and then combines the results.

Recursive Algorithm Concept

At its core, Karatsuba’s Algorithm utilizes a recursive approach. Recursive algorithms solve a problem by breaking it down into smaller instances of the same problem. In this case, multiplying two n-digit numbers is transformed into multiplying two smaller numbers (each with approximately half the number of digits).

This concept can be summarized as follows:

-

Base Case: For small values of n (typically when n = 1), the algorithm performs direct multiplication, as it’s straightforward and efficient for single-digit numbers.

-

Recursive Step: For larger n, the algorithm splits each number into two halves. The multiplication is then expressed in terms of products of these halves, significantly reducing the number of multiplication operations required.

This recursive structure allows the algorithm to handle very large numbers efficiently. Each level of recursion handles a problem of smaller size until the base case is reached. As a result, this approach not only simplifies the calculations but also leverages the power of recursion to improve overall efficiency.

Algorithm Breakdown

Let’s multiply two n-digit numbers, and . We can split and into two halves:

The product can then be expressed as:

Expanding this gives:

Karatsuba’s insight is to compute the middle term, , more efficiently by using the following identity:

Thus, instead of performing four multiplications (, , , and ), Karatsuba’s method reduces this to just three multiplications:

Then, the final result can be computed by combining these three products.

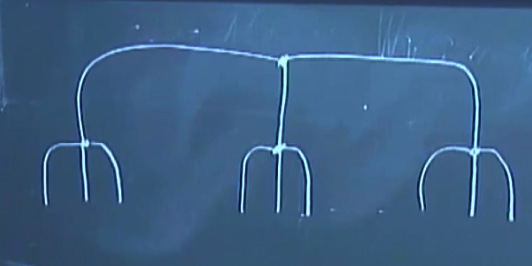

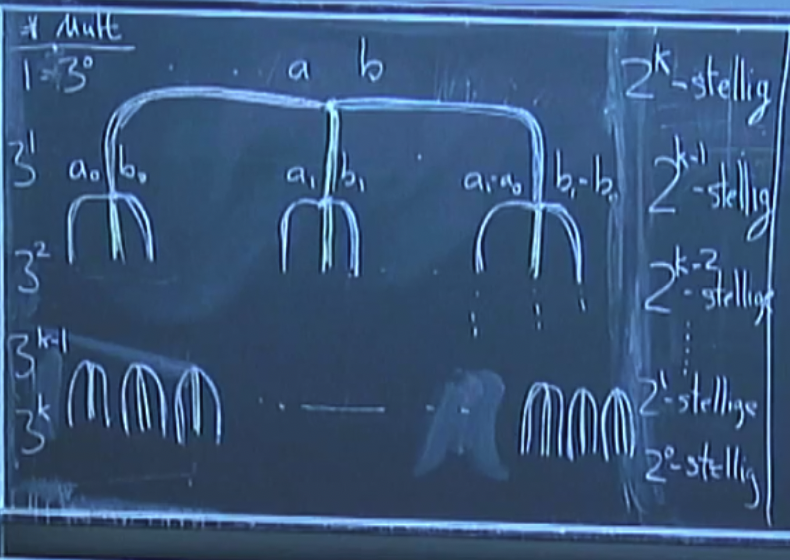

The recursiveness of this algorithm can be expressed as a tree:

Efficiency

In order to calculate the number of operations for n-digit numbers let us assume that the n-digit numbers have digits (and otherwise simply prepend 0s).

Using the recursive approach that divides multiplication into three smaller multiplications, we can analyze the number of single-digit multiplications required for multiplying two numbers of digits.

Using the recursive approach which divides a multiplication into three smaller multiplications. We break the multiplication of two -digit numbers into three recursive multiplications of size :

- Compute

- Compute

- Compute

We can see that the multiplication of two digit number needs single-digit multiplications. Each of these multiplications is performed on numbers that have half the number of digits, leading to a recurrence relation for the time complexity:

Here, the term accounts for the linear time needed to perform the additions and subtractions required to calculate and to combine the results.

To determine the time complexity from the recurrence, we can apply the Master Theorem. We compare with :

- (the number of subproblems),

- (the factor by which the size of the problem is reduced),

- .

Calculating gives approximately .

According to the Master Theorem, since grows slower than , we fall into the first case:

The school method needs . For the Karatsuba’s algorithm requires 10x less operations, for 100x less.

Karatsuba’s algorithm reduces the number of multiplications from to . This makes it significantly faster for large numbers than the “school” algorithm.

Summary of Efficiency

For two numbers with digits:

- Karatsuba’s Algorithm: Requires single-digit multiplications, leading to a complexity of .

- School Method: Requires single-digit multiplications, leading to a complexity of .

Thus, when comparing the two algorithms:

- For , Karatsuba’s algorithm requires approximately 10 times fewer operations than the school method.

- For , it requires around 100 times fewer operations.

Overall, Karatsuba’s algorithm is significantly faster for large numbers, improving the multiplication efficiency by reducing the number of operations required.

Wall Following Algorithm (Pasture Break)

For this problem, our aim is to find a location along a 1D line by moving ourselves to it, with as few steps as possible.

Here a reason why you’d need this:

In a distant land, you find yourself trapped in an infinite, vast, circular arena, drawn here by an ancient prophecy. Legends say this place tests those who dare to enter, but you had no choice. Towering stone walls surround you, cold and endless. Though you’re not blindfolded, the arena is shrouded in darkness and thick fog, limiting your vision to just a few feet ahead. The air is damp, and every sound feels muted by the heavy mist.

The prophecy speaks of a single hidden passage along the arena’s perimeter, the only escape. You place your hands on the wall, feeling its rough, cold surface as you move carefully, searching for any change that might reveal the way out.

Wandering without a plan would be foolish. The prophecy offers a clue: The way is simple, though the path is unclear. Let your hands guide you.

(This is an Alternate Intro To “Pasture Break” or “the Wall Following Problem in 1D”)

In this problem our aim is to find the exit in as few steps as possible.

Algorithm 0 (Distance Given)

For this algorithm let us assume that someone has engraved into the wall infront of you that the exit is “k steps away”, however you don’t know which direction to go in. The most straightforward way to find the exit is to go k steps in the one direction, and if the exit isn’t there, then k steps back and k steps in the opposite direction.

- Best Case: k steps

- Worst Case: k steps + k steps back + k steps in the right direction = 3k steps

Algorithm 1 (Naive)

Now realistically, you won’t have any engraving telling you how far, nor in which direction that the exit is. You have to find this out on your own. The most simple algorithm would be:

- 1 left, back to start

- 2 right, back to start

- 3 left, back to start

- …

- k-1 right, back to start (we just missed the exit)

- k left, back to start

- k+1 right, but we stop after k, since we found the exit.

Now one of the ways to evaluate algorithms is to look at their worst case. So let us count how many steps we are doing.

We’ll compare this with the other algorithms later. Before that, let us try to think of a more clever algorithm.

Algorithm 2

The algorithm is:

- steps left, back to start

- steps right, back to start

- steps left, back to start

- …

- steps right, back to start

- steps left, back to start

- k (where )

Comparison of Algorithms

In order to compare these algorithms we can’t have a mix of variables describing the steps required (worst case). In Algorithm 2 we currently have “k” and “i” as variables. Let us create an upper bounded inequality with only the variable k.

Upper Bound Algorithm 2

We can use the fact that:

Comparing

We can see that algorithm 1 has a worst case of and algorithm 2 has a worst case of . Algorithm 2 thereby beats algorithm 1. There isn’t a algorithm which is faster (according to the prof).

Mathematical Induction

Mathematical induction is a powerful proof technique used to establish that a given statement is true for all natural numbers. The idea is similar to setting up a chain of falling dominoes: if you can show that the first domino falls (the base case) and that any domino will knock over the next one (the induction step), then you’ve proven that all dominoes will fall. Induction is a powerful tool in Algorithms and Data structures.

Steps of Induction

- Base Step: Prove that the statement is true for the initial value (usually ).

- Inductive Hypothesis: Assume that the statement is true for some arbitrary value .

- Inductive Step: Using the inductive hypothesis, prove that the statement is true for .

Example: Sum of the First Natural Numbers

Let’s prove the formula for the sum of the first natural numbers:

Step 1: Base Case

For , the left-hand side is simply . Plugging into the formula:

So, the base case holds.

Step 2: Inductive Hypothesis

Assume that the formula is true for some :

Step 3: Inductive Step

Now we need to show that the formula holds for . Consider:

By the inductive hypothesis, the sum of the first terms is:

Factor out :

This matches the formula for :

Thus, by mathematical induction, the formula is true for all natural numbers .

Example: Sum of Powers of 2

Let’s prove the formula for the sum of the first powers of 2:

Step 1: Base Case

For , the left-hand side is:

Plugging into the formula:

So, the base case holds.

Step 2: Inductive Hypothesis

Assume that the formula is true for some :

Step 3: Inductive Step

Now we need to show that the formula holds for . Consider:

By the inductive hypothesis, the sum of the first terms is:

Combining these, we have:

This matches the formula for :

Thus, by mathematical induction, the formula is true for all natural numbers :

Continue here: 02 Star Search