Lecture from 30.10.2024 | Video: Videos ETHZ

Vector Spaces, Bases and Dimension

Our goal is to thoroughly understand how vector spaces are generated. A central concept in this pursuit is the basis: a linearly independent set of vectors that generates every other vector in the space through linear combinations. Equivalently, it is a minimal generating set.

Linear Combinations

Before we delve into bases, we must understand their fundamental components: linear combinations.

Linear Combination (Definition 4.13)

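Let $V$ be a vector space and $G \subseteq V$ be a subset of vectors (potentially infinite). A linear combination of $G$ is a sum of the form:

$$\sum_{j=1}^{n} \lambda_j v_j$$

where $F = \{v_1, \dots, v_n\} \subseteq G$ is a finite subset of $G$, and $\lambda_j \in \mathbb{R}$ for all $j \in \{1, \dots, n\}$. The restriction to a finite subset is crucial, as we’ll see later.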

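Example: Consider the set $G = \{(1,0), (0,1)\} \subseteq \mathbb{R}^2$. Valid linear combinations of $G$ include:

- $2 \cdot (1,0) + 3 \cdot (0,1) = (2,3)$

- $-1 \cdot (1,0) = (-1,0)$ (using only the finite subset $\{(1,0)\}$)

- $0 \cdot (1,0) + 0 \cdot (0,1) = (0,0)$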

Closure under Linear Combinations (Lemma 4.14)

Let $V$ be a vector space and $G \subseteq V$. Every linear combination of $G$ remains within the vector space $V$.

Proof: We demonstrate this closure property using the axioms of a vector space and an inductive argument.

1. Setup: Consider a finite subset $F = \{v_1, \dots, v_n\}$ of $G$ and a general linear combination:

   $$\sum_{j=1}^{n} \lambda_j v_j, \qquad \lambda_j \in \mathbb{R}.$$

2. Scalar Multiplication: By the definition of a vector space, scalar multiplication is a closed operation within $V$. Thus, for each $j$, the product $\lambda_j v_j$ is also a vector in $V$.

3. Closure under Addition: Vector spaces are closed under addition. Consequently, the sum of any two vectors in $V$, such as $\lambda_1 v_1$ and $\lambda_2 v_2$, will also reside in $V$.

4. Induction: We use induction on $n$ to generalize this to any finite sum.

   - Base Case (n=1): A linear combination of a single vector, $\lambda_1 v_1$, is in $V$ (from step 2).

   - Inductive Step: Assume that the sum of $n-1$ vectors, $\sum_{j=1}^{n-1} \lambda_j v_j$, is in $V$. We need to show that adding the $n$-th vector, $\lambda_n v_n$, also results in a vector in $V$. By closure under addition (step 3), since $\sum_{j=1}^{n-1} \lambda_j v_j \in V$ and $\lambda_n v_n \in V$, their sum is also in $V$.

5. Conclusion: By induction, any finite linear combination of vectors from $F$ (and therefore, from any finite subset of $G$) remains within $V$.

Why Infinite Linear Combinations Can Fail:

The previous lemma specifically addresses finite linear combinations. Infinite linear combinations may produce results that lie outside the original vector space.

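Example: Consider the vector space $\mathbb{R}[x]$ of polynomials with real coefficients in one variable $x$. While each individual monomial $x^i$ ($i \in \mathbb{N}_0$) belongs to $\mathbb{R}[x]$, the infinite sum:

$$\sum_{i=0}^{\infty} x^i = \frac{1}{1-x}$$

converges to a rational function (for $|x| < 1$), which is not a polynomial and therefore not in $\mathbb{R}[x]$.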

This emphasizes the importance of restricting our discussion to finite linear combinations when defining spans and bases.
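As a small numerical illustration of the geometric-series example, the following sketch evaluates the partial sums $1 + x + \dots + x^n$ (each of which is a polynomial, i.e. a finite linear combination of monomials) at the sample point $x = 1/2$ and compares them with the value of $1/(1-x)$. The function name `partial_sum` and the choice of sample point are assumptions made for illustration only.

```python
from fractions import Fraction

def partial_sum(x, n):
    """Evaluate the polynomial 1 + x + x^2 + ... + x^n at the point x."""
    return sum(x**i for i in range(n + 1))

x = Fraction(1, 2)       # sample point with |x| < 1
limit = 1 / (1 - x)      # value of the rational function 1/(1-x) at x

# Every finite partial sum is a polynomial, and it never reaches the limit
# exactly: the gap shrinks but stays positive.
for n in (1, 5, 10, 20):
    gap = limit - partial_sum(x, n)
    print(n, float(gap))
```

The gap gets arbitrarily small but is never zero for any finite $n$, which matches the statement that only the infinite sum equals $1/(1-x)$.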

Span and Linear Independence: Key Concepts

Definition 4.15 (Span and Linear Independence): Let $V$ be a vector space and $G \subseteq V$.

- Span($G$): The set of all possible finite linear combinations of vectors in $G$. Formally:

  $$\mathrm{Span}(G) = \left\{ \sum_{j=1}^{n} \lambda_j v_j \;:\; n \in \mathbb{N}_0,\ v_1, \dots, v_n \in G,\ \lambda_1, \dots, \lambda_n \in \mathbb{R} \right\}$$

- Linear Independence: $G$ is linearly independent if no vector $v \in G$ can be expressed as a linear combination of the other vectors in $G$, that is, of $G \setminus \{v\}$. Equivalently, $G$ is linearly independent if the only way to obtain the zero vector as a linear combination of vectors in $G$ is by setting all coefficients to zero.

- Linear Dependence: $G$ is linearly dependent if at least one vector $v \in G$ can be expressed as a linear combination of the other vectors in $G$.

See: 03 Linear Dependence and Independence for more info.
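For finitely many vectors in $\mathbb{R}^m$, linear independence can be checked numerically by comparing the rank of the matrix that has the vectors as columns with the number of vectors. The sketch below assumes NumPy is available; the helper name `is_linearly_independent` is made up for this illustration, and the rank is computed in floating point, so the result is only reliable for well-conditioned inputs.

```python
import numpy as np

def is_linearly_independent(vectors):
    """Return True if the given vectors in R^m are linearly independent.

    The vectors are stacked as columns of a matrix; they are independent
    exactly when the rank equals the number of vectors.
    """
    A = np.column_stack([np.asarray(v, dtype=float) for v in vectors])
    return bool(np.linalg.matrix_rank(A) == len(vectors))

print(is_linearly_independent([(1, 0, 0), (0, 1, 0)]))  # independent pair
print(is_linearly_independent([(1, 2), (2, 4)]))        # second = 2 * first
```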

Basis: A Minimal Generating Set

Basis (Definition 4.16)

A subset $B \subseteq V$ is a basis for the vector space $V$ if:

- $B$ is linearly independent.

- Span($B$) = $V$ (every vector in $V$ is a linear combination of vectors in $B$).

A basis provides a minimal and efficient way to represent every vector in the vector space.

Bases in ℝᵐ (Observation 4.18):

Any set of $m$ linearly independent vectors in $\mathbb{R}^m$ forms a basis for $\mathbb{R}^m$. This is a direct consequence of the Inverse Theorem (see Inverse Theorem (Theorem 3.11)), which states that a square matrix with linearly independent columns is invertible.

If we form an $m \times m$ matrix $A$ whose columns are our $m$ linearly independent vectors, then for any vector $b \in \mathbb{R}^m$, the equation $Ax = b$ has a unique solution $x = A^{-1}b$. This solution provides the coefficients for expressing $b$ as a linear combination of the columns of $A$ (i.e., our original vectors). Thus, these vectors span $\mathbb{R}^m$ and, being linearly independent, form a basis.
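The argument can be tried out numerically. The following sketch, assuming NumPy, takes three linearly independent vectors in $\mathbb{R}^3$ (chosen arbitrarily for illustration), builds the matrix with these vectors as columns, and solves $Ax = b$ to obtain the coefficients of $b$ with respect to this basis.

```python
import numpy as np

# Three linearly independent vectors in R^3 (an arbitrary example choice).
v1 = np.array([1.0, 0.0, 0.0])
v2 = np.array([1.0, 1.0, 0.0])
v3 = np.array([1.0, 1.0, 1.0])

A = np.column_stack([v1, v2, v3])   # columns are the basis vectors
b = np.array([2.0, 3.0, 5.0])       # vector to express in this basis

x = np.linalg.solve(A, b)           # unique solution since A is invertible
print(x)

# The coefficients reproduce b as a linear combination of v1, v2, v3.
reconstructed = x[0] * v1 + x[1] * v2 + x[2] * v3
print(np.allclose(reconstructed, b))
```

Here the coefficients work out to $x = (-1, -2, 5)$, since $-1 \cdot v_1 - 2 \cdot v_2 + 5 \cdot v_3 = (2, 3, 5)$.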

The Steinitz Exchange Lemma: A Foundation for Dimension (Lemma 4.19)

The Steinitz Exchange Lemma is a crucial result that connects linearly independent sets and spanning sets in a vector space. It’s essential for understanding the concept of dimension.

Note for readers: Here is a video explaining the content in a much better way IMO.

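Let $V$ be a vector space, $F \subseteq V$ a finite, linearly independent set, and $G \subseteq V$ a finite spanning set (i.e., Span($G$) = $V$). Then:

- $|F| \le |G|$.

- There exists a subset $E \subseteq G$ with $|E| = |G| - |F|$ such that Span($F \cup E$) = $V$. (Note: $E$ can contain elements of $F$).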

Proof (by Induction on |F|):

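- Base Case ($|F| = 0$):

  - $|F| = 0 \le |G|$ is trivially true.

  - Choose $E = G$. Then $|E| = |G| - 0$ and Span($F \cup E$) = Span($\emptyset \cup G$) = Span($G$) = $V$.

- Inductive Hypothesis: Assume the lemma holds for any linearly independent set $F'$ with $|F'| = k - 1$, where $k \ge 1$.

- Inductive Step ($|F| = k$):

  - Choose and Remove: Select any $u \in F$ and let $F' = F \setminus \{u\}$. $F'$ remains linearly independent.

  - Apply Hypothesis: Since $|F'| = k - 1$, the inductive hypothesis applies:

    - $|F'| \le |G|$.

    - There exists $E' \subseteq G$ with $|E'| = |G| - (k - 1)$ such that Span($F' \cup E'$) = $V$.

  - Express u: Since $F' \cup E'$ spans $V$, we can write $u$ as a linear combination:

    $$u = \sum_{v \in F'} \lambda_v v + \sum_{w \in E'} \mu_w w.$$

    Since $F$ is linearly independent, $u$ cannot be expressed solely using vectors from $F'$ (otherwise, it would be a linear combination of the other vectors in $F$). Therefore, $\mu_w \neq 0$ for at least one $w \in E'$. In particular, $E'$ is non-empty, so $|G| - (k - 1) \ge 1$, which gives $|F| = k \le |G|$.

  - Exchange: Choose such a $w \in E'$ with $\mu_w \neq 0$. Let $E = E' \setminus \{w\}$. Now, $|E| = |E'| - 1 = |G| - k = |G| - |F|$.

  - Show Spanning: We need to prove Span($F \cup E$) = $V$.

    - From the equation for $u$ above, since $\mu_w \neq 0$, we can rearrange to express $w$ as a linear combination of $u$ and vectors in $F' \cup E$. This means $w \in$ Span($F \cup E$).

    - Any vector $v \in V$ can be expressed using vectors in $F' \cup E'$. We can substitute the expression for $w$ in terms of $F \cup E$ into the representation for $v$. This shows that $v$ can be expressed using only vectors from $F \cup E$, and therefore Span($F \cup E$) = $V$.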

This completes the induction, proving the Steinitz Exchange Lemma.
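The inductive proof is constructive, so it can be turned into a small procedure for vectors in $\mathbb{R}^m$. The sketch below is one possible numerical rendering, assuming NumPy: for each $u \in F$ it expresses $u$ in the current spanning set via least squares and removes a vector of $G$ whose coefficient is non-zero (here the one with the largest absolute coefficient, a choice made for numerical robustness and not part of the lemma). The function name `steinitz_exchange` and the sample vectors are assumptions for illustration, and the preconditions of the lemma are not checked.

```python
import numpy as np

def steinitz_exchange(F, G):
    """Return a sub-list E of G with |E| = |G| - |F| such that F + E spans.

    Assumes F is linearly independent and G spans R^m (not verified here).
    """
    E = [np.asarray(w, dtype=float) for w in G]
    placed = []  # vectors of F that were already exchanged in
    for u in F:
        u = np.asarray(u, dtype=float)
        # Express u in the current spanning set placed + E.
        M = np.column_stack(placed + E)
        coeffs, *_ = np.linalg.lstsq(M, u, rcond=None)
        mu = coeffs[len(placed):]           # coefficients on the vectors of E
        k = int(np.argmax(np.abs(mu)))      # some w in E with mu_w != 0
        del E[k]                            # exchange: w leaves, u enters
        placed.append(u)
    return E

F = [(1, 1, 0), (0, 1, 1)]                        # linearly independent
G = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]  # spans R^3
E = steinitz_exchange(F, G)

combined = np.column_stack([np.asarray(v, dtype=float) for v in F] + E)
print(len(E), np.linalg.matrix_rank(combined))
```

The final rank check confirms that the $|F|$ vectors of $F$ together with the remaining $|G| - |F|$ vectors of $G$ still span $\mathbb{R}^3$.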

Uniqueness of Basis Size and Dimension

Theorem 4.20 (Uniqueness of Basis Size): Let $V$ be a finitely generated vector space. If $B$ and $B'$ are both finite bases for $V$, then $|B| = |B'|$.

Proof: Apply the Steinitz Exchange Lemma twice:

- With $F = B$ and $G = B'$, we get $|B| \le |B'|$.

- With $F = B'$ and $G = B$, we get $|B'| \le |B|$. Therefore, $|B| = |B'|$.

Definition 4.23 (Dimension): The dimension of a finitely generated vector space $V$, denoted dim($V$), is the number of vectors in any basis for $V$. (Note: dim($V$) = $|B|$ for any basis $B$ of $V$).

Existence of a Basis: Simplified Basis Criterion (Lemma 4.24)

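Let $V$ be a vector space with dim($V$) = $d$.

- If $F \subseteq V$ contains $d$ linearly independent vectors, then $F$ is a basis for $V$.

- If $G \subseteq V$ contains $d$ vectors and Span($G$) = $V$, then $G$ is a basis for $V$.

Proof:

- Let $B$ be a basis for $V$ (so $|B| = d$). Apply the Steinitz Exchange Lemma with $F$ and $G = B$. Since $|F| = |B| = d$, the exchange set $E$ has size $|B| - |F| = 0$ and is therefore empty. Thus, Span($F$) = Span($F \cup \emptyset$) = Span($F \cup E$) = $V$, so $F$ is a basis.

- By Theorem 4.22, there exists a basis $B \subseteq G$ with $|B| = d$. Since $|G| = d$ as well, we must have $B = G$, meaning $G$ itself is a basis.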

This lemma simplifies checking if a set of vectors forms a basis: If the set has the correct number of vectors (equal to the dimension), then we only need to verify either linear independence or that it spans the space.

Example: The Vector Space of Polynomials in n Variables

Let’s explore a specific example of a vector space: the set of all polynomials in n variables with a degree at most d. This will illustrate the concepts of basis and dimension in a more concrete setting.

Note for readers: In this part the professor rushed through quite quickly and it was quite confusing, I’ve no clue if this part here is correct and makes sense. If you find a mistake let me know in the comments, or write me an email

¯\_(ツ)_/¯

Definitions (Monomial, Degree, Polynomial)

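- A monomial in n variables $x_1, \dots, x_n$ is an expression of the form:

  $$x^{\alpha} = x_1^{\alpha_1} x_2^{\alpha_2} \cdots x_n^{\alpha_n},$$

  where $\alpha = (\alpha_1, \dots, \alpha_n) \in \mathbb{N}_0^n$ is a vector of non-negative integer exponents.

  Example:

  - In two variables, $x_1^2 x_2^3$ is a monomial with $\alpha = (2, 3)$.

  - In three variables, $x_1 x_3^4$ is a monomial with $\alpha = (1, 0, 4)$.

- The degree of a monomial is the sum of its exponents:

  $$\deg(x^{\alpha}) = \alpha_1 + \alpha_2 + \dots + \alpha_n.$$

  Example:

  - The degree of $x_1^2 x_2^3$ is $2 + 3 = 5$.

  - The degree of $x_1 x_3^4$ is $1 + 0 + 4 = 5$.

- A polynomial in n variables of degree at most d is a real linear combination of monomials with degrees up to d. Formally:

  $$p = \sum_{\alpha \in A_{n,d}} c_{\alpha} x^{\alpha},$$

  where $c_{\alpha} \in \mathbb{R}$ are the coefficients, and $A_{n,d}$ is the set of all possible exponent vectors such that the degree is at most d:

  $$A_{n,d} = \{ \alpha \in \mathbb{N}_0^n : \alpha_1 + \dots + \alpha_n \le d \}.$$

  Example:

  - A polynomial in two variables (x and y) of degree at most 2 could look like: $3x^2 + 2xy - y + 5$.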

Operations on Polynomials:

We define addition and scalar multiplication on polynomials as follows:

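- For $p = \sum_{\alpha \in A_{n,d}} c_{\alpha} x^{\alpha}$ and $q = \sum_{\alpha \in A_{n,d}} d_{\alpha} x^{\alpha}$ (where $A_{n,d}$ is the set of exponent vectors of degree at most d), and a scalar $\lambda \in \mathbb{R}$:

  - Scalar Multiplication: $\lambda p = \sum_{\alpha \in A_{n,d}} (\lambda c_{\alpha}) x^{\alpha}$. Example: If $p = 2x + 3y$ and $\lambda = 2$, then $\lambda p = 4x + 6y$.

  - Addition: $p + q = \sum_{\alpha \in A_{n,d}} (c_{\alpha} + d_{\alpha}) x^{\alpha}$. Example: If $p = x^2 + y$ and $q = 2x^2 - y + 1$, then $p + q = 3x^2 + 1$.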
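Both operations act coefficient by coefficient, which a short sketch can make concrete. Here a polynomial is stored as a dictionary that maps an exponent vector (a tuple) to its coefficient; this representation and the helper names `add` and `scale` are assumptions made for illustration, not something prescribed by the lecture.

```python
from collections import defaultdict

def add(p, q):
    """Add two polynomials coefficient-wise (keys are exponent tuples)."""
    result = defaultdict(int)
    for poly in (p, q):
        for alpha, c in poly.items():
            result[alpha] += c
    # Drop monomials whose coefficients cancelled to zero.
    return {alpha: c for alpha, c in result.items() if c != 0}

def scale(lam, p):
    """Multiply every coefficient of p by the scalar lam."""
    return {alpha: lam * c for alpha, c in p.items() if lam * c != 0}

# Two variables (x, y): the key (2, 0) stands for x^2, (0, 1) for y, (0, 0) for 1.
p = {(2, 0): 1, (0, 1): 1}               # x^2 + y
q = {(2, 0): 2, (0, 1): -1, (0, 0): 1}   # 2x^2 - y + 1

print(add(p, q))                          # coefficients of 3x^2 + 1
print(scale(2, {(1, 0): 2, (0, 1): 3}))   # coefficients of 4x + 6y
```

Since neither operation raises any exponent, sums and scalar multiples of polynomials of degree at most d again have degree at most d.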

Vector Space of Polynomials

The set of all polynomials in n variables of degree at most d, denoted by $\mathbb{R}[x_1, \dots, x_n]_{\le d}$, is a vector space of dimension $\binom{n+d}{d}$.

Proof:

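- Monomials as a Basis: The set of all monomials $x^{\alpha}$ of degree at most d forms a basis for $\mathbb{R}[x_1, \dots, x_n]_{\le d}$. This is because:

  - Spanning: By definition, every polynomial in $\mathbb{R}[x_1, \dots, x_n]_{\le d}$ is a linear combination of these monomials. Example:

    - In two variables (x and y) with degree at most 2, we can take the set of monomials: $\{1, x, y, x^2, xy, y^2\}$. Any polynomial with degree at most 2 can be formed by a linear combination of these monomials.

  - Linear Independence: No monomial in the set can be expressed as a linear combination of the other monomials. Distinct monomials have distinct exponent vectors, and a linear combination of distinct monomials is the zero polynomial only if all of its coefficients are zero. Example:

    - Consider the monomials $x^2$ and $xy$. The exponent vectors are $(2, 0)$ and $(1, 1)$, respectively. There is no way to combine $xy$ with other monomials (like 1, $x$, or $y$) to get $x^2$ because they have different exponent vectors.

- Dimension: Since the set of monomials is a basis, the dimension of the vector space is equal to the number of elements in this basis, that is, the number of exponent vectors $\alpha \in \mathbb{N}_0^n$ with $\alpha_1 + \dots + \alpha_n \le d$, which is $\binom{n+d}{d}$. (This is a stars-and-bars count: adding a slack exponent $\alpha_0 \ge 0$ turns the condition into $\alpha_0 + \alpha_1 + \dots + \alpha_n = d$, which has $\binom{n+d}{d}$ non-negative integer solutions.) Example:

  - Consider polynomials in two variables (x and y) with degree at most 2. Here $n = 2$ and $d = 2$. Therefore, $\binom{n+d}{d} = \binom{4}{2} = 6$, and the dimension of this space of polynomials is 6.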

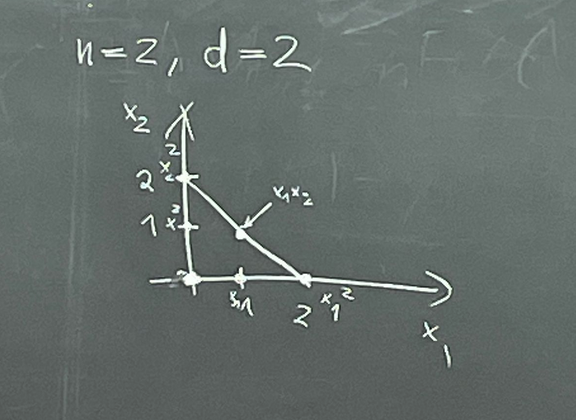

Visual example:

-

Therefore, .
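The dimension formula can be sanity-checked by brute force for small n and d. The following sketch enumerates all exponent vectors with entries between 0 and d, keeps those whose sum is at most d, and compares the count with the binomial coefficient. The helper name `exponent_vectors` is an assumption for illustration, and the enumeration is meant for small cases only.

```python
from itertools import product
from math import comb

def exponent_vectors(n, d):
    """List all exponent vectors in n variables with total degree at most d."""
    return [alpha for alpha in product(range(d + 1), repeat=n) if sum(alpha) <= d]

# Two variables, degree at most 2: the monomials 1, y, y^2, x, xy, x^2.
basis = exponent_vectors(2, 2)
print(basis)
print(len(basis), comb(2 + 2, 2))

# Compare the brute-force count with the formula for a few small cases.
for n in range(1, 5):
    for d in range(0, 5):
        assert len(exponent_vectors(n, d)) == comb(n + d, d)
```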

This example demonstrates how the concepts of basis and dimension apply to a specific vector space. Note that although the space of all polynomials (of arbitrary degree) is infinite-dimensional, once we constrain the space by limiting the maximum degree to d, the resulting subspace is finite-dimensional.

The choice of a basis—in this case, the monomials—provides a structured and manageable way to work with these polynomials, and the dimension tells us how many “degrees of freedom” we have when constructing polynomials within this space.

Continue here: 14 Fundamental Subspaces, Column Space, Row Space, Nullspace