Lecture from 15.11.2024 | Video: Videos ETHZ

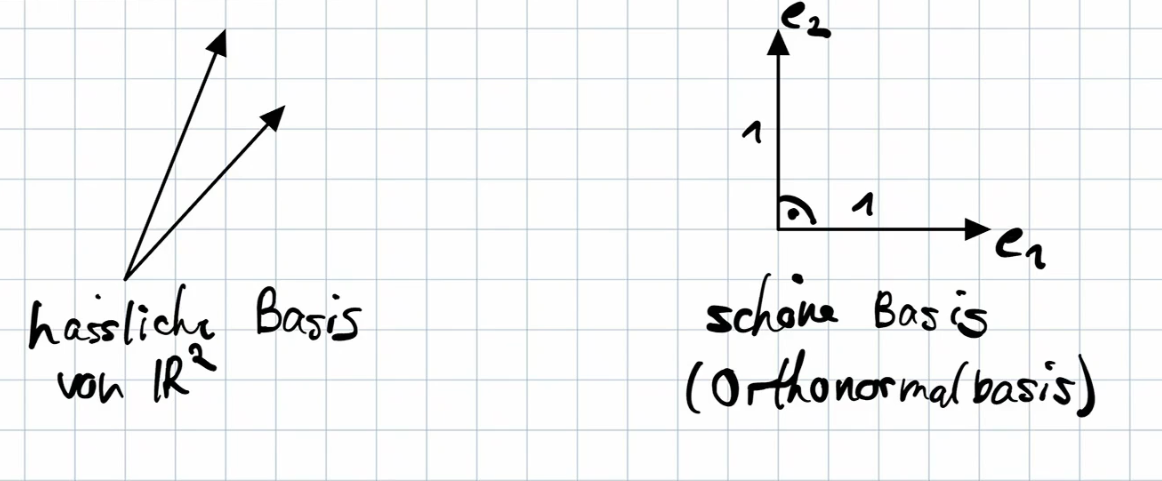

Orthonormal Bases

Orthonormal Vectors (Definition 5.4.1)

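Vectors $q_1, \dots, q_n \in \mathbb{R}^m$ are orthonormal if they are pairwise orthogonal and have unit length (norm 1). Mathematically:

$$q_i^\top q_j = \delta_{ij} = \begin{cases} 1 & \text{if } i = j, \\ 0 & \text{if } i \neq j, \end{cases}$$

where $\delta_{ij}$ is the Kronecker delta.

- Matrix Form: If $Q \in \mathbb{R}^{m \times n}$ is the matrix whose columns are $q_1, \dots, q_n$, then the vectors are orthonormal if and only if $Q^\top Q = I$, where $I$ is the $n \times n$ identity matrix. (Note that $q_i^\top q_j$ is the entry in the $i$-th row and $j$-th column of $Q^\top Q$.)

- Non-Square Matrices: $Q$ need not be square. If it is not square (so $m > n$), $QQ^\top = I$ does not hold, because $QQ^\top$ is an $m \times m$ matrix of rank $n < m$ (though $Q^\top Q = I$ does hold).

- Example: The standard basis vectors $e_1, \dots, e_n$ in $\mathbb{R}^n$ are orthonormal.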
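The matrix-form criterion and the non-square caveat can be checked on a small case. The following is a minimal sketch in plain Python; the $3 \times 2$ matrix `Q` and the helper names `transpose` and `matmul` are illustrative assumptions, not taken from the lecture.

```python
# Columns of Q are e_1 and e_2 in R^3: two orthonormal vectors, Q is 3x2.
# (Illustrative values, not from the lecture.)
Q = [[1.0, 0.0],
     [0.0, 1.0],
     [0.0, 0.0]]

def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*M)]

def matmul(A, B):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

QtQ = matmul(transpose(Q), Q)   # 2x2 Gram matrix of the columns
QQt = matmul(Q, transpose(Q))   # 3x3 matrix

print(QtQ)  # the 2x2 identity: the columns are orthonormal
print(QQt)  # not the 3x3 identity: the last diagonal entry is 0
```

Here $Q^\top Q$ collects all inner products $q_i^\top q_j$, so it equals the identity exactly when the columns are orthonormal, while $QQ^\top$ has rank 2 and cannot be the $3 \times 3$ identity.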

Orthogonal Matrices (Definition 5.4.3)

A square matrix $Q \in \mathbb{R}^{n \times n}$ is orthogonal if $Q^\top Q = I$. This implies:

- The columns of $Q$ form an orthonormal basis for $\mathbb{R}^n$.

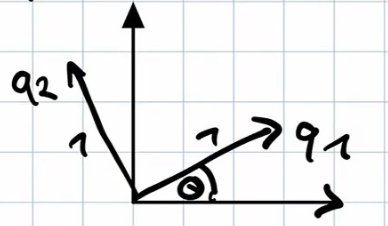

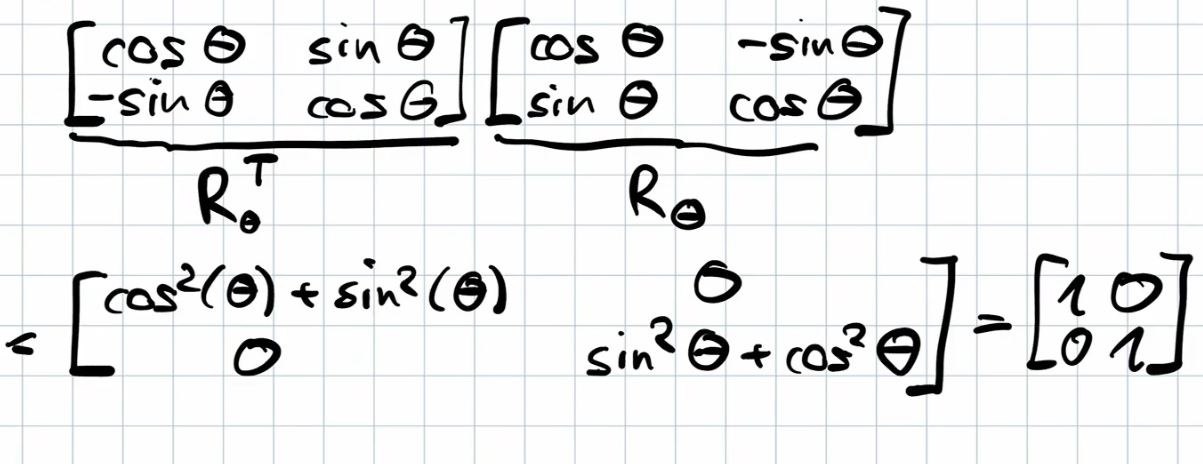

2x2 Rotation Matrix (Example 5.4.4)

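The 2x2 rotation matrix

$$R_\theta = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$$

rotates a vector counter-clockwise by the angle $\theta$ and is an orthogonal matrix (verify $R_\theta^\top R_\theta = I$).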
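The verification can be written out explicitly. Writing $R_\theta$ for the rotation matrix with columns $(\cos\theta, \sin\theta)^\top$ and $(-\sin\theta, \cos\theta)^\top$, a short worked computation (using only $\cos^2\theta + \sin^2\theta = 1$) is:

$$
R_\theta^\top R_\theta
= \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix}
  \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}
= \begin{pmatrix} \cos^2\theta + \sin^2\theta & -\cos\theta\sin\theta + \sin\theta\cos\theta \\ -\sin\theta\cos\theta + \cos\theta\sin\theta & \sin^2\theta + \cos^2\theta \end{pmatrix}
= \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}.
$$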

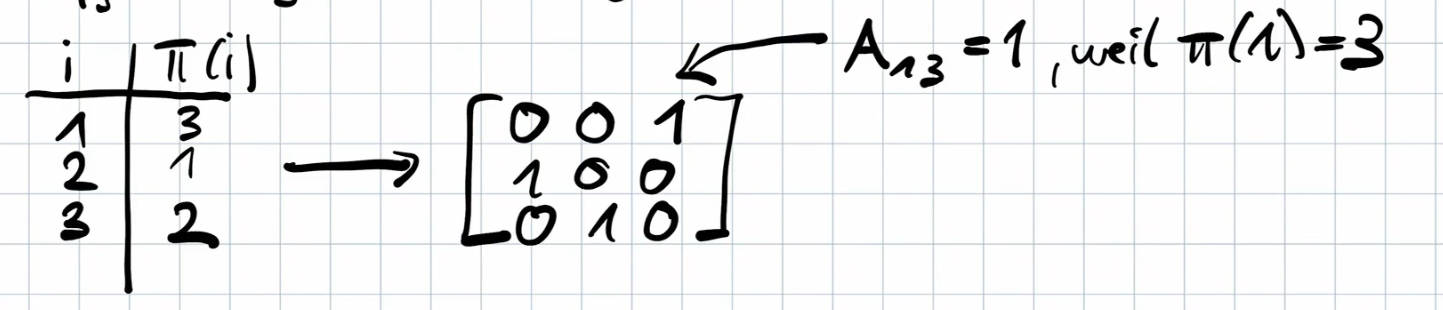

Permutation Matrices (Example 5.4.5)

A permutation matrix is a square matrix where each row and column contains exactly one entry of 1 and all other entries are 0. They represent permutations of vector components. Permutation matrices are orthogonal.

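- Construction: The permutation matrix $A \in \mathbb{R}^{n \times n}$ corresponding to a permutation $\pi$, that is, a bijective map $\pi: \{1, \dots, n\} \to \{1, \dots, n\}$, has entries $a_{ij} = 1$ if $j = \pi(i)$ and $a_{ij} = 0$ otherwise.

- Property: For every permutation matrix $A$, there exists a positive integer $k$ such that $A^k = I$.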
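Both the construction and the property $A^k = I$ can be tried out on a small permutation. The sketch below assumes the index convention "entry $(i, j)$ is 1 when $j = \pi(i)$" with 0-based indices; the permutation `pi` and the helper names are hypothetical choices for illustration.

```python
def permutation_matrix(pi):
    """Build A with A[i][j] = 1 if j == pi[i], else 0 (0-based indices)."""
    n = len(pi)
    return [[1 if j == pi[i] else 0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

def order(A):
    """Smallest k >= 1 with A^k = I, found by repeated multiplication."""
    n = len(A)
    identity = [[int(i == j) for j in range(n)] for i in range(n)]
    power, k = A, 1
    while power != identity:
        power, k = matmul(power, A), k + 1
    return k

pi = [1, 2, 0, 4, 3]            # hypothetical: one 3-cycle and one 2-cycle
A = permutation_matrix(pi)
At = [list(row) for row in zip(*A)]

print(matmul(At, A) == matmul(A, A) or True)  # placeholder-free check below
print(order(A))                                # 6, the lcm of the cycle lengths 3 and 2
```

The loop in `order` terminates because a permutation of finitely many elements returns to the identity after finitely many repetitions, which is exactly the stated property.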

Properties of Orthogonal Matrices (Proposition 5.4.6)

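Orthogonal matrices preserve norms and inner products (scalar products). That is, for an orthogonal matrix $Q \in \mathbb{R}^{n \times n}$ and all $x, y \in \mathbb{R}^n$:

(i) $\|Qx\| = \|x\|$

(ii) $(Qx)^\top (Qy) = x^\top y$

Proof:

- (ii) $(Qx)^\top (Qy) = x^\top Q^\top Q y = x^\top I y = x^\top y$.

- (i) Applying (ii) with $y = x$ gives $\|Qx\|^2 = (Qx)^\top (Qx) = x^\top x = \|x\|^2$. Taking the square root of both sides gives $\|Qx\| = \|x\|$.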
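A quick numerical check of both statements, as a sketch in plain Python. The orthogonal matrix used here is a 2x2 rotation by an arbitrary angle, and the vectors `x` and `y` are illustrative values; comparisons use a tolerance because of floating-point rounding.

```python
import math

theta = 0.7  # arbitrary angle in radians (illustrative)
Q = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

def matvec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def dot(u, v):
    """Standard inner product u^T v."""
    return sum(a * b for a, b in zip(u, v))

x, y = [3.0, 4.0], [-1.0, 2.0]
Qx, Qy = matvec(Q, x), matvec(Q, y)

# (i) norms agree, (ii) inner products agree, up to rounding.
print(math.isclose(math.sqrt(dot(Qx, Qx)), math.sqrt(dot(x, x))))
print(math.isclose(dot(Qx, Qy), dot(x, y)))
```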

Projections with Orthonormal Bases (Proposition 5.4.7)

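Let $S$ be a subspace of $\mathbb{R}^m$, and let $q_1, \dots, q_n$ be an orthonormal basis for $S$. Let $Q \in \mathbb{R}^{m \times n}$ be the matrix whose columns are $q_1, \dots, q_n$.

(i) For all $b \in \mathbb{R}^m$, the projection of $b$ onto $S$ is given by $\operatorname{proj}_S(b) = Pb$, where $P = QQ^\top$.

(ii) The least squares solution of $Qx = b$ is $\hat{x} = Q^\top b$.

Proof:

- (i) By Theorem 5.2.6, the projection matrix is $P = Q(Q^\top Q)^{-1} Q^\top$. Since $Q$ has orthonormal columns, $Q^\top Q = I$, so $P = QQ^\top$.

- (ii) The least squares solution is given by $\hat{x} = (Q^\top Q)^{-1} Q^\top b$. Since $Q^\top Q = I$, we have $\hat{x} = Q^\top b$.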
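With an orthonormal basis, both formulas reduce to inner products, with no matrix inversion needed. The sketch below illustrates this for a plane in $\mathbb{R}^3$; the basis vectors `q1`, `q2` and the vector `b` are illustrative assumptions, not from the lecture.

```python
import math

s = 1 / math.sqrt(2)
# Orthonormal basis of a plane S in R^3 (illustrative choice).
q1 = [s, s, 0.0]
q2 = [0.0, 0.0, 1.0]
b = [1.0, 3.0, 5.0]

def dot(u, v):
    """Standard inner product u^T v."""
    return sum(a * c for a, c in zip(u, v))

# Least squares solution x_hat = Q^T b: one inner product per basis vector.
x_hat = [dot(q1, b), dot(q2, b)]

# Projection Q Q^T b = x_hat[0] * q1 + x_hat[1] * q2.
proj = [x_hat[0] * a + x_hat[1] * c for a, c in zip(q1, q2)]

# The residual b - proj should be orthogonal to both basis vectors.
residual = [bi - pi for bi, pi in zip(b, proj)]

print(proj)                                   # approximately [2, 2, 5]
print(dot(residual, q1), dot(residual, q2))   # both approximately 0
```

The residual being orthogonal to $q_1$ and $q_2$ is what characterizes `proj` as the orthogonal projection of `b` onto $S$.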

Orthogonal Matrices and Rotations

Orthogonal matrices with determinant 1 (known as special orthogonal matrices) represent rotations in $\mathbb{R}^n$. Orthogonal matrices with determinant $-1$ additionally involve a reflection.

Key Properties of Orthogonal Matrices:

- Inverse equals Transpose: A defining characteristic of an orthogonal matrix $Q$ is that its inverse equals its transpose: $Q^{-1} = Q^\top$. This follows from $Q^\top Q = I$ for square $Q$, and it is crucial for understanding the connection to rotations.

- Transpose/Inverse of a Product: For any matrices $A$ and $B$ (of compatible dimensions), $(AB)^\top = B^\top A^\top$. If $A$ and $B$ are both invertible, then $(AB)^{-1} = B^{-1} A^{-1}$.

- Product of Orthogonal Matrices: If $A$ and $B$ are orthogonal, then $AB$ is also orthogonal: $(AB)^\top (AB) = B^\top A^\top A B = B^\top B = I$.
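The product rule can be illustrated with two 2x2 rotations, whose product is again a rotation (by the sum of the angles). This is a minimal sketch in plain Python; the angles and helper names are illustrative assumptions.

```python
import math

def rotation(theta):
    """2x2 matrix rotating counter-clockwise by theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*M)]

def matmul(A, B):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)]
            for row in A]

A, B = rotation(0.3), rotation(1.1)   # two orthogonal matrices
AB = matmul(A, B)

# (AB)^T (AB) should be the identity up to floating-point rounding.
gram = matmul(transpose(AB), AB)
print(gram)

# For rotations, the product is the rotation by the summed angle.
print(AB)
print(rotation(0.3 + 1.1))
```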

Why Orthogonal Matrices Represent Rotations:

- Preservation of Length (Norm): Orthogonal matrices preserve vector lengths. If $x$ is a vector and $Q$ is orthogonal, then $\|Qx\| = \|x\|$. This is a defining feature of rotations. (See the proof of Proposition 5.4.6.)

- Preservation of Inner Products (and Angles): Orthogonal matrices preserve inner products. If $Q$ is orthogonal, then $(Qx)^\top (Qy) = x^\top y$. Since angles between vectors are determined by their inner products and norms (both preserved by $Q$), orthogonal matrices preserve angles.

- Orthonormal Basis Transformation: The columns (and rows) of an orthogonal matrix $Q$ constitute an orthonormal basis. When $Q$ acts on the standard basis vectors, it maps them to a new orthonormal basis, effectively rotating (or reflecting) the entire coordinate system. Proof: Let $e_i$ be a standard basis vector. Then $Qe_i$ is the $i$-th column of $Q$, which is a vector in the new orthonormal basis.

- Determinant: The determinant of an orthogonal matrix is either 1 or -1. Proof: Since $Q^\top Q = I$, taking the determinant of both sides gives $\det(Q^\top)\det(Q) = \det(I) = 1$. Because $\det(Q^\top) = \det(Q)$, we have $\det(Q)^2 = 1$, so $\det(Q) = \pm 1$. A determinant of 1 indicates a pure rotation, while -1 signifies a rotation combined with a reflection. Note: We didn’t look at this in the lecture…
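As a small illustration of the determinant $-1$ case (an example chosen here, not from the lecture), consider the reflection across the first coordinate axis in $\mathbb{R}^2$, denoted $F$ for this example only:

$$
F = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix},
\qquad
F^\top F = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix},
\qquad
\det(F) = 1 \cdot (-1) - 0 \cdot 0 = -1.
$$

So $F$ is orthogonal and preserves lengths and angles, but it flips orientation and is therefore not a rotation.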

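Example in $\mathbb{R}^2$:

The standard rotation matrix $R_\theta = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}$ rotates vectors counterclockwise by $\theta$. It’s straightforward to confirm $R_\theta^\top R_\theta = I$ and $\det(R_\theta) = \cos^2\theta + \sin^2\theta = 1$, proving it’s a rotation.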

Additional Properties

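When $Q$ is square ($m = n$), $QQ^\top = I$, and any $b \in \mathbb{R}^n$ can be written as:

$$b = QQ^\top b = Q(Q^\top b)$$

This shows $b$ is a linear combination of the basis vectors $q_1, \dots, q_n$ where the coefficient of $q_i$ is $q_i^\top b$:

$$b = \sum_{i=1}^{n} (q_i^\top b)\, q_i$$

In the special case where $q_i = e_i$ (the standard basis vectors), we recover $b = \sum_{i=1}^{n} b_i e_i$.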
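A worked example of this expansion in $\mathbb{R}^2$, with an orthonormal basis and a vector $b$ chosen here purely for illustration:

$$
q_1 = \tfrac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ 1 \end{pmatrix},
\quad
q_2 = \tfrac{1}{\sqrt{2}}\begin{pmatrix} -1 \\ 1 \end{pmatrix},
\quad
b = \begin{pmatrix} 2 \\ 6 \end{pmatrix},
\qquad
q_1^\top b = \tfrac{8}{\sqrt{2}} = 4\sqrt{2},
\quad
q_2^\top b = \tfrac{4}{\sqrt{2}} = 2\sqrt{2}.
$$

$$
(q_1^\top b)\, q_1 + (q_2^\top b)\, q_2
= 4\sqrt{2} \cdot \tfrac{1}{\sqrt{2}}\begin{pmatrix} 1 \\ 1 \end{pmatrix}
+ 2\sqrt{2} \cdot \tfrac{1}{\sqrt{2}}\begin{pmatrix} -1 \\ 1 \end{pmatrix}
= \begin{pmatrix} 4 \\ 4 \end{pmatrix} + \begin{pmatrix} -2 \\ 2 \end{pmatrix}
= \begin{pmatrix} 2 \\ 6 \end{pmatrix} = b.
$$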