Lecture from 25.10.2024 | Video: Videos ETHZ

Vector Spaces

What exactly is a vector? A common first answer is "an element of $\mathbb{R}^n$." While true, this is merely an example of a vector, not a definition. We need a more precise and more general understanding.

Definition of a Vector Space (Definition 4.1)

A vector is an element of a vector space. Vector spaces are characterized by two key operations on their elements: vector addition and scalar multiplication. These operations must adhere to specific rules to ensure the space behaves in a consistent and predictable manner.

Formal Definition: A vector space is a triple $(V, +, \cdot)$ where:

- $V$: A set containing the vectors.

- $+ : V \times V \to V$: A function representing vector addition. It takes two vectors from $V$ and returns another vector in $V$.

- $\cdot : \mathbb{R} \times V \to V$: A function representing scalar multiplication. It takes a real number (scalar) and a vector from $V$ and returns another vector in $V$.

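These operations must satisfy the following eight axioms for all $\mathbf{u}, \mathbf{v}, \mathbf{w} \in V$ and all $\lambda, \mu \in \mathbb{R}$:

- Commutativity of addition (Axiom 1): $\mathbf{v} + \mathbf{w} = \mathbf{w} + \mathbf{v}$

- Associativity of addition (Axiom 2): $\mathbf{u} + (\mathbf{v} + \mathbf{w}) = (\mathbf{u} + \mathbf{v}) + \mathbf{w}$

- Existence of a zero vector (Axiom 3): There exists a vector $\mathbf{0} \in V$ such that $\mathbf{v} + \mathbf{0} = \mathbf{v}$ for all $\mathbf{v} \in V$.

- Existence of additive inverses (Axiom 4): For every $\mathbf{v} \in V$, there exists a vector $-\mathbf{v} \in V$ such that $\mathbf{v} + (-\mathbf{v}) = \mathbf{0}$.

- Scalar multiplication identity (Axiom 5): $1 \cdot \mathbf{v} = \mathbf{v}$

- Compatibility of scalar multiplication (Axiom 6): $(\lambda \mu) \mathbf{v} = \lambda (\mu \mathbf{v})$

- Distributivity of scalar multiplication over vector addition (Axiom 7): $\lambda (\mathbf{v} + \mathbf{w}) = \lambda \mathbf{v} + \lambda \mathbf{w}$

- Distributivity of scalar multiplication over scalar addition (Axiom 8): $(\lambda + \mu) \mathbf{v} = \lambda \mathbf{v} + \mu \mathbf{v}$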

Examples of Vector Spaces

We’ll now explore concrete examples of vector spaces, clarifying how the abstract definition applies in specific cases.

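The Real Coordinate Space: $\mathbb{R}^n$ (Observation 4.2)

The set $\mathbb{R}^n$ consists of all $n$-tuples of real numbers. We represent elements (vectors) in $\mathbb{R}^n$ as ordered lists:

$\mathbf{v} = (v_1, v_2, \ldots, v_n)$, where $v_1, \ldots, v_n \in \mathbb{R}$.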

Vector addition and scalar multiplication are defined component-wise:

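- Addition (Definition 1.1): If $\mathbf{v} = (v_1, \ldots, v_n)$ and $\mathbf{w} = (w_1, \ldots, w_n)$, then $\mathbf{v} + \mathbf{w} = (v_1 + w_1, \ldots, v_n + w_n)$. For example, in $\mathbb{R}^2$, $(1, 2) + (3, 4) = (4, 6)$.

- Scalar Multiplication (Definition 1.3): If $\mathbf{v} = (v_1, \ldots, v_n)$ and $\lambda \in \mathbb{R}$, then $\lambda \mathbf{v} = (\lambda v_1, \ldots, \lambda v_n)$. For example, in $\mathbb{R}^2$, $3 \cdot (1, 2) = (3, 6)$.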

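$\mathbb{R}^n$ forms a vector space because these operations satisfy all eight axioms. Each axiom reduces, component by component, to the corresponding rule for real numbers; the zero vector is $(0, \ldots, 0)$ and the additive inverse of $\mathbf{v}$ is $(-v_1, \ldots, -v_n)$. $\mathbb{R}^n$ is often the first and most familiar example encountered when learning about vector spaces.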
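The component-wise operations can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: vectors are plain tuples, the helper names `add`, `scale`, and `check_axioms` are hypothetical, and exact `Fraction` arithmetic is used so that equality checks are reliable. `check_axioms` only spot-checks the eight axioms on concrete inputs. It does not prove them.

```python
from fractions import Fraction

# Assumption for this sketch: a vector in R^n is a tuple of numbers.

def add(v, w):
    """Component-wise addition: (v + w)_i = v_i + w_i."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same length")
    return tuple(vi + wi for vi, wi in zip(v, w))

def scale(lam, v):
    """Component-wise scalar multiplication: (lam * v)_i = lam * v_i."""
    return tuple(lam * vi for vi in v)

def check_axioms(u, v, w, lam, mu):
    """Spot-check the eight axioms on concrete inputs (a sanity check, not a proof)."""
    zero = tuple(0 for _ in v)
    neg_v = scale(-1, v)
    return all([
        add(v, w) == add(w, v),                                      # Axiom 1
        add(u, add(v, w)) == add(add(u, v), w),                      # Axiom 2
        add(v, zero) == v,                                           # Axiom 3
        add(v, neg_v) == zero,                                       # Axiom 4
        scale(1, v) == v,                                            # Axiom 5
        scale(lam * mu, v) == scale(lam, scale(mu, v)),              # Axiom 6
        scale(lam, add(v, w)) == add(scale(lam, v), scale(lam, w)),  # Axiom 7
        scale(lam + mu, v) == add(scale(lam, v), scale(mu, v)),      # Axiom 8
    ])

print(add((1, 2), (3, 4)))   # (4, 6)
print(scale(3, (1, 2)))      # (3, 6)
print(check_axioms((1, 2, 3), (Fraction(1, 2), -1, 0), (4, 5, 6), Fraction(2, 3), -3))  # True
```

Floating-point numbers would also work for the operations themselves. However, rounding can make exact checks such as associativity fail, which is why the spot-check uses integers and fractions.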

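The Space of Polynomials: $\mathbb{R}[x]$ (Lemma 4.4 and Definition 4.3)

The set $\mathbb{R}[x]$ contains all polynomials with real coefficients. A polynomial is expressed as:

$p = \sum_{i=0}^{m} p_i x^i$, where $m \in \mathbb{N}$ and $p_0, p_1, \ldots, p_m \in \mathbb{R}$. The largest $i$ such that $p_i \neq 0$ is the degree of the polynomial. The zero polynomial (all $p_i = 0$) has degree $-1$ by convention (Definition 4.3).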

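Polynomial addition and scalar multiplication are defined as:

- Addition: $p + q = \sum_{i=0}^{\max(m, n)} (p_i + q_i) x^i$, where $p$ has degree $m$, $q$ has degree $n$, and coefficients beyond a polynomial's degree are taken to be $0$. Example: $(1 + 2x) + (3 - x + x^2) = 4 + x + x^2$.

- Scalar Multiplication: $\lambda p = \sum_{i=0}^{m} (\lambda p_i) x^i$ for $\lambda \in \mathbb{R}$. Example: $2 \cdot (1 - x^2) = 2 - 2x^2$.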

$\mathbb{R}[x]$ constitutes a vector space (Lemma 4.4). Addition and scalar multiplication act on each coefficient separately, so the eight axioms follow from the corresponding rules for real numbers, exactly as for $\mathbb{R}^n$. The zero vector is the zero polynomial, and the additive inverse of $p$ is $-p = \sum_{i=0}^{m} (-p_i) x^i$. This example demonstrates that vector spaces can contain more abstract objects than tuples of numbers or matrices.
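The following minimal Python sketch makes the coefficient-wise operations concrete. It assumes that a polynomial $p = p_0 + p_1 x + \ldots + p_m x^m$ is stored as the list `[p_0, p_1, ..., p_m]`. The helper names `trim`, `degree`, `poly_add`, and `poly_scale` are hypothetical, chosen for this illustration only.

```python
# Assumption for this sketch: a polynomial is the list of its coefficients
# [p_0, p_1, ..., p_m], lowest power first.

def trim(p):
    """Drop trailing zero coefficients so equal polynomials compare equal."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p

def degree(p):
    """Largest i with p_i != 0; the zero polynomial gets degree -1 by convention."""
    return len(trim(p)) - 1

def poly_add(p, q):
    """Add coefficient-wise, padding the shorter list with zeros."""
    n = max(len(p), len(q))
    p = list(p) + [0] * (n - len(p))
    q = list(q) + [0] * (n - len(q))
    return trim([pi + qi for pi, qi in zip(p, q)])

def poly_scale(lam, p):
    """Multiply every coefficient by the scalar lam."""
    return trim([lam * pi for pi in p])

# (1 + 2x) + (3 - x + x^2) = 4 + x + x^2
print(poly_add([1, 2], [3, -1, 1]))   # [4, 1, 1]
# 2 * (1 - x^2) = 2 - 2x^2
print(poly_scale(2, [1, 0, -1]))      # [2, 0, -2]
print(degree([0, 0]))                 # -1
```

Trimming trailing zeros is a design choice. It makes `[1, 0]` and `[1]` represent the same polynomial, and the degree convention for the zero polynomial then falls out as `len([]) - 1 = -1`.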


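The Space of Real Matrices: $\mathbb{R}^{m \times n}$ (Lemma 4.5)

The set $\mathbb{R}^{m \times n}$ comprises all $m \times n$ matrices with real entries. Matrices are rectangular arrays of numbers:

$A = \begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & \ddots & \vdots \\ a_{m1} & \cdots & a_{mn} \end{pmatrix}$, where $a_{ij} \in \mathbb{R}$.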

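Standard matrix addition and scalar multiplication are defined as:

- Addition (Definition 2.2): If $A$ and $B$ are two $m \times n$ matrices, then $A + B$ is the $m \times n$ matrix with entries $(A + B)_{ij} = a_{ij} + b_{ij}$. For example, $\begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} + \begin{pmatrix} 5 & 6 \\ 7 & 8 \end{pmatrix} = \begin{pmatrix} 6 & 8 \\ 10 & 12 \end{pmatrix}$.

- Scalar Multiplication (Definition 2.2): If $A$ is an $m \times n$ matrix and $\lambda \in \mathbb{R}$, then $\lambda A$ is the $m \times n$ matrix with entries $(\lambda A)_{ij} = \lambda a_{ij}$. For example, $2 \begin{pmatrix} 1 & 2 \\ 3 & 4 \end{pmatrix} = \begin{pmatrix} 2 & 4 \\ 6 & 8 \end{pmatrix}$.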

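$\mathbb{R}^{m \times n}$ forms a vector space, as these operations fulfill all the vector space axioms. The operations act entry by entry, so $\mathbb{R}^{m \times n}$ behaves like $\mathbb{R}^{mn}$ with its components arranged in a rectangle.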
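Entry-wise matrix addition and scaling can be sketched the same way. This minimal Python version assumes a matrix is a list of rows (a representation chosen for this example, not a library type), and the names `mat_add` and `mat_scale` are hypothetical.

```python
# Assumption for this sketch: an m x n matrix is a list of m rows of n numbers.

def mat_add(A, B):
    """Entry-wise sum (A + B)_ij = a_ij + b_ij; A and B must have the same shape."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same shape")
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mat_scale(lam, A):
    """Entry-wise scaling (lam * A)_ij = lam * a_ij."""
    return [[lam * a for a in row] for row in A]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(mat_add(A, B))    # [[6, 8], [10, 12]]
print(mat_scale(2, A))  # [[2, 4], [6, 8]]
```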

Uniqueness of the Zero Vector (Fact 4.6)

Within any vector space, the zero vector is unique. This can be proven directly from the axioms.

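Proof: Suppose that $\mathbf{0}$ and $\mathbf{0}'$ are both zero vectors. We will show that they must be equal.

- Using Axiom 3 for $\mathbf{0}$: Since $\mathbf{0}$ is a zero vector, $\mathbf{v} + \mathbf{0} = \mathbf{v}$ for every vector $\mathbf{v}$ in the space.

- Using Axiom 3 for $\mathbf{0}'$: Similarly, since $\mathbf{0}'$ is a zero vector, $\mathbf{v} + \mathbf{0}' = \mathbf{v}$ for every vector $\mathbf{v}$ in the space.

- Choosing $\mathbf{v}$: Apply the first property with $\mathbf{v} = \mathbf{0}'$ and the second with $\mathbf{v} = \mathbf{0}$:

  - $\mathbf{0}' + \mathbf{0} = \mathbf{0}'$ (Axiom 3 for $\mathbf{0}$, with $\mathbf{v} = \mathbf{0}'$)

  - $\mathbf{0} + \mathbf{0}' = \mathbf{0}$ (Axiom 3 for $\mathbf{0}'$, with $\mathbf{v} = \mathbf{0}$)

- Applying Commutativity (Axiom 1): The left-hand sides of these two equations are equal: $\mathbf{0}' + \mathbf{0} = \mathbf{0} + \mathbf{0}'$.

- Therefore: Combining the three equations, we get $\mathbf{0}' = \mathbf{0}' + \mathbf{0} = \mathbf{0} + \mathbf{0}' = \mathbf{0}$.

Hence any two zero vectors coincide, which proves that the zero vector in any vector space is unique.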
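The proof only uses Axiom 3 and Axiom 1, so it can be machine-checked in a very small setting. The following Lean 4 sketch uses core Lean without Mathlib. It assumes only a type `V` with an addition and a commutativity hypothesis. The names `zero_unique`, `comm`, `hz`, and `hz'` are chosen for this example.

```lean
-- Any two elements that act as a right identity for a commutative `+` are equal.
theorem zero_unique {V : Type} [Add V]
    (comm : ∀ a b : V, a + b = b + a)      -- Axiom 1
    (z z' : V)
    (hz : ∀ v : V, v + z = v)              -- Axiom 3 for z
    (hz' : ∀ v : V, v + z' = v) :          -- Axiom 3 for z'
    z = z' :=
  calc z = z + z' := (hz' z).symm          -- Axiom 3 for z', with v = z
    _ = z' + z := comm z z'                -- Axiom 1
    _ = z' := hz z'                        -- Axiom 3 for z, with v = z'
```

Each `calc` step corresponds to one line of the written proof, read in the direction $\mathbf{0} = \mathbf{0} + \mathbf{0}' = \mathbf{0}' + \mathbf{0} = \mathbf{0}'$.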

Abuse of Notation:

Formally, a vector space is the triple $(V, +, \cdot)$: the set $V$ together with the specific operations of vector addition ($+$) and scalar multiplication ($\cdot$). This notation highlights the components that define a vector space.

However, for brevity and convenience, we usually “abuse notation” and simply write $V$ to refer to the entire vector space, implicitly assuming that the associated operations ($+$ and $\cdot$) are understood. This convention is standard in linear algebra and allows for more concise statements.

For instance, instead of saying “Let $(V, +, \cdot)$ be a vector space,” we simply say “Let $V$ be a vector space.” It is understood from context that $V$ refers to a set equipped with specific addition and scalar multiplication operations.

While technically an abuse of notation, this simplification does not introduce ambiguity in most contexts and makes the presentation more compact.

Subspaces (Definition 4.8)

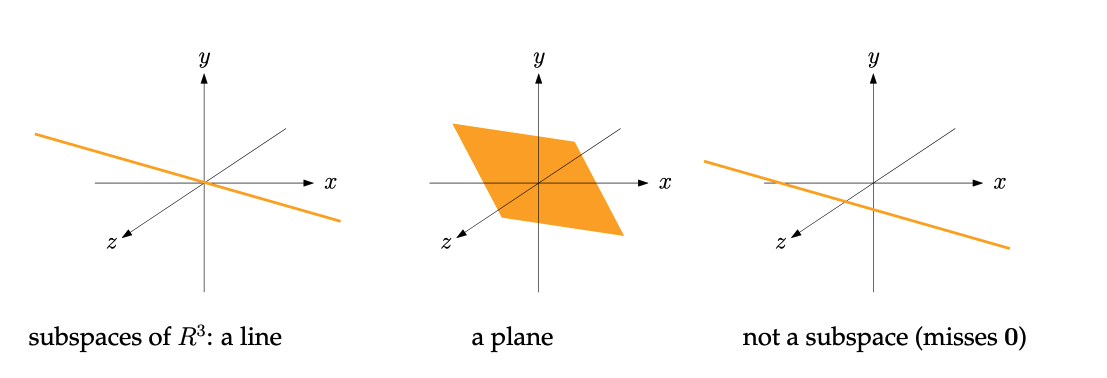

The concept of a subspace is crucial to understanding vector spaces. A subspace is a subset of a vector space that is itself a vector space under the same operations. This means that the subset must be closed under vector addition and scalar multiplication.

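Formal Definition: Let $V$ be a vector space. A nonempty subset $U \subseteq V$ is a subspace of $V$ if the following two axioms hold for all $\mathbf{v}, \mathbf{w} \in U$ and all $\lambda \in \mathbb{R}$:

(i) Closure under addition: $\mathbf{v} + \mathbf{w} \in U$; (ii) Closure under scalar multiplication: $\lambda \mathbf{v} \in U$.

Important Note: The zero vector $\mathbf{0}$ always belongs to a subspace $U$. This follows directly from axiom (ii). Since $U$ is nonempty, it contains some vector $\mathbf{v}$, and then $\mathbf{0} = 0 \cdot \mathbf{v} \in U$. Here we use the identity $0 \cdot \mathbf{v} = \mathbf{0}$, which follows from the vector space axioms.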

Examples:

- The zero subspace: The set containing only the zero vector, $\{\mathbf{0}\}$, is always a subspace of any vector space.

- Lines and Planes in $\mathbb{R}^3$: In three-dimensional space, lines and planes passing through the origin are subspaces. They are closed under vector addition and scalar multiplication: the sum of two vectors on the line or plane remains on it, and scaling a vector on the line or plane keeps it there.

Visualizing Subspaces:

- Lines: Lines through the origin are the simplest nonzero subspaces of $\mathbb{R}^3$.

- Planes: Planes passing through the origin also form subspaces of $\mathbb{R}^3$.

- Not a Subspace: A line or plane not passing through the origin is not a subspace. It does not contain the zero vector, which every subspace must contain. Concretely, closure under scalar multiplication fails: for any $\mathbf{v}$ in the set, $0 \cdot \mathbf{v} = \mathbf{0}$ lies outside it.
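The contrast between a line through the origin and a shifted line can be spot-checked numerically. This sketch works in $\mathbb{R}^2$ for brevity, and the two lines $y = 2x$ and $y = 2x + 1$ are hypothetical examples chosen for illustration. Sample points only illustrate closure; they do not prove it.

```python
from fractions import Fraction

# Hypothetical example lines in R^2: y = 2x passes through the origin,
# y = 2x + 1 does not.

def on_line(p):
    x, y = p
    return y == 2 * x

def on_shifted_line(p):
    x, y = p
    return y == 2 * x + 1

def add(v, w):
    return (v[0] + w[0], v[1] + w[1])

def scale(lam, v):
    return (lam * v[0], lam * v[1])

# Points on y = 2x: their sum and their multiples stay on the line.
p, q = (1, 2), (-3, -6)
print(on_line(add(p, q)), on_line(scale(Fraction(5, 2), p)))   # True True

# Points on y = 2x + 1: the sum leaves the line, and 0 * r = (0, 0) is not on it.
r, s = (0, 1), (1, 3)
print(on_shifted_line(add(r, s)))     # False
print(on_shifted_line(scale(0, r)))   # False
```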

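Subspaces of $\mathbb{R}^m$ (Lemma 4.11)

Lemma 4.11: Let $A$ be an $m \times n$ matrix. Then $\mathbf{C}(A) = \{A\mathbf{x} : \mathbf{x} \in \mathbb{R}^n\}$ (the column space of $A$) is a subspace of $\mathbb{R}^m$.

Proof:

- Closure under addition: Let $\mathbf{v}, \mathbf{w} \in \mathbf{C}(A)$, which means there exist $\mathbf{x}, \mathbf{y} \in \mathbb{R}^n$ such that $\mathbf{v} = A\mathbf{x}$ and $\mathbf{w} = A\mathbf{y}$. Then $\mathbf{v} + \mathbf{w} = A\mathbf{x} + A\mathbf{y} = A(\mathbf{x} + \mathbf{y})$. Since $\mathbf{x} + \mathbf{y} \in \mathbb{R}^n$, we have $\mathbf{v} + \mathbf{w} \in \mathbf{C}(A)$.

- Closure under scalar multiplication: Let $\mathbf{v} \in \mathbf{C}(A)$ (so $\mathbf{v} = A\mathbf{x}$ for some $\mathbf{x} \in \mathbb{R}^n$) and let $\lambda \in \mathbb{R}$. Then $\lambda \mathbf{v} = \lambda (A\mathbf{x}) = A(\lambda \mathbf{x})$. Since $\lambda \mathbf{x} \in \mathbb{R}^n$, we have $\lambda \mathbf{v} \in \mathbf{C}(A)$.

Finally, $\mathbf{C}(A)$ is nonempty, since it contains $A\mathbf{0} = \mathbf{0}$. Therefore, $\mathbf{C}(A)$ satisfies both axioms and is a subspace of $\mathbb{R}^m$.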
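The two identities the proof relies on, $A\mathbf{x} + A\mathbf{y} = A(\mathbf{x} + \mathbf{y})$ and $\lambda(A\mathbf{x}) = A(\lambda\mathbf{x})$, can be spot-checked on a small integer example. This is a minimal pure-Python sketch. The matrix, the vectors, and the helper names `matvec`, `vadd`, and `vscale` are hypothetical choices for illustration, and a numerical check is not a proof.

```python
# Assumption for this sketch: matrices are lists of rows, vectors are lists.

def matvec(A, x):
    """Return A x for A given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def vadd(v, w):
    return [vi + wi for vi, wi in zip(v, w)]

def vscale(lam, v):
    return [lam * vi for vi in v]

A = [[1, 2, 0],
     [0, 1, -1]]          # a 2 x 3 matrix, so C(A) is a subset of R^2
x = [1, 0, 2]
y = [-1, 3, 1]
lam = 4

v, w = matvec(A, x), matvec(A, y)                     # two vectors in C(A)
print(vadd(v, w) == matvec(A, vadd(x, y)))            # True: v + w = A(x + y)
print(vscale(lam, v) == matvec(A, vscale(lam, x)))    # True: lam v = A(lam x)
```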

Subspaces and Vector Spaces (Lemma 4.12)

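Lemma 4.12: Let $V$ be a vector space and $U$ a subspace of $V$. Then $U$ is also a vector space (with the same “+” and “·” as $V$).

Proof: By the closure axioms (i) and (ii), $+$ and $\cdot$ restricted to $U$ are functions $U \times U \to U$ and $\mathbb{R} \times U \to U$. The axioms that are equations holding for all vectors (commutativity, associativity, the scalar identity, compatibility, and both distributive laws) hold in $U$ because they hold in $V$ and $U \subseteq V$. The two existence axioms also hold: the zero vector $\mathbf{0}$ lies in $U$ (see the note after the definition), and for every $\mathbf{v} \in U$, its inverse $-\mathbf{v} = (-1) \cdot \mathbf{v}$ lies in $U$ by closure under scalar multiplication.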

Subspaces of…

Now, let’s examine some specific examples of subspaces within various familiar vector spaces.

… $\mathbb{R}[x]$: The Space of Polynomials

The polynomials without constant term:

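The set of polynomials of the form $p = \sum_{i=1}^{m} p_i x^i$ (where $p_1, \ldots, p_m \in \mathbb{R}$), that is, those with constant term $p_0 = 0$, forms a subspace of $\mathbb{R}[x]$. It is closed under addition and scalar multiplication: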

- Adding two polynomials without constant terms results in another polynomial without a constant term.

- Multiplying a polynomial without a constant term by a scalar also yields a polynomial without a constant term.

The quadratic polynomials:

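The set of quadratic polynomials of the form $p = ax^2 + bx + c$ (where $a, b, c \in \mathbb{R}$) forms a subspace of $\mathbb{R}[x]$. Here "quadratic" means degree at most 2, since $a = 0$ is allowed. The polynomials of degree exactly 2 do not form a subspace: for example, $x^2 + (-x^2) = 0$ has degree $-1$. This set is a special case of the polynomials of degree at most $d$, which also form a subspace.

Furthermore, this subspace is isomorphic to $\mathbb{R}^3$.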

Isomorphism means that there exists a one-to-one and onto mapping (a bijection) between the two spaces that preserves the structure of the spaces. In other words, the two spaces “behave” the same way, even though their elements are represented differently.

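For quadratic polynomials, one such isomorphism is the map $T$ that sends a polynomial to its coefficient vector:

$T(ax^2 + bx + c) = (a, b, c) \in \mathbb{R}^3$.

This mapping preserves addition and scalar multiplication: for all quadratic polynomials $p, q$ and all $\lambda \in \mathbb{R}$,

$T(p + q) = T(p) + T(q)$ and $T(\lambda p) = \lambda T(p)$.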

Therefore, we can think of quadratic polynomials as being “equivalent” to vectors in . They have the same structure and behave in the same way under the operations of addition and scalar multiplication.
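The coefficient map can be spot-checked in Python. This minimal sketch makes several assumptions for illustration: a polynomial of degree at most 2 is stored as a dict from exponent to coefficient (for example, $3x^2 - x + 5$ becomes `{2: 3, 1: -1, 0: 5}`), and the names `T`, `T_inv`, `poly_add`, `poly_scale`, `vadd`, and `vscale` are hypothetical. The map sends $ax^2 + bx + c$ to the triple $(a, b, c)$.

```python
# Assumption for this sketch: a polynomial of degree <= 2 is a dict
# {exponent: coefficient}; a vector in R^3 is a tuple.

def poly_add(p, q):
    """Add the coefficients of equal powers of x."""
    return {k: p.get(k, 0) + q.get(k, 0) for k in set(p) | set(q)}

def poly_scale(lam, p):
    return {k: lam * c for k, c in p.items()}

def T(p):
    """T(a x^2 + b x + c) = (a, b, c)."""
    return (p.get(2, 0), p.get(1, 0), p.get(0, 0))

def T_inv(v):
    """Inverse map (a, b, c) -> a x^2 + b x + c."""
    a, b, c = v
    return {2: a, 1: b, 0: c}

def vadd(v, w):
    return tuple(vi + wi for vi, wi in zip(v, w))

def vscale(lam, v):
    return tuple(lam * vi for vi in v)

p = {2: 3, 1: -1, 0: 5}   # 3x^2 - x + 5
q = {1: 4, 0: -2}         # 4x - 2
print(T(poly_add(p, q)) == vadd(T(p), T(q)))    # True
print(T(poly_scale(2, p)) == vscale(2, T(p)))   # True
print(T(T_inv((7, 0, 1))))                      # (7, 0, 1)
```

Checking that `T_inv` undoes `T` illustrates the bijection part of the isomorphism, and the first two checks illustrate that $T$ preserves the operations.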

Generalization:

The set of polynomials of degree less than or equal to $d$ (where $d \geq 0$ is a fixed integer) also forms a subspace of $\mathbb{R}[x]$. Adding two such polynomials or scaling one never creates a term $x^i$ with $i > d$.

Using the same idea as for quadratic polynomials (map a polynomial to its $d + 1$ coefficients), the subspace of polynomials of degree at most $d$ is isomorphic to $\mathbb{R}^{d+1}$.
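This Python sketch contrasts "degree at most $d$" with "degree exactly $d$". It assumes coefficient lists `[p_0, p_1, ..., p_m]` as the representation. The helper names, including `has_degree_at_most`, are hypothetical. The sample polynomials show that the sum of two degree-2 polynomials can drop to degree 1: it stays in the "at most 2" set but leaves the "exactly 2" set.

```python
# Assumption for this sketch: a polynomial is [p_0, p_1, ..., p_m], lowest power first.

def degree(p):
    """Largest i with p_i != 0, or -1 for the zero polynomial."""
    for i in range(len(p) - 1, -1, -1):
        if p[i] != 0:
            return i
    return -1

def poly_add(p, q):
    n = max(len(p), len(q))
    p = list(p) + [0] * (n - len(p))
    q = list(q) + [0] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def poly_scale(lam, p):
    return [lam * c for c in p]

def has_degree_at_most(p, d):
    """Membership test for the set of polynomials of degree <= d."""
    return degree(p) <= d

p = [1, 0, 1]          # 1 + x^2, degree 2
q = [2, 3, -1]         # 2 + 3x - x^2, degree 2
s = poly_add(p, q)     # 3 + 3x
print(has_degree_at_most(s, 2), has_degree_at_most(poly_scale(0, p), 2))  # True True
print(degree(s))       # 1, so s is not in the "degree exactly 2" set
```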

… $\mathbb{R}^{2 \times 2}$: The Space of Matrices

The symmetric matrices:

The set of matrices of the form $\begin{pmatrix} a & b \\ b & c \end{pmatrix}$ (where $a, b, c \in \mathbb{R}$) forms a subspace of $\mathbb{R}^{2 \times 2}$. Adding two symmetric matrices results in another symmetric matrix, and scaling a symmetric matrix by a scalar also produces a symmetric matrix.

The matrices of trace 0:

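The set of matrices of the form $\begin{pmatrix} a & b \\ c & -a \end{pmatrix}$ (where $a, b, c \in \mathbb{R}$) forms a subspace of $\mathbb{R}^{2 \times 2}$. The trace of a matrix is the sum of its diagonal elements. It satisfies $\operatorname{tr}(A + B) = \operatorname{tr}(A) + \operatorname{tr}(B)$ and $\operatorname{tr}(\lambda A) = \lambda \operatorname{tr}(A)$, so sums and scalar multiples of trace-0 matrices again have trace 0.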
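Both matrix examples can be spot-checked with a short Python sketch. It assumes $2 \times 2$ matrices stored as lists of rows, and the sample matrices and helper names (`is_symmetric`, `trace`) are hypothetical choices for illustration. Sample checks illustrate closure but do not prove it.

```python
# Assumption for this sketch: a 2 x 2 matrix is a list of two rows.

def mat_add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mat_scale(lam, A):
    return [[lam * a for a in row] for row in A]

def is_symmetric(A):
    """True if a_ij == a_ji for all i, j (A equals its transpose)."""
    n = len(A)
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(n))

def trace(A):
    """Sum of the diagonal entries."""
    return sum(A[i][i] for i in range(len(A)))

S1 = [[1, 2], [2, 5]]
S2 = [[0, -1], [-1, 3]]
print(is_symmetric(mat_add(S1, S2)), is_symmetric(mat_scale(-4, S1)))  # True True

T1 = [[3, 1], [7, -3]]
T2 = [[-1, 0], [2, 1]]
print(trace(mat_add(T1, T2)), trace(mat_scale(5, T1)))  # 0 0
```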

… $\mathbb{R}^n$ (General Case)

Many subspaces are never given a special name or described in detail. For instance, in $\mathbb{R}^n$, you can have:

- Lines and Planes: As we saw earlier, these are subspaces if they pass through the origin.

- The Span of a set of vectors: If you have a set of vectors in $\mathbb{R}^n$, their span (the set of all linear combinations of those vectors) is always a subspace.

These examples illustrate that subspaces are abundant and often represent interesting and meaningful subsets within vector spaces. The concept of subspaces is crucial for analyzing, understanding, and solving problems in linear algebra.
