Lecture from 08.11.2024 | Video: Videos ETHZ

Orthogonal Complementary Subspaces

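Recall that for a subspace $V$ of $\mathbb{R}^n$, its orthogonal complement is defined as:

$$V^\perp = \{\, w \in \mathbb{R}^n : w^\top v = 0 \text{ for all } v \in V \,\}$$

$V^\perp$ is also a subspace of $\mathbb{R}^n$.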

Key Properties of Orthogonal Complements:

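If $v_1, \dots, v_k$ is a basis for $V$ and $w_1, \dots, w_l$ is a basis for $V^\perp$, then:

- Dimensionality: $\dim V + \dim V^\perp = k + l = n$ (The dimensions of a subspace and its orthogonal complement add up to the dimension of the ambient space).

- Basis of $\mathbb{R}^n$: $\{v_1, \dots, v_k, w_1, \dots, w_l\}$ forms a basis for $\mathbb{R}^n$.

- Unique Decomposition: Every vector $u \in \mathbb{R}^n$ can be uniquely expressed as $u = v + w$, where $v \in V$ and $w \in V^\perp$.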

Also recall the crucial relationship between the nullspace and row space of a matrix $A \in \mathbb{R}^{m \times n}$: $N(A) = C(A^\top)^\perp$.
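As a small worked illustration of these properties (the subspace and the vector below are chosen for this example and are not taken from the lecture), take the line $V$ spanned by $(1, 1, 0)^\top$ in $\mathbb{R}^3$:

$$V = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ 1 \\ 0 \end{pmatrix} \right\}, \qquad V^\perp = \{\, w \in \mathbb{R}^3 : w_1 + w_2 = 0 \,\} = \operatorname{span}\left\{ \begin{pmatrix} 1 \\ -1 \\ 0 \end{pmatrix}, \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix} \right\}$$

The dimensions add up, $\dim V + \dim V^\perp = 1 + 2 = 3$, and the vector $u = (3, 1, 2)^\top$ splits as

$$\begin{pmatrix} 3 \\ 1 \\ 2 \end{pmatrix} = \underbrace{\begin{pmatrix} 2 \\ 2 \\ 0 \end{pmatrix}}_{\in V} + \underbrace{\begin{pmatrix} 1 \\ -1 \\ 2 \end{pmatrix}}_{\in V^\perp}$$

The second summand is indeed orthogonal to $(1, 1, 0)^\top$, since $1 \cdot 1 + (-1) \cdot 1 + 2 \cdot 0 = 0$.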

Decomposition of $\mathbb{R}^n$

Lemma: Double Orthogonal Complement

For any subspace $V$ of $\mathbb{R}^n$, $(V^\perp)^\perp = V$. (The orthogonal complement of the orthogonal complement of a subspace is the subspace itself.)

Proof

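Let $v_1, \dots, v_k$ and $w_1, \dots, w_l$ be bases for $V$ and $V^\perp$, respectively. We know $k + l = n$.

By definition of orthogonal complements, $v_i^\top w_j = 0$ for all $i$ and $j$. Consider $(V^\perp)^\perp$: the set of all vectors orthogonal to every vector in $V^\perp$. Since $v_i^\top w_j = 0$ for every $j$, each $v_i$ belongs to $(V^\perp)^\perp$. Therefore, $V \subseteq (V^\perp)^\perp$.

Now, $\dim V = k$ and $\dim (V^\perp)^\perp = n - \dim V^\perp = n - l = k$. Since $V$ is contained within $(V^\perp)^\perp$ and they have the same dimension, we conclude $V = (V^\perp)^\perp$.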

Corollary: Decomposition of $\mathbb{R}^n$

For any subspace $V$ of $\mathbb{R}^n$, $\mathbb{R}^n = V + V^\perp$. This means any vector in $\mathbb{R}^n$ can be written as the sum of a vector in $V$ and a vector in $V^\perp$.

The Set of All Solutions to a System of Linear Equations

Corollary: Nullspace and Column Space of Transpose

For a matrix $A \in \mathbb{R}^{m \times n}$, $N(A) = C(A^\top)^\perp$ and $C(A^\top) = N(A)^\perp$.

Understanding Linear Systems

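Consider the subspaces $N(A)$ (the nullspace of $A$) and $C(A^\top)$ (the row space of $A$). These are orthogonal complements in $\mathbb{R}^n$. Consequently, any vector $x \in \mathbb{R}^n$ can be uniquely decomposed as $x = x_0 + x_1$, where $x_0 \in N(A)$ and $x_1 \in C(A^\top)$.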

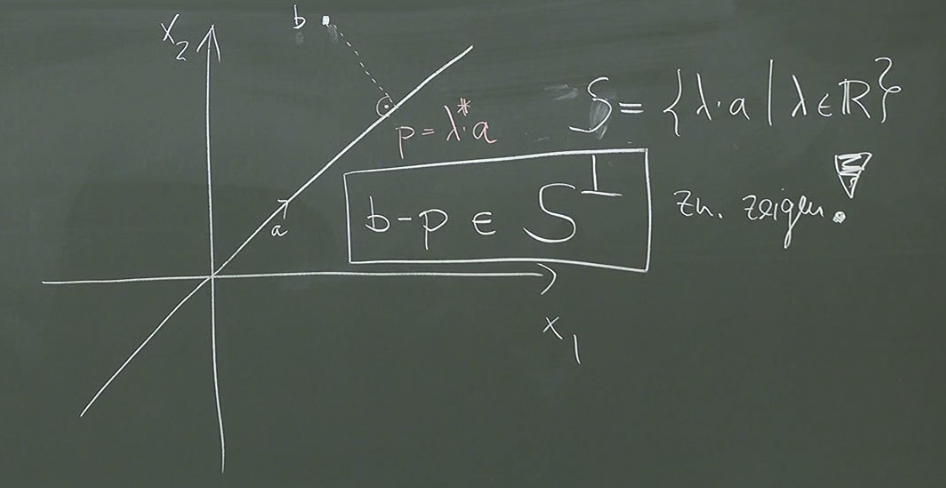

Theorem: Solution Set of $Ax = b$

The set of all solutions to the linear system $Ax = b$ is given by $x_p + N(A) = \{\, x_p + x_0 : x_0 \in N(A) \,\}$, where $x_p$ is a particular solution satisfying $A x_p = b$.

Proof

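- ($\subseteq$) If $x$ is a solution, then $A(x - x_p) = Ax - A x_p = b - b = 0$. This means $x - x_p \in N(A)$, so $x - x_p = x_0$ for some $x_0 \in N(A)$, and thus $x = x_p + x_0$.

- ($\supseteq$) Conversely, if $x \in x_p + N(A)$, then $x = x_p + x_0$ for some $x_0 \in N(A)$. Then $Ax = A x_p + A x_0 = b + 0 = b$, showing that $x$ is indeed a solution.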
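The theorem can be checked on a small system. The sketch below uses a hypothetical $2 \times 3$ matrix `A`, a right-hand side `b`, a particular solution `x_p`, and a nullspace vector `n0`, all chosen for illustration; it relies only on Python's standard `fractions` module so the arithmetic is exact.

```python
from fractions import Fraction

def matvec(A, x):
    """Return the matrix-vector product A x for a list-of-rows matrix A."""
    return [sum(Fraction(a) * Fraction(xi) for a, xi in zip(row, x)) for row in A]

# Hypothetical example system: A has rank 2, so N(A) is a line in R^3.
A = [[1, 1, 0],
     [0, 1, 1]]
b = [2, 3]

x_p = [2, 0, 3]    # one particular solution: A x_p = b
n0 = [1, -1, 1]    # spans N(A): A n0 = 0

def solution(t):
    """Return x_p + t * n0, a point of the affine set x_p + N(A)."""
    return [Fraction(p) + Fraction(t) * Fraction(n) for p, n in zip(x_p, n0)]

# Every choice of t should give a solution of A x = b.
checks = [matvec(A, solution(t)) == b for t in (-2, 0, Fraction(1, 3), 5)]
print(checks)
```

Each entry of `checks` corresponds to one value of `t`; moving along the nullspace direction never changes $Ax$, which is the $\supseteq$ direction of the proof.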

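A Link Between the Nullspaces of $A$ and $A^\top A$

Lemma: Nullspaces of $A$ and $A^\top A$

For any matrix $A \in \mathbb{R}^{m \times n}$, the following holds:

- $N(A) = N(A^\top A)$ (The nullspaces of $A$ and $A^\top A$ are identical.)

- $C(A^\top) = C(A^\top A)$ (The column space of $A^\top$ is the same as the column space of $A^\top A$.)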

Proof:

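- $N(A) = N(A^\top A)$:

  - $\subseteq$: If $x$ is in the nullspace of $A$ ($Ax = 0$), then $A^\top A x = A^\top 0 = 0$. This means $x$ is also in the nullspace of $A^\top A$.

  - $\supseteq$: If $x$ is in the nullspace of $A^\top A$ ($A^\top A x = 0$), pre-multiplying by $x^\top$ gives $x^\top A^\top A x = (Ax)^\top (Ax) = \|Ax\|^2 = 0$. The squared norm of a vector is zero if and only if the vector itself is zero. Thus, $Ax = 0$, meaning $x$ is in the nullspace of $A$.

- $C(A^\top) = C(A^\top A)$: We know from previous results that $C(A^\top) = N(A)^\perp$ (the column space of $A^\top$ is the orthogonal complement of the nullspace of $A$). Applying the same result to the symmetric matrix $A^\top A$ gives $C(A^\top A) = C((A^\top A)^\top) = N(A^\top A)^\perp$. Since we’ve just proven that $N(A) = N(A^\top A)$, their orthogonal complements must also be equal. Therefore, $C(A^\top) = C(A^\top A)$.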
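A numeric sanity check of the lemma (not a proof) can be sketched as follows. The rank-deficient matrix `A`, the null vector `x`, and the test vector `y` are assumptions made for this example, and the helper functions are written here with exact fractions from the standard library.

```python
from fractions import Fraction

def transpose(M):
    """Return the transpose of a list-of-rows matrix."""
    return [list(col) for col in zip(*M)]

def matmul(M, N):
    """Return the matrix product M N using exact fractions."""
    cols = transpose(N)
    return [[sum(Fraction(a) * Fraction(b) for a, b in zip(row, col)) for col in cols]
            for row in M]

def rank(M):
    """Rank via Gaussian elimination over exact fractions."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [vi - factor * vr for vi, vr in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

# Hypothetical example: the second row is twice the first, so A has a nontrivial nullspace.
A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]
AtA = matmul(transpose(A), A)

x = [[1], [1], [-1]]   # column vector with A x = 0
zero = [[0], [0], [0]]
in_both = matmul(A, x) == zero and matmul(AtA, x) == zero

# Equal rank with the same number of columns means equal nullspace dimension.
same_rank = rank(A) == rank(AtA)

# The identity used in the proof: y^T (A^T A) y = ||A y||^2.
y = [[1], [0], [0]]
quad_form = matmul(transpose(y), matmul(AtA, y))[0][0]
norm_sq = sum(v[0] ** 2 for v in matmul(A, y))

print(in_both, same_rank, quad_form == norm_sq)
```

Since $N(A) \subseteq N(A^\top A)$ always holds, equal dimensions are enough to conclude that the two nullspaces coincide for this matrix.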

Projections

Note for readers: This is a much more intuitive and simpler explanation of this part…

Definition: Projection of a Vector onto a Subspace

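The projection of a vector $b \in \mathbb{R}^m$ onto a subspace $S \subseteq \mathbb{R}^m$ is the point in $S$ closest to $b$. Formally:

$$\operatorname{proj}_S(b) = \underset{p \in S}{\arg\min}\; \|b - p\|$$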

This is well-defined if the minimum exists and is unique.

The One-Dimensional Case

Lemma: Projection onto a Line

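Let $a \in \mathbb{R}^m \setminus \{0\}$. The projection of $b \in \mathbb{R}^m$ onto the line $S = \{\lambda a : \lambda \in \mathbb{R}\}$ is given by:

$$\operatorname{proj}_S(b) = \frac{a a^\top}{a^\top a}\, b$$

Here’s how we derive this formula, starting with the geometric intuition that the error vector ($b$ minus its projection) is orthogonal to $a$: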

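- Orthogonality Condition: The projection of $b$ onto the line spanned by $a$ is some scalar multiple of $a$. Let’s call this scalar $\lambda$. So, the projection is $p = \lambda a$. The error vector is $e = b - \lambda a$. This error vector must be orthogonal to $a$. Mathematically, this orthogonality is expressed as:

  $$a^\top (b - \lambda a) = 0$$

- Solving for $\lambda$: Expanding the dot product gives:

  $$a^\top b - \lambda\, a^\top a = 0$$

  Solving for $\lambda$ (possible because $a \neq 0$, so $a^\top a > 0$):

  $$\lambda = \frac{a^\top b}{a^\top a}$$

- The Projection Formula: Substituting this value of $\lambda$ back into the expression for the projection ($p = \lambda a$) gives:

  $$p = \frac{a^\top b}{a^\top a}\, a = \frac{a a^\top}{a^\top a}\, b$$

The second form arises from recognizing that $a^\top b$ is a scalar, so $(a^\top b)\, a = a\, (a^\top b) = (a a^\top)\, b$. The matrix $\frac{a a^\top}{a^\top a}$ is often called the projection matrix (for projection onto the line spanned by $a$).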
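A short numeric instance may help fix the formula; the vectors are chosen here for illustration. With $a = (1, 2, 2)^\top$ and $b = (3, 0, 3)^\top$:

$$a^\top b = 3 + 0 + 6 = 9, \qquad a^\top a = 1 + 4 + 4 = 9, \qquad \lambda = \frac{a^\top b}{a^\top a} = 1$$

$$p = \lambda a = \begin{pmatrix} 1 \\ 2 \\ 2 \end{pmatrix}, \qquad e = b - p = \begin{pmatrix} 2 \\ -2 \\ 1 \end{pmatrix}, \qquad a^\top e = 2 - 4 + 2 = 0$$

The error vector is orthogonal to $a$, as the derivation requires.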

Proof

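Let $p \in S$, so $p = \lambda a$ for some scalar $\lambda$. Our goal is to find the value of $\lambda$ that minimizes the distance between $b$ and $\lambda a$, which is equivalent to minimizing the squared distance $\|b - \lambda a\|^2$.

- Expanding the Squared Distance: We expand the squared distance using the dot product:

  $$\|b - \lambda a\|^2 = (b - \lambda a)^\top (b - \lambda a)$$

  Distributing the terms gives:

  $$b^\top b - \lambda\, b^\top a - \lambda\, a^\top b + \lambda^2\, a^\top a$$

  Since $b^\top a$ and $a^\top b$ are both scalars and equal to each other (dot product is commutative), we can simplify this to:

  $$b^\top b - 2\lambda\, a^\top b + \lambda^2\, a^\top a$$

  Let’s call this expression $f(\lambda)$.

- Minimizing $f$: To find the minimum value of $f$, we take its derivative with respect to $\lambda$ and set it equal to zero:

  $$f'(\lambda) = -2\, a^\top b + 2\lambda\, a^\top a = 0$$

- Solving for $\lambda$: Now we solve for $\lambda$:

  $$\lambda = \frac{a^\top b}{a^\top a}$$

  This value of $\lambda$ minimizes the squared distance: $f$ is a quadratic in $\lambda$ with positive leading coefficient $a^\top a > 0$, so this critical point is its unique minimum. Let’s call it $\lambda^*$.

- The Projection: Substitute $\lambda^*$ back into the expression for the projection $p = \lambda a$:

  $$p = \lambda^* a = \frac{a^\top b}{a^\top a}\, a$$

  This can also be written as:

  $$p = \frac{a a^\top}{a^\top a}\, b$$

  because $a^\top b$ is a scalar, so $(a^\top b)\, a = a\, (a^\top b) = (a a^\top)\, b$. Here $a a^\top$ is an $m \times m$ matrix, and $a^\top a$ is a scalar.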

Intuition and Check

- Orthogonality of the Error Vector: The error vector $e = b - p$ is orthogonal to $a$. You can verify this by computing $a^\top e = a^\top b - \frac{a^\top b}{a^\top a}\, a^\top a = 0$. This orthogonality is a fundamental property of projections.

- Projection of a Collinear Vector: If $b$ is already a multiple of $a$ (i.e., $b = \mu a$ lies on the line spanned by $a$), then the projection of $b$ onto $S$ should be $b$ itself. You can verify this using the formula: $\frac{a^\top (\mu a)}{a^\top a}\, a = \mu a = b$. This makes intuitive sense, as the closest point on the line to a point already on the line is the point itself.
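Both checks can also be run numerically. The following is a minimal sketch, assuming vectors are plain Python lists; the function name `project_onto_line` and the sample vectors are made up for this illustration.

```python
from fractions import Fraction

def dot(u, v):
    """Exact dot product of two equal-length vectors."""
    return sum(Fraction(x) * Fraction(y) for x, y in zip(u, v))

def project_onto_line(a, b):
    """Project b onto the line spanned by a nonzero vector a: (a^T b / a^T a) a."""
    aa = dot(a, a)
    if aa == 0:
        raise ValueError("a must be nonzero")
    lam = dot(a, b) / aa
    return [lam * Fraction(x) for x in a]

# Sample vectors chosen for this example.
a = [1, 1, 1]
b = [1, 2, 6]

p = project_onto_line(a, b)
e = [Fraction(bi) - pi for bi, pi in zip(b, p)]

# Check 1: the error vector is orthogonal to a.
error_is_orthogonal = dot(a, e) == 0

# Check 2: a vector already on the line is its own projection.
collinear = [4, 4, 4]
collinear_is_fixed = project_onto_line(a, collinear) == collinear

# Extra check: no other sampled point t * a on the line is closer to b than p.
def sq_dist_to_b(q):
    diff = [Fraction(bi) - qi for bi, qi in zip(b, q)]
    return dot(diff, diff)

p_is_closest = all(
    sq_dist_to_b([Fraction(t) * x for x in a]) >= sq_dist_to_b(p)
    for t in (-1, 0, 1, 2, Fraction(5, 2), 4)
)

print(error_is_orthogonal, collinear_is_fixed, p_is_closest)
```

Exact fractions are used so that the orthogonality test can be an equality with zero rather than a floating-point tolerance.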

The General Case: Projection onto a Subspace

Lemma: Projection onto a Subspace

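Let $S$ be an $n$-dimensional subspace of $\mathbb{R}^m$, and let $a_1, \dots, a_n$ be a basis for $S$. Form the matrix $A = [\, a_1 \; \cdots \; a_n \,] \in \mathbb{R}^{m \times n}$, so $S = C(A)$ (meaning $S$ is the column space of $A$). The projection of a vector $b \in \mathbb{R}^m$ onto $S$ is given by $\operatorname{proj}_S(b) = A\hat{x}$, where $\hat{x}$ satisfies the normal equations:

$$A^\top A\, \hat{x} = A^\top b$$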

Proof

- Decomposition of $b$: By the decomposition $\mathbb{R}^m = S + S^\perp$, we can write $b = p + e$, where $p \in S$ and $e = b - p$ is the error vector, which is orthogonal to $S$ (so $e \in S^\perp$). We show that this $p$ is the projection of $b$ onto $S$.

- Minimizing the Distance: The projection is the point in $S$ that is closest to $b$. This means we want to minimize the distance between $b$ and any arbitrary point in $S$. We do this by minimizing the squared distance $\|b - q\|^2$ over $q \in S$.

- Considering another point in $S$: Let $q$ be any other point in $S$. Since both $p$ and $q$ are in $S$, their difference $p - q$ is also in $S$. Because $e$ is orthogonal to $S$, $e$ is orthogonal to $p - q$. This means their dot product is zero:

  $$e^\top (p - q) = 0$$

- Expanding the Squared Distance: Now, let’s expand the squared distance between $b$ and $q$:

  $$\|b - q\|^2 = \|(b - p) + (p - q)\|^2 = \|e + (p - q)\|^2$$

  Using the Pythagorean theorem (which applies because $e$ and $p - q$ are orthogonal), we get:

  $$\|b - q\|^2 = \|e\|^2 + \|p - q\|^2$$

  Since $\|p - q\|^2$ is always non-negative, this entire expression is greater than or equal to $\|e\|^2$:

  $$\|b - q\|^2 \geq \|e\|^2 = \|b - p\|^2$$

- The Minimum Distance: The inequality above shows that the squared distance between $b$ and any point $q$ in $S$ is always greater than or equal to $\|e\|^2$, which is the squared distance between $b$ and $p$. The minimum distance is achieved when $q = p$, and only then, because equality requires $\|p - q\|^2 = 0$.

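- Expressing the Projection: Since $p$ is in the column space of $A$ ($p \in C(A)$), we can express $p$ as a linear combination of the columns of $A$: $p = A\hat{x}$ for some vector $\hat{x} \in \mathbb{R}^n$.

- Orthogonality and the Normal Equations: The error vector $e = b - A\hat{x}$ is orthogonal to $S$. This means $e$ is orthogonal to every vector in $S$, including the basis vectors $a_1, \dots, a_n$ that form the columns of $A$. So, for each column $a_i$:

  $$a_i^\top (b - A\hat{x}) = 0, \qquad i = 1, \dots, n$$

  This can be written compactly as:

  $$A^\top (b - A\hat{x}) = 0$$

  Distributing $A^\top$ gives us the normal equations:

  $$A^\top A\, \hat{x} = A^\top b$$

Solving this system of equations for $\hat{x}$ allows us to compute the projection $p = A\hat{x}$. Because the columns of $A$ are linearly independent, $N(A^\top A) = N(A) = \{0\}$, so $A^\top A$ is invertible and $\hat{x}$ is unique.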
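The recipe above can be sketched in a few lines of code. This is an illustrative sketch only: the matrix `A`, the vector `b`, and the helper `solve` (a small Gauss-Jordan routine that assumes an invertible square system) are assumptions for this example, written with exact fractions from the standard library rather than a numerical linear algebra package.

```python
from fractions import Fraction

def transpose(M):
    """Return the transpose of a list-of-rows matrix."""
    return [list(col) for col in zip(*M)]

def matvec(M, x):
    """Return the matrix-vector product M x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in M]

def solve(M, rhs):
    """Solve M x = rhs by Gauss-Jordan elimination; M is assumed square and invertible."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for c in range(n):
        pivot = next(i for i in range(c, n) if aug[i][c] != 0)
        aug[c], aug[pivot] = aug[pivot], aug[c]
        lead = aug[c][c]
        aug[c] = [v / lead for v in aug[c]]
        for i in range(n):
            if i != c:
                factor = aug[i][c]
                aug[i] = [vi - factor * vc for vi, vc in zip(aug[i], aug[c])]
    return [row[n] for row in aug]

# Example data: the columns of A are a basis of a plane S in R^3.
A = [[1, 0],
     [1, 1],
     [1, 2]]
b = [6, 0, 0]

At = transpose(A)
AtA = [matvec(At, col) for col in At]   # entries a_i^T a_j; the matrix is symmetric
Atb = matvec(At, b)

x_hat = solve(AtA, Atb)                 # normal equations: A^T A x_hat = A^T b
p = matvec(A, x_hat)                    # projection of b onto S = C(A)
e = [bi - pi for bi, pi in zip(b, p)]   # error vector

# The error vector should be orthogonal to every column of A.
print(matvec(At, e) == [0, 0])
```

For larger or floating-point problems one would normally use a numerical library instead of hand-written elimination; the point here is only to trace the steps $A^\top A \hat{x} = A^\top b$, $p = A\hat{x}$, $e = b - p$.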

Continue here: 17 Projections, Least Squares, Linear Regression