Lecture from 25.09.2024 | Video: Videos ETHZ

Related to: Linear Combinations

Vectors $v_1, \dots, v_n \in \mathbb{R}^m$ are said to be linearly dependent if at least one of them is a linear combination of the others. This means there exists some index $k$ and scalars $\lambda_j$ such that:

$$v_k = \sum_{j \neq k} \lambda_j v_j$$

If no such index and scalars exist, the vectors are linearly independent.

Examples

| Case | In/dependent | Explanation |
|---|---|---|
| Two vectors $v$ and $w$, neither a scalar multiple of the other | Independent | These two vectors are not scalar multiples of each other, so they are linearly independent. |
| Two vectors $v$ and $w = c\,v$ for some scalar $c$ | Dependent | $w$ is a scalar multiple of $v$ ($w = c\,v$), so they are linearly dependent. |
| $n$ vectors in $\mathbb{R}^{n-1}$. Example: 3 vectors in $\mathbb{R}^2$ | Dependent | Any sequence of $n$ vectors in $\mathbb{R}^{n-1}$ is linearly dependent, as either: 1) the first $n-1$ vectors are independent and span $\mathbb{R}^{n-1}$, so the $n$-th vector is a combination of them, or 2) the first $n-1$ vectors are not independent, and therefore the full sequence is not independent either. |
| A sequence of a single non-zero vector | Independent | A single non-zero vector is always linearly independent: with no other vectors available, the only linear combination of "the others" is the empty sum, which is the zero vector. |
| A sequence of a single zero vector | Dependent | A single zero vector is always linearly dependent: it equals the empty linear combination of the other (zero) vectors, and equivalently $1 \cdot 0 = 0$ is a non-trivial combination yielding the zero vector. |
| Sequence of vectors including the zero vector | Dependent | Any sequence containing the zero vector is linearly dependent because the zero vector can be expressed as a linear combination of the other vectors (with all coefficients $\lambda_j = 0$). |
| Sequence of vectors where one vector appears twice or more | Dependent | If a vector appears twice, the sequence is linearly dependent because one copy is a scalar multiple of the other (with scalar 1). |
| The empty sequence | Independent | The empty sequence is trivially linearly independent since there are no vectors to form a linear dependence relation. |
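The cases in the table can be checked mechanically. The following is a minimal sketch, not part of the lecture: it relies on the standard fact (not proven in these notes) that a sequence of vectors is linearly dependent exactly when the rank of the matrix formed from them is smaller than the number of vectors. The function names `rank` and `is_linearly_dependent` and the concrete vectors in the usage note are illustrative assumptions.

```python
from fractions import Fraction


def rank(vectors):
    """Rank of the matrix whose rows are the given vectors (exact arithmetic)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    n_cols = len(rows[0]) if rows else 0
    pivots = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Look for a row at or below the pivot position with a non-zero entry.
        pivot = next(
            (r for r in range(pivots, len(rows)) if rows[r][col] != 0), None
        )
        if pivot is None:
            continue
        rows[pivots], rows[pivot] = rows[pivot], rows[pivots]
        # Eliminate the entries below the pivot (Gaussian elimination).
        for r in range(pivots + 1, len(rows)):
            factor = rows[r][col] / rows[pivots][col]
            rows[r] = [a - factor * b for a, b in zip(rows[r], rows[pivots])]
        pivots += 1
    return pivots


def is_linearly_dependent(vectors):
    """Assumed criterion: dependent exactly when rank < number of vectors."""
    return rank(vectors) < len(vectors)
```

With hypothetical vectors, `is_linearly_dependent([(1, 2), (2, 4)])` and `is_linearly_dependent([(1, 0), (0, 1), (2, 3)])` report dependence, while `is_linearly_dependent([(1, 0), (0, 1)])` and `is_linearly_dependent([])` report independence, matching the corresponding rows of the table.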

Alternate Definitions of Linear Dependence

Let $v_1, \dots, v_n$ be vectors in $\mathbb{R}^m$. The following statements are equivalent (either all true or all false):

- (i) At least one vector is a linear combination of the others (as in the main definition).

- (ii) There exist scalars $\lambda_1, \dots, \lambda_n$, not all zero, such that $\sum_{j=1}^{n} \lambda_j v_j = 0$. In other words, the zero vector is a non-trivial linear combination of the vectors.

- (iii) At least one vector is a linear combination of the preceding ones in the sequence $v_1, \dots, v_n$.

How do we know these are equivalent? Here are the proofs. The idea is that we prove the cycle of implications (i) $\Rightarrow$ (ii) $\Rightarrow$ (iii) $\Rightarrow$ (i).

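Proof: (i) $\Rightarrow$ (ii)

Assume (i) is true: one of the vectors, say $v_k$, is a linear combination of the others. That is, there exist scalars $\lambda_j$ (for $j \neq k$) such that:

$$v_k = \sum_{j \neq k} \lambda_j v_j$$

Now, subtract $v_k$ from both sides:

$$0 = \sum_{j \neq k} \lambda_j v_j - v_k$$

This equation can be rewritten as:

$$\sum_{j=1}^{n} \mu_j v_j = 0$$

Where $\mu_k = -1$ and $\mu_j = \lambda_j$ for all $j \neq k$. Since $\mu_k = -1 \neq 0$, we have a non-trivial solution (not all $\mu_j$ are zero).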

Thus, (ii) holds: there exist scalars, not all zero, such that their linear combination of the vectors equals the zero vector.

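Proof: (ii) $\Rightarrow$ (iii)

Assume (ii) is true: there exist scalars $\lambda_1, \dots, \lambda_n$, not all zero, such that:

$$\sum_{j=1}^{n} \lambda_j v_j = 0$$

Let $k$ be the largest index such that $\lambda_k \neq 0$ (this must exist, as not all $\lambda_j$ are zero by assumption). Since $\lambda_j = 0$ for all $j > k$, those terms vanish, and we can separate the term involving $v_k$:

$$\sum_{j=1}^{k-1} \lambda_j v_j + \lambda_k v_k = 0$$

Now, isolate $\lambda_k v_k$ by moving the sum to the other side:

$$\lambda_k v_k = -\sum_{j=1}^{k-1} \lambda_j v_j$$

Next, divide through by $\lambda_k$ (which is non-zero by assumption) to solve for $v_k$:

$$v_k = \sum_{j=1}^{k-1} \left(-\frac{\lambda_j}{\lambda_k}\right) v_j$$

Thus, $v_k$ can be written as a linear combination of the preceding vectors $v_1, \dots, v_{k-1}$. This matches statement (iii): at least one vector (in this case, $v_k$) is a linear combination of the preceding vectors.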

Therefore, (ii) implies (iii).
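The argument is constructive, so it can be turned into a small procedure. The sketch below is an illustration rather than part of the lecture: given the coefficients of a non-trivial relation, it picks the largest index with a non-zero coefficient and returns the weights $-\lambda_j / \lambda_k$ for the preceding vectors. The names `express_via_preceding` and `combine`, the 0-based indexing, and the example vectors are assumptions made for this example.

```python
from fractions import Fraction


def express_via_preceding(coeffs):
    """Given coefficients of a non-trivial relation sum(coeffs[j] * v_j) = 0,
    return (k, weights) with v_k = sum(weights[j] * v_j for j < k).
    Indices are 0-based."""
    nonzero = [j for j, c in enumerate(coeffs) if c != 0]
    if not nonzero:
        raise ValueError("the relation is trivial: all coefficients are zero")
    k = nonzero[-1]  # largest index with a non-zero coefficient
    # Divide by coeffs[k] and move the sum to the other side.
    weights = [-Fraction(c) / Fraction(coeffs[k]) for c in coeffs[:k]]
    return k, weights


def combine(weights, vectors, dim):
    """Linear combination of the vectors; the zero vector for an empty sum."""
    result = [Fraction(0)] * dim
    for w, v in zip(weights, vectors):
        result = [r + w * x for r, x in zip(result, v)]
    return result


# Hypothetical example: 1*v0 + 1*v1 - 1*v2 + 0*v3 = 0.
vectors = [(1, 0), (0, 1), (1, 1), (5, 7)]
k, weights = express_via_preceding([1, 1, -1, 0])
```

Here the last vector has coefficient zero, so the procedure selects the third vector and expresses it through the first two, which is the role of the "largest index" in the proof.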

Proof: (iii) $\Rightarrow$ (i)

This implication is straightforward.

Assume (iii) is true: at least one vector, say $v_k$, is a linear combination of the preceding vectors $v_1, \dots, v_{k-1}$ in the sequence:

$$v_k = \sum_{j=1}^{k-1} \lambda_j v_j$$

Setting $\lambda_j = 0$ for all $j > k$ turns this into a linear combination of all the other vectors in the sequence. It directly follows that there exists a vector, $v_k$, which is a linear combination of the others. This is exactly what statement (i) asserts.

Thus, (iii) implies (i).

Definitions of Linear Independence

Let $v_1, \dots, v_n$ be vectors in $\mathbb{R}^m$. The following statements are equivalent characterizations of linear independence (either all true or all false):

- No vector in the sequence can be written as a linear combination of the others.

- The only scalars $\lambda_1, \dots, \lambda_n$ satisfying $\sum_{j=1}^{n} \lambda_j v_j = 0$ are $\lambda_1 = \dots = \lambda_n = 0$ (i.e., only the trivial solution exists).

- No vector is a linear combination of the preceding ones in the sequence $v_1, \dots, v_n$.

Uniqueness of Solutions with Linearly Independent Sets of Vectors

When a set of vectors $v_1, \dots, v_n$ in $\mathbb{R}^m$ is linearly independent, any vector $v \in \mathbb{R}^m$ can be written as a linear combination of the vectors in that set in at most one way: the coefficients, if they exist, are unique.

Proof of Uniqueness

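Suppose $v$ can be written as a linear combination of the vectors $v_1, \dots, v_n$ in two ways, with two sets of coefficients $\lambda_1, \dots, \lambda_n$ and $\mu_1, \dots, \mu_n$, such that:

$$v = \sum_{j=1}^{n} \lambda_j v_j$$

and

$$v = \sum_{j=1}^{n} \mu_j v_j$$

Now subtract the second equation from the first:

$$0 = \sum_{j=1}^{n} \lambda_j v_j - \sum_{j=1}^{n} \mu_j v_j$$

This simplifies to:

$$\sum_{j=1}^{n} (\lambda_j - \mu_j) v_j = 0$$

Since the vectors are linearly independent, the only solution to this equation is for each coefficient to be zero. That is:

$$\lambda_j - \mu_j = 0 \quad \text{for all } j$$

Therefore, $\lambda_j = \mu_j$ for all $j$.

Conclusion

Since $\lambda_j = \mu_j$ for all $j$, the scalars (coefficients) used to express $v$ as a linear combination of $v_1, \dots, v_n$ must be the same. This proves that the solution, if one exists, is unique.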
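Read in the other direction, the proof says that two different coefficient lists for the same vector produce a non-trivial relation, so the vectors must be dependent. The sketch below illustrates this with a hypothetical dependent sequence. The helper names `combine` and `difference_relation` and all concrete numbers are assumptions for this example, not taken from the lecture.

```python
def combine(coeffs, vectors):
    """Return sum(coeffs[j] * vectors[j]) as a tuple; assumes at least one vector."""
    dim = len(vectors[0])
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(dim))


def difference_relation(lam, mu, vectors):
    """Subtract two representations: coefficients lam[j] - mu[j] and their combination."""
    diff = [a - b for a, b in zip(lam, mu)]
    return diff, combine(diff, vectors)


# Hypothetical dependent sequence: the third vector is the sum of the first two.
vectors = [(1, 0), (0, 1), (1, 1)]
lam = [1, 1, 0]  # v = 1*v1 + 1*v2 + 0*v3
mu = [0, 0, 1]   # v = 0*v1 + 0*v2 + 1*v3

diff, zero = difference_relation(lam, mu, vectors)
```

Both coefficient lists describe the same vector, and their difference is a relation with non-zero coefficients that combines to the zero vector. For a linearly independent sequence no such pair of distinct coefficient lists can exist.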

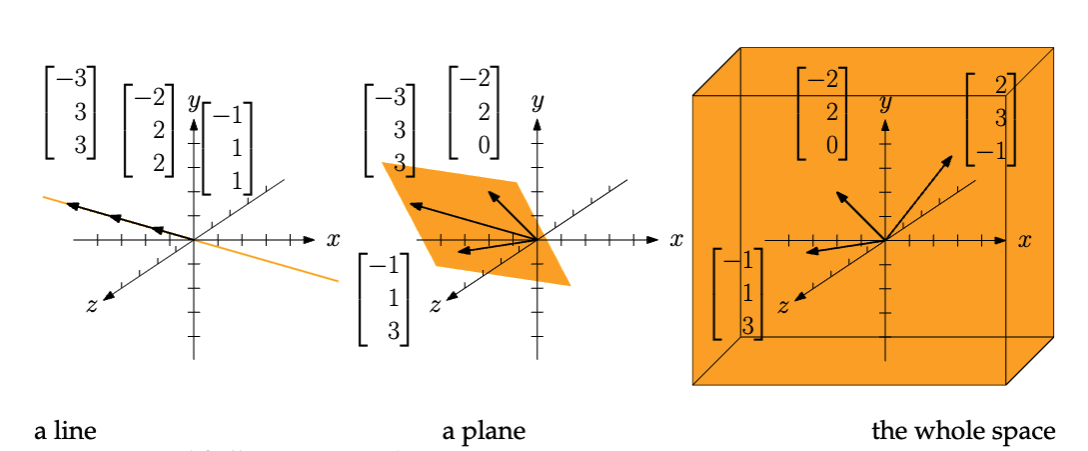

Vector Span

Definition

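The span of a set of vectors $v_1, \dots, v_n$ in a vector space is the set of all possible linear combinations of those vectors. Mathematically, the span is defined as:

$$\operatorname{Span}(v_1, \dots, v_n) = \left\{ \sum_{j=1}^{n} \lambda_j v_j : \lambda_1, \dots, \lambda_n \in \mathbb{R} \right\}$$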

In other words, the span of a set of vectors is the collection of all vectors that can be formed by taking scalar multiples of each vector and adding them together.
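As a small worked illustration (the two vectors are chosen here for the example and are not from the lecture), consider the span of the first two standard unit vectors in $\mathbb{R}^3$:

$$\operatorname{Span}\left( \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix}, \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix} \right) = \left\{ a \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} + b \begin{pmatrix} 0 \\ 1 \\ 0 \end{pmatrix} : a, b \in \mathbb{R} \right\} = \left\{ \begin{pmatrix} a \\ b \\ 0 \end{pmatrix} : a, b \in \mathbb{R} \right\}$$

So $(2, 3, 0)^\top$ lies in this span (take $a = 2$, $b = 3$), while $(0, 0, 1)^\top$ does not, because every linear combination of the two vectors has third entry $0$.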

Proof: Adding a Vector from a Span Does Not Change the Span

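Let $v_1, \dots, v_n$ be vectors, and let $v$ be a linear combination of $v_1, \dots, v_n$. We aim to show that:

$$\operatorname{Span}(v_1, \dots, v_n) = \operatorname{Span}(v_1, \dots, v_n, v)$$

Proof Strategy:

We will prove that the two spans are equal by showing:

- $\operatorname{Span}(v_1, \dots, v_n) \subseteq \operatorname{Span}(v_1, \dots, v_n, v)$

- $\operatorname{Span}(v_1, \dots, v_n, v) \subseteq \operatorname{Span}(v_1, \dots, v_n)$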

Once both inclusions are established, we can conclude that the two spans are identical.

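Part 1: $\operatorname{Span}(v_1, \dots, v_n) \subseteq \operatorname{Span}(v_1, \dots, v_n, v)$

Let $w$ be an arbitrary element of $\operatorname{Span}(v_1, \dots, v_n)$. By definition, $w$ can be written as a linear combination of $v_1, \dots, v_n$:

$$w = \sum_{j=1}^{n} \lambda_j v_j$$

Since $0 \cdot v = 0$, we can set the coefficient of $v$ to be 0:

$$w = \sum_{j=1}^{n} \lambda_j v_j + 0 \cdot v$$

Thus, $w$ is also an element of $\operatorname{Span}(v_1, \dots, v_n, v)$. Therefore, every element of $\operatorname{Span}(v_1, \dots, v_n)$ is in $\operatorname{Span}(v_1, \dots, v_n, v)$, proving:

$$\operatorname{Span}(v_1, \dots, v_n) \subseteq \operatorname{Span}(v_1, \dots, v_n, v)$$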

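Part 2: $\operatorname{Span}(v_1, \dots, v_n, v) \subseteq \operatorname{Span}(v_1, \dots, v_n)$

Let $w$ be an arbitrary element of $\operatorname{Span}(v_1, \dots, v_n, v)$. By definition, $w$ can be written as a linear combination of $v_1, \dots, v_n, v$:

$$w = \sum_{j=1}^{n} \mu_j v_j + \mu v$$

Since $v \in \operatorname{Span}(v_1, \dots, v_n)$, we know that $v$ can be expressed as:

$$v = \sum_{j=1}^{n} \lambda_j v_j$$

Substitute this expression for $v$ into the equation for $w$:

$$w = \sum_{j=1}^{n} \mu_j v_j + \mu \sum_{j=1}^{n} \lambda_j v_j$$

Now, simplify the expression:

$$w = \sum_{j=1}^{n} (\mu_j + \mu \lambda_j) v_j$$

This shows that $w$ is a linear combination of $v_1, \dots, v_n$. Therefore, $w \in \operatorname{Span}(v_1, \dots, v_n)$, proving:

$$\operatorname{Span}(v_1, \dots, v_n, v) \subseteq \operatorname{Span}(v_1, \dots, v_n)$$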

Conclusion:

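Since both:

$$\operatorname{Span}(v_1, \dots, v_n) \subseteq \operatorname{Span}(v_1, \dots, v_n, v) \quad \text{and} \quad \operatorname{Span}(v_1, \dots, v_n, v) \subseteq \operatorname{Span}(v_1, \dots, v_n)$$

we conclude that:

$$\operatorname{Span}(v_1, \dots, v_n) = \operatorname{Span}(v_1, \dots, v_n, v)$$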
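The substitution step in the second inclusion can be checked on numbers. The following is a minimal sketch under assumed data: the helper names `combine` and `collapse` and all vectors and coefficients are made up for illustration. It takes an element written with the extra vector and rewrites it using only the original vectors by merging coefficients.

```python
def combine(coeffs, vectors):
    """Return sum(coeffs[j] * vectors[j]) as a tuple; assumes at least one vector."""
    dim = len(vectors[0])
    return tuple(sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(dim))


def collapse(mu, mu_v, lam):
    """Coefficients of w over the original vectors, given
    w = sum(mu[j] * v_j) + mu_v * v  and  v = sum(lam[j] * v_j)."""
    return [m + mu_v * a for m, a in zip(mu, lam)]


# Hypothetical data: two vectors in R^3 and a vector v from their span.
vs = [(1, 0, 2), (0, 1, 1)]
lam = [2, 3]
v = combine(lam, vs)

# An element of the larger span, written with the extra vector v.
mu, mu_v = [4, -1], 5
w = combine(mu + [mu_v], vs + [v])

# The same element, written with the original vectors only.
new_coeffs = collapse(mu, mu_v, lam)
```

Evaluating `combine(new_coeffs, vs)` gives the same vector as `w`, which is the numerical counterpart of the simplification step in the proof.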

Continue here: 04 Matrices and Linear Combinations