Lecture from 01.11.2024 | Video: Videos ETHZ

Fundamental Subspaces of a Matrix

In linear algebra, we often work with matrices and the vector spaces associated with them. A key concept is that of a subspace, which is a subset of a vector space closed under vector addition and scalar multiplication. We can fully characterize a vector space by identifying its basis.

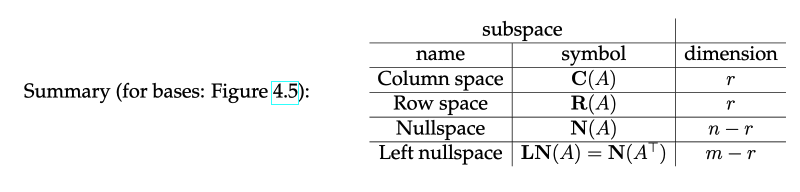

The four fundamental subspaces associated with a matrix :

-

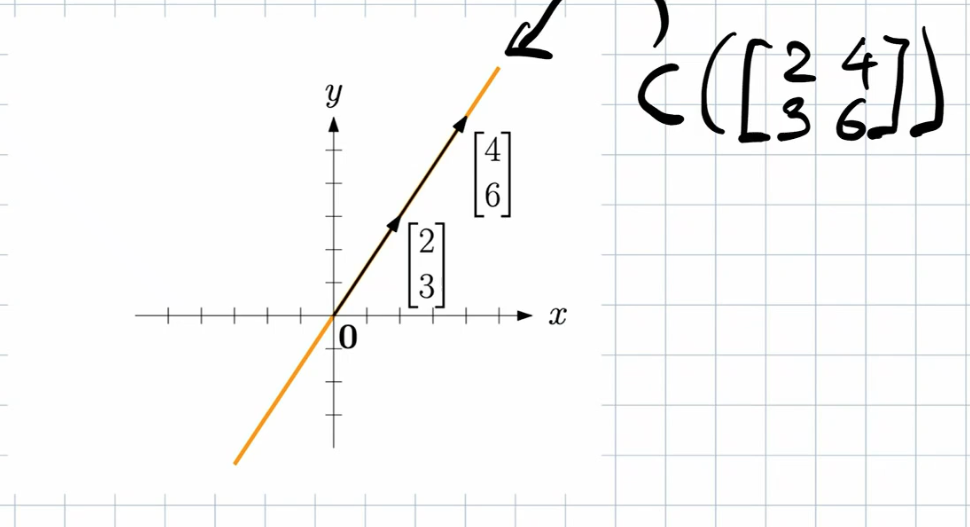

Column Space (): The column space of , denoted , is the subspace of spanned by the columns of . In other words, consists of all linear combinations of the columns of .

-

Row Space (): The row space of , denoted , is the subspace of spanned by the rows of . Equivalently, it is the column space of (the transpose of ).

-

Null Space (): The null space of , denoted , is the subspace of consisting of all vectors that satisfy the homogeneous equation . The null space represents the set of all inputs to the linear transformation represented by that produce the zero vector as output.

-

Left Null Space (): The left null space of , denoted , is the null space of . It is a subspace of . The left null space can be interpreted as the set of all vectors orthogonal to the column space of . This means that for any and any , their dot product is zero: . (Note we will not look at this)

We already have a tool with which we can calculate the basis for all of these subspaces: Gauss-Jordan-Elimination. Recall Gauss-Jordan-Elimination (see Gauss-Jordan Elimination for more):

Column Space

The column space of , denoted , is formally defined as:

It represents the set of all possible linear combinations of the columns of , and thus all possible outputs of the linear transformation represented by .

Finding a Basis for using Gauss-Jordan Elimination:

- Reduce to Reduced Row Echelon Form (RREF): Perform Gauss-Jordan elimination on matrix to obtain its RREF, denoted .

- Identify Pivot Columns: The pivot columns in correspond to the linearly independent columns of . The indices of these pivot columns are crucial.

- Extract Original Columns: The corresponding columns in the original matrix (not the RREF matrix ) form a basis for .

Theorem: Let be an matrix, and let be the RREF of with pivot column indices . Then the columns form a basis for , and .

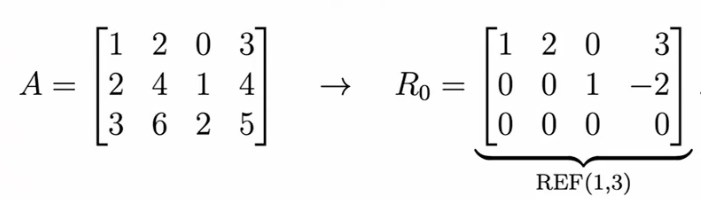

Example: Suppose After Gauss-Jordan elimination, we obtain the RREF:

The pivot columns are the first and third columns (, ). Therefore, the first and third columns of the original matrix form a basis for : The dimension of the column space is 2, which is also the rank of the matrix .

Proof: The independent columns of are a basis for and we get these independent columns by doing Gauss-Jordan.

Row Space

The row space of , denoted , is the subspace of spanned by the rows of . It is equivalent to the column space of the transpose of : .

Finding a Basis for using Gauss-Jordan Elimination:

- Reduce to RREF: Perform Gauss-Jordan elimination on matrix to obtain its RREF, denoted .

- Non-zero Rows form a Basis: The non-zero rows of form a basis for .

Theorem: Let be an matrix, and let be the RREF of . The non-zero rows of form a basis for , and .

Important Note: While the column space basis comes from the original matrix , the row space basis comes from the RREF matrix .

Example: Using the same matrix as in the column space example: and its RREF: The non-zero rows of form a basis for :

The dimension of the row space is 2, which is also the rank of . Note that although , the basis vectors for and are different and belong to different vector spaces ( and respectively).

Key Takeaway: Row operations preserve the row space but not the column space. The RREF simplifies the process of finding a basis for the row space.

Invariance of Row Space under Multiplication by an Invertible Matrix

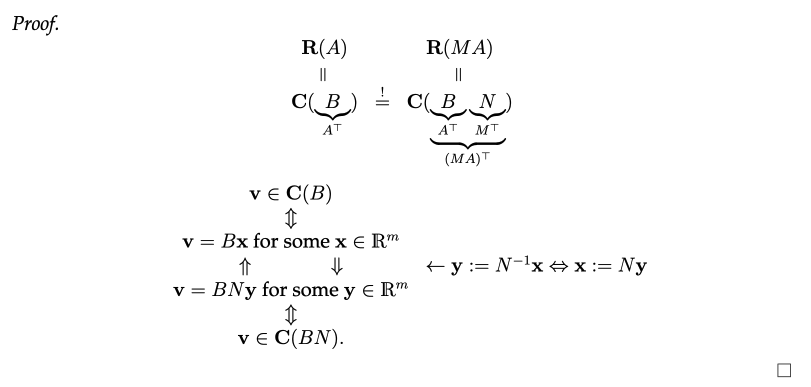

Lemma 4.27: Let be an matrix, and let be an invertible matrix. Then .

This lemma states that multiplying a matrix by an invertible matrix on the left does not change its row space.

Proof

The core idea is to understand the connection between row spaces and column spaces through transposes:

The core idea is to understand the connection between row spaces and column spaces through transposes:

- Row space is the column space of the transpose: .

We’ll use the following notation:

- (transpose of )

- (transpose of )

The proof revolves around showing that :

- Start with the row space of A: Consider any vector in the row space of ():

- Express it as a column space vector: Since , we can express as a linear combination of the columns of :

- Introduce M and relate to the row space of MA: To connect to the row space of , we introduce the invertible matrix . Since is invertible, its inverse exists. Let’s introduce a new vector related to by the equation .

This means (multiplying both sides by ). Now, we can rewrite in terms of : 4. Show v is in the column space of BN: Since is a linear combination of the columns of , it belongs to the column space of :

- Connecting to the row space of MA: Recall that because the transpose of a product is the product of the transposes in reverse order. Therefore, is the row space of :

- Equivalence: By following these steps, we’ve shown that:

- If is in , then it’s also in . This implies .

- Since is invertible, we can repeat the same logic using to show that .

- Combining both directions, we conclude that .

Finding a Basis for using REF

Theorem 4.28: Let be an matrix, and let be the REF of with pivot column indices . Then the first rows of form a basis for the row space , and .

Why This Works:

The theorem builds on the understanding of row operations, REF structure, and the connection between row spaces and column spaces through transposes. Let’s break down the key reasons why this theorem holds true:

- Row Operations Preserve Row Space: Gauss-Jordan elimination, which produces the REF, involves row operations. We already know from Lemma 4.27 (see Invariance of Row Space under Multiplication by an Invertible Matrix) that row operations (equivalent to multiplication by invertible matrices) don’t change the row space. Therefore, .

- Structure of RREF: The REF has the following key properties:

- Pivot Rows: The first rows of are the non-zero rows, where is the rank of .

- Zero Rows: The remaining rows are all zero.

- Linear Independence: The first rows of are linearly independent. This is because each row has a pivot position (leading 1) in a column that is unique to that row. No row can be expressed as a linear combination of the other rows.

- Spanning: The first rows of span the entire row space . This is because any linear combination of the original rows of can be rewritten as a linear combination of the rows of , thanks to the row operations.

By using row operations to put in REF, we achieve two things:

- We obtain a set of linearly independent vectors (the non-zero rows of ).

- These vectors span the entire row space .

Therefore, these vectors form a basis for the row space of .

Proof

Let be the result of Gauss-Jordan elimination on . We know that , where is a product of elementary matrices (invertible). By Lemma 4.27, .

The first rows of are the non-zero rows of . These rows are linearly independent because each row has a pivot position (leading 1) in a column that is unique to that row. This implies that no row can be expressed as a linear combination of the other rows.

We now show that the first rows of span . Let . Since , we can write as a linear combination of the rows of . Let be a vector containing the coefficients of this linear combination. Then, .

Since the first rows of are linearly independent, the remaining rows must be linear combinations of the first rows. Thus, we can rewrite as a linear combination of only the first rows of .

This shows that the first rows of span . Since they are also linearly independent, they form a basis for .

Since , we can conclude that the first rows of (which are the same as the first rows of ) form a basis for .

Example:

Consider the matrix and its REF from previous examples:

The first two rows of form a basis for :

We can conclude that and the rank of is 2.

Observation:

Row Rank Equals Column Rank

Theorem 4.29: Let be an matrix. Then .

Why This Works:

This theorem directly follows from the fact that the dimension of the column space of equals the dimension of the row space of , which in turn equals the dimension of the column space of .

- Dimensionality: We’ve established that and .

- Transpose Relationship: We also know that .

Putting these together, we have:

Therefore, the row rank of a matrix is always equal to its column rank.

This is a fundamental result in linear algebra, emphasizing the close relationship between row space and column space.

Nullspace

The nullspace (also called kernel) of a matrix , denoted , is the set of all vectors that satisfy the homogeneous equation . Formally:

Key Properties and Interpretations

- Subspace: is a subspace of . This can be easily verified by checking closure under addition and scalar multiplication.

- Solutions to Homogeneous System: The nullspace represents all solutions to the homogeneous system of linear equations represented by .

- Kernel of Linear Transformation: If represents a linear transformation, is the kernel of that transformation – the set of all vectors that map to the zero vector.

Invertible Matrices and Nullspace

Lemma 4.33: Let be an matrix, and be an invertible matrix. Then .

This lemma states that multiplying a matrix by an invertible matrix on the left does not change its nullspace.

Proof:

We need to show that any vector in is also in , and vice-versa.

- Suppose : This means . Now consider . Therefore, is also in . This shows .

- Suppose : This means . Since is invertible, we can multiply both sides by : , which simplifies to . Thus, is also in . This shows .

Since and , we conclude that .

This lemma is crucial for understanding why we can use the RREF of to find the nullspace of . The RREF is obtained by multiplying by a series of invertible elementary matrices. Thus, the nullspace of is the same as the nullspace of its RREF, which is much easier to compute.

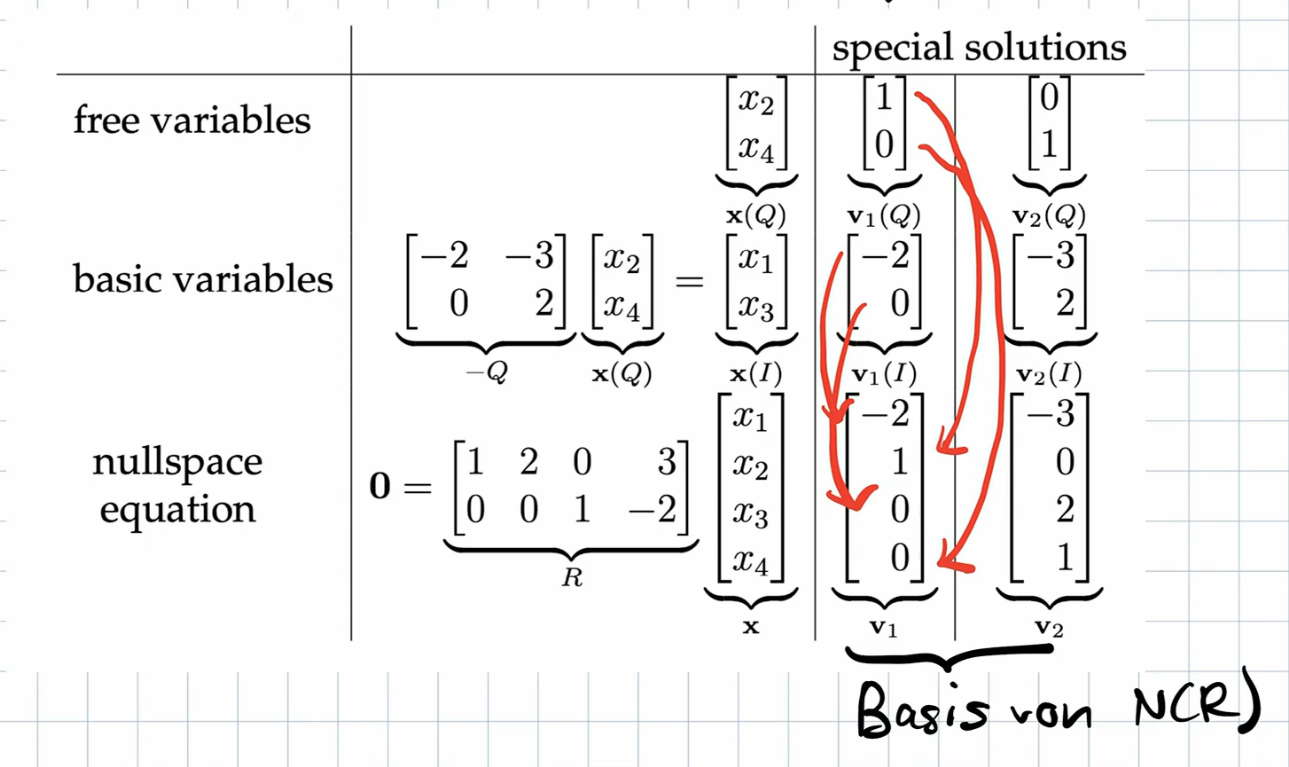

Finding a Basis for using Gauss-Jordan Elimination

Theorem 4.35:

- Reduce A to REF: Perform Gauss-Jordan elimination on to obtain its REF, denoted .

- Identify Free and Basic Variables: In the REF, identify the pivot columns and the corresponding basic variables. The remaining variables are the free variables.

- Express Basic Variables in terms of Free Variables: Express each basic variable as a linear combination of the free variables, based on the equations represented by the rows of .

- Form Special Solutions: For each free variable, create a special solution by setting that free variable to 1 and all other free variables to 0. Then, solve for the basic variables using the expressions derived in the previous step.

- Special Solutions Form a Basis: The set of special solutions forms a basis for .

Example

Let’s revisit our example matrix and its REF :

-

Basic and Free Variables: The pivot columns are 1 and 3, so and are basic variables. and are free variables.

-

Express Basic in terms of Free: From the REF, we have the equations:

Notice how we can choose our free variables and then read of .

-

Special Solutions:

-

Setting and , we get and . This gives the special solution .

-

Setting and , we get and . This gives the special solution .

- Basis for N(A): The basis for the nullspace is .

Therefore, , which is (number of columns minus the rank).

The Solution Space of

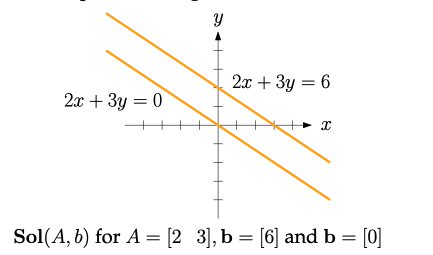

Definition 4.39: For a matrix and a vector , the solution space (or solution set) of the linear system is defined as: This set contains all vectors that satisfy the given linear system.

Key Properties and Considerations

- Subspace (only if b = 0): is a subspace of if and only if . If , then is not a subspace because it doesn’t contain the zero vector (since ).

- Affine Subspace (if b ≠ 0): When , and a solution exists, is called an affine subspace. It can be thought of as a subspace that has been “shifted” or “translated” away from the origin.

- Existence of Solutions: The solution space might be empty (i.e., no solutions exist) if is not in the column space of .

- Relationship to Nullspace: The solution space is closely related to the nullspace of . Theorem 4.40 formalizes this relationship.

Structure of the Solution Space

Theorem 4.40: Let be an matrix, and . Let be a particular solution of (if one exists). Then: This theorem states that the solution space consists of all vectors obtained by adding a particular solution to every vector in the nullspace of .

Proof:

We need to show that any vector in can be written in the form , where , and vice-versa.

-

Let : This means . Since is also a solution, . Subtracting these equations gives: This means is in the nullspace of . Let . Then , and . So, any solution can be written in the desired form.

-

Let , where : This means . Now, let’s check if is a solution to : Thus, is indeed a solution, so .

This proves that the solution set has the structure described in the theorem. If a particular solution exists, every other solution can be obtained by adding a vector from the nullspace to that particular solution. This highlights the fundamental relationship between the solution space of a non-homogeneous system and the nullspace of the corresponding matrix.

Intuition:

- : This represents one specific way to reach through the transformation defined by .

- : The nullspace captures all the directions along which you can move without changing the result (i.e., for ).

- : Adding vectors from the nullspace to the particular solution finds all other solutions. These represent variations of the particular solution that don’t affect the target .

Dimensions and Structure of Solution Spaces

-

Dimensionality of : When has a solution (i.e., the system is consistent), it has a “dimension” of , where is the rank of and is the number of columns in . This dimension intuitively represents the number of “degrees of freedom” in the solution space.

-

Parallelism to : The solution space can be viewed as a parallel translation of the nullspace . Imagine the nullspace as a subspace (or a “plane” in higher dimensions) passing through the origin. The solution space is obtained by shifting this “plane” by the particular solution .

-

Cases Based on :

-

(Full Rank): If the rank of equals the number of rows (), then the RREF of will have no rows full of zeros. This means that the system (where is the RREF of and is obtained from through the same row operations) will always have a solution. Consequently, the original system will also have a solution. In this case, the solution space is non-empty, and its dimension is , which could be 0 or greater. If it is 0, there is only one solution.

-

(Rank Deficiency): When the rank of is less than the number of rows (), this means the column space has a lower dimension than the space it’s embedded in (). Imagine as a “plane” (or a “line” in a 3D space) within a higher-dimensional space. Since is “smaller” than the space it occupies, there will be many vectors that lie outside of this “plane”. For these vectors , there’s no way to express as a linear combination of the columns of , meaning there’s no solution to the system .

Example: Rank Deficiency

Continue here: 15 Orthogonal Vectors and Orthogonal Complements of Subspaces