Lecture from 20.11.2024 | Video: Videos ETHZ

This lecture covers the Gram-Schmidt process for orthonormalizing a set of vectors and the concept of the pseudoinverse, a generalization of the matrix inverse for non-square or singular matrices.

Preparations for the Gram-Schmidt Process

Task

Given a subspace of spanned by a basis (i.e., ), our goal is to construct an orthonormal basis for .

The Idea (Two Vectors)

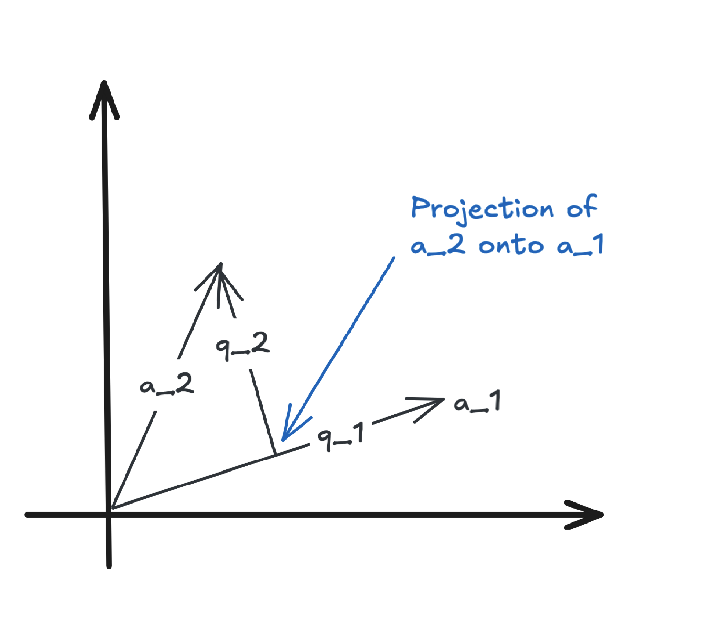

To illustrate the Gram-Schmidt process, consider a subspace spanned by two vectors and .

Steps:

-

Normalization: Normalize the first vector to obtain:

-

Orthogonalization: Subtract the projection of onto from : This ensures is orthogonal to .

- Key observation: because and are linearly independent.

-

Normalization: Normalize to obtain:

Claim: and are orthonormal.

-

Normalization: By construction, and .

-

Orthogonality: By definition of , the projection of onto is removed, leaving orthogonal to . To show this explicitly:

Expanding this:

Since and , this simplifies to:

Thus, and are orthogonal, and since they are also normalized, they form an orthonormal set.

The Gram-Schmidt process works by systematically orthogonalizing and normalizing vectors in a given basis. This ensures the resulting vectors are both orthogonal and of unit length, creating an orthonormal basis for the subspace .

The process can be extended to any number of vectors, ensuring that each new vector is orthogonal to all previously processed vectors in the set.

The Gram-Schmidt Process

The Gram-Schmidt process is an algorithm for orthonormalizing a set of linearly independent vectors. It transforms a set of vectors that span a subspace into an orthonormal basis for the same subspace.

Intuition

The process works by iteratively constructing orthonormal vectors from the original basis vectors:

- Start by normalizing the first vector to create .

- For each subsequent vector , subtract its projection onto the subspace spanned by the previously constructed orthonormal vectors .

- This subtraction removes all components of that lie in the directions of the existing . What remains is a vector orthogonal to all previous .

- Normalize this orthogonal vector to obtain the next orthonormal vector, .

This iterative approach guarantees that the resulting set is orthonormal and spans the same subspace as the original set .

Gram-Schmidt Algorithm

-

Initialize: Normalize the first vector to obtain:

-

Iterate: For :

- Orthogonalize: Subtract the projection of onto the subspace spanned by :

- Normalize: Normalize the resulting orthogonal vector:

- Orthogonalize: Subtract the projection of onto the subspace spanned by :

Proof of Correctness (by Induction)

We will prove by induction that for each , the vectors form an orthonormal basis for .

Base Case ():

-

The vector is defined as:

Since is scaled to have unit length, . -

The span of is equal to because is a scalar multiple of .

Thus, forms an orthonormal basis for .

Inductive Hypothesis:

Assume that form an orthonormal basis for .

Inductive Step ():

We need to show that:

- has unit length.

- is orthogonal to .

- span .

1. has unit length:

By construction, is defined as:

Since is normalized, its length is:

2. is orthogonal to :

To prove this, consider any :

Substitute :

Distribute the dot product:

Since are orthonormal, for , and . This simplifies to:

Since , we have:

Thus, is orthogonal to .

3. span :

By the inductive hypothesis, span . The vector is constructed as a linear combination of and . Since and , it follows that . Hence, .

Because are linearly independent and there are vectors in the -dimensional space , they form a basis for .

Conclusion

The Gram-Schmidt process constructs an orthonormal basis for the span of the input vectors . By iteratively orthogonalizing and normalizing, it ensures that the resulting vectors are orthonormal and span the same subspace.

QR Decomposition

The Gram-Schmidt orthonormalization process naturally gives rise to a valuable matrix factorization called the QR decomposition. This decomposition expresses a matrix as the product of an orthogonal matrix (or a matrix with orthonormal columns) and an upper triangular matrix. It has significant applications in linear algebra computations, including solving least squares problems and finding eigenvalues.

Definition (QR Decomposition):

Let be an matrix with linearly independent columns. The QR decomposition of is given by:

where:

- is an matrix with orthonormal columns (formed by the vectors obtained from the Gram-Schmidt process applied to the columns of ), and

- is an upper triangular matrix.

Lemma (Properties of QR Decomposition):

In the QR decomposition :

- is upper triangular and invertible.

- . This shows that the columns of span the same space as the columns of , i.e., .

Proof of Properties:

1. Upper Triangularity of :

The entries of are given by:

From the Gram-Schmidt process, is orthogonal to because is orthogonal to the subspace spanned by . Therefore, for :

This means is upper triangular.

2. Invertibility of :

The matrix is constructed as an upper triangular matrix with diagonal entries representing the norms of the orthogonal components of the input vectors (after subtracting projections onto the previously computed orthonormal vectors). Specifically:

where .

Key Reason for Non-Zero :

- The columns of are assumed to be linearly independent. This ensures that none of the input vectors is in the span of the previous vectors . Consequently, after subtracting their projections, the resulting orthogonal component is non-zero.

- Since , and , it follows that for all .

Invertibility of :

- is upper triangular by construction, with all diagonal entries .

- A standard result in linear algebra is that an upper triangular matrix is invertible if and only if all its diagonal entries are non-zero.

3. and

We will rewrite the proof using the concept of projection matrices.

Step 1: Projection Matrix for

The projection matrix onto the column space of , denoted as , is given by:

For matrices where has orthonormal columns, we have , where is the identity matrix. Thus:

Step 2: Projection of ‘s Columns onto

Let denote the -th column of . Since (i.e., the columns of span the same space as the columns of ), the projection of onto is the column itself. Formally:

Since , projecting onto leaves it unchanged:

Step 3: Generalization to the Entire Matrix

Applying the above argument to all columns of , we see that the projection of onto is itself:

Step 4: Why ?

The equality implies that the columns of span the same space as the columns of . Specifically:

- The action of maps any vector in to itself, ensuring that .

- Since is constructed using the Gram-Schmidt process on the columns of , the columns of are linear combinations of the columns of , ensuring .

Therefore, , completing the proof.

Example of QR Decomposition

Let

We will compute its QR decomposition.

Step 1: Apply Gram-Schmidt to Columns of

Let and .

-

Normalize :

-

Orthogonalize relative to :

The projection of onto is:

Compute the scalar :

Therefore,

Subtract this projection from to get :

-

Normalize :

where:

Thus:

Step 2: Construct and

The orthonormal vectors and form the columns of :

The matrix is given by :

-

Compute :

-

Compute :

-

Compute :

The matrix is:

Final QR Decomposition

Computational Usefulness of QR Decomposition

1. Projections:

Since , projecting onto is the same as projecting onto . With orthonormal columns in , the projection simplifies to:

2. Least Squares:

The least squares solution to minimizes . Using the QR decomposition (), the normal equations become:

Since is invertible, this simplifies to:

which can be efficiently solved by back-substitution because is upper triangular.

Continue here: 20 Pseudoinverses, Constructing Pseudoinverses