Lecture from 22.11.2024 | Video: Videos ETHZ

The Pseudoinverse

The pseudoinverse, also called the Moore-Penrose Inverse and denoted as , generalizes the matrix inverse for matrices that are not invertible. This includes matrices that are non-square or singular.

Challenges with Inverses

For certain systems, such as , there can be multiple complications:

- The system may have no solution.

- The system may have infinitely many solutions.

- We need an operation that generalizes the matrix inverse to handle such cases, ideally represented as a matrix multiplication.

Plan for Defining the Pseudoinverse

- For full column rank matrices, we define the pseudoinverse.

- For full row rank matrices, we provide a different definition.

- For general matrices, we extend the pseudoinverse definition.

Pseudoinverse for Full Column Rank Matrices

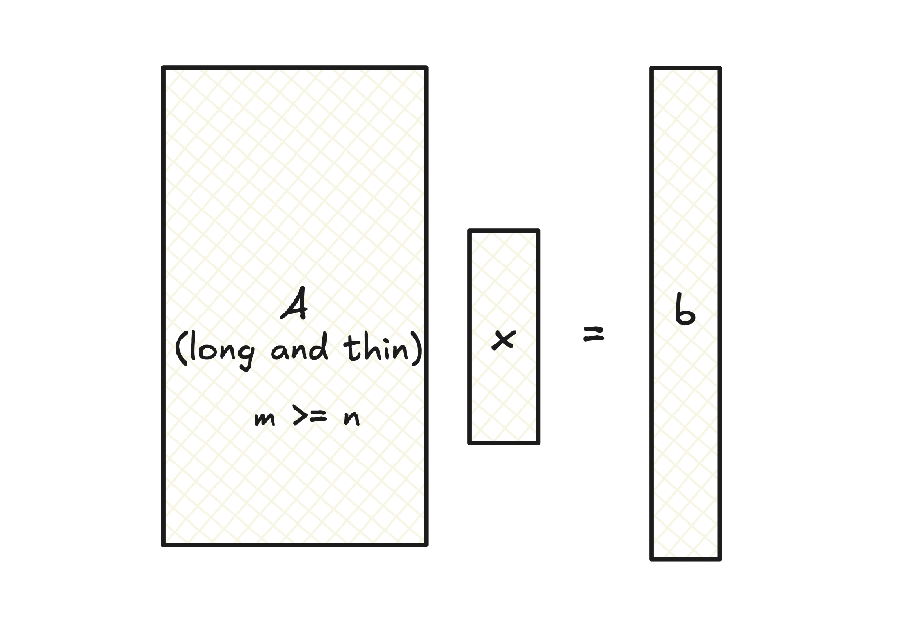

Let’s delve into the concept of the pseudoinverse, a powerful tool in linear algebra that allows us to handle systems of equations, especially overdetermined ones, where we have more equations than unknowns. Think of trying to fit a line to a cloud of data points—there’s likely no single line that goes through all the points, leading to an overdetermined system.

When dealing with a matrix that has full column rank (meaning its columns are linearly independent), we can define a special kind of inverse called the pseudoinverse. This comes in handy when we can’t find a true inverse (e.g., when is not square) but still want to solve equations involving .

Definition

For an matrix with full column rank (rank() = ), the pseudoinverse is defined as:

Notice that is a square matrix, and since has full column rank, is invertible. This invertibility is crucial for the existence of the pseudoinverse.

Proposition

The pseudoinverse acts as a left inverse for :

Proof

The proof is straightforward. Since we’ve established that is invertible, we can simply substitute the definition of :

Example

Let’s solidify this with a concrete example. Take the matrix:

-

Compute :

-

Compute the pseudoinverse:

-

Verify the left inverse property:

Least Squares Solution and Projection

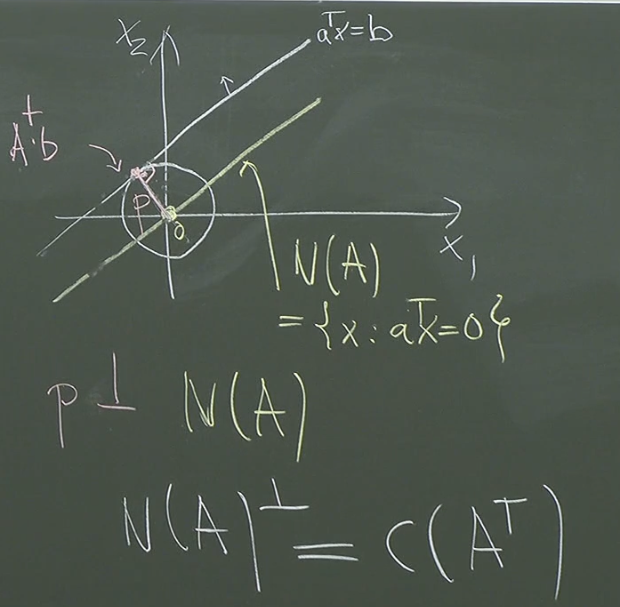

Now, let’s connect the pseudoinverse to the problem of solving , especially when it’s overdetermined. Because has full column rank, we likely won’t find an exact solution. However, we can seek the next best thing: a least squares solution, minimizing the squared error .

We want to project into . The projection is given by for some vector . The error must be orthogonal to . Since , the error must be in , i.e. it must be orthogonal to the column space of . This is expressed by , leading to the normal equation:

We know is invertible since has full column rank, so we can solve for :

Look closely—this is exactly ! So, the pseudoinverse gives us a direct way to compute the least squares solution:

The pseudoinverse efficiently handles the projection step implicit in the least squares solution, finding the vector that minimizes the error . In essence, is the projection of onto the column space of , which is the closest we can get to solving in an overdetermined system.

Pseudoinverse for Full Row Rank Matrices

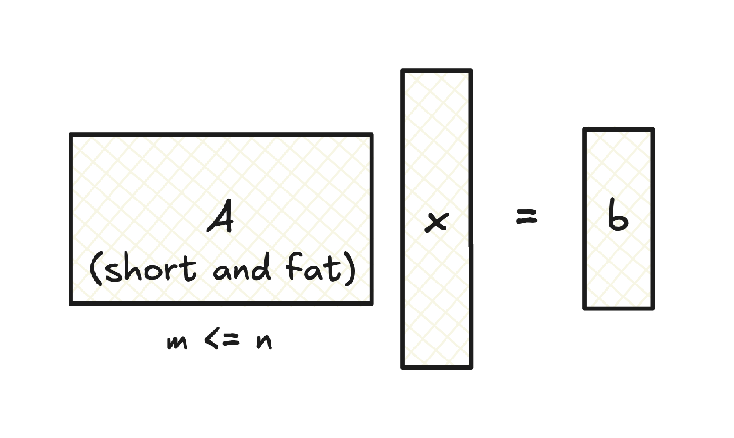

Now, let’s explore the pseudoinverse for matrices with full row rank. This scenario arises when you have more unknowns than equations – an underdetermined system. Imagine having two equations but three unknowns ; there are infinitely many solutions, right? The pseudoinverse helps us find a specific solution among these: the one with the smallest norm (length).

Definition

For an matrix with full row rank (rank() = ), the pseudoinverse is defined as:

In this case, is a square matrix, and since has full row rank, is invertible. This, again, is essential for the pseudoinverse to exist.

Alternative Derivation of the Pseudoinverse for Full Row Rank

Let’s add another perspective on deriving the pseudoinverse for full row rank matrices. We can cleverly leverage what we already know about the pseudoinverse for full column rank matrices.

Recall that if a matrix has full row rank, its transpose has full column rank. So, if is with full row rank (), then is with full column rank ().

We already know how to compute the pseudoinverse of a full column rank matrix. So, we can find the pseudoinverse of :

Now, remember a key property we’ll prove later about pseudoinverses: . This means that the pseudoinverse of the transpose is equal to the transpose of the pseudoinverse.

Using this property, we can find by simply taking the transpose of :

And there we have it! This is precisely the definition of the pseudoinverse for a full row rank matrix. This alternative derivation neatly demonstrates a connection between the full row rank and full column rank cases. It showcases how understanding one case can illuminate others through the use of transposes and their properties.

Proposition

The pseudoinverse acts as a right inverse for :

Proof

Similar to the full column rank case, the proof is a simple substitution:

Example

Let’s illustrate this with an example. Consider the matrix:

-

Compute :

-

Compute the pseudoinverse:

-

Verify the right inverse property:

Minimum-Norm Solution and its Relevance

The pseudoinverse is particularly useful when has infinitely many solutions (e.g., when has full row rank and ). In such cases, it pinpoints the unique solution with the smallest magnitude (the minimum Euclidean norm).

Importance of Minimum-Norm Solutions:

- Efficiency (Engineering): Often corresponds to the least resource-intensive solution.

- Simplicity (Machine Learning, etc.): Represents the simplest explanation among equivalent solutions.

- Stability: More resilient to noise or errors.

Proof of Minimum-Norm Property

Our goal is to prove that is the minimum-norm solution among all solutions to when has full row rank.

1. General Solution

Any solution to can be expressed as:

where is an arbitrary vector.

- Particular Solution: is a particular solution because, for full row rank matrices, . Therefore:

- Nullspace Component: lies in the nullspace of . This is because:

Thus, adding any multiple of a nullspace vector to a particular solution yields another solution.

2. Orthogonality

The key insight is that:

- lies in the row space of (denoted ).

- lies in the nullspace of .

The row space and nullspace are orthogonal complements. This orthogonality is crucial for the next step.

3. Pythagorean Theorem and Minimum Norm

The norm squared of any solution can be written as:

Using the Pythagorean theorem (since the components are orthogonal):

To minimize , we must minimize the second term .

4. Nullspace Component Minimization

The term is minimized when lies entirely in the row space of , making . In this case, the solution simplifies to:

5. The Pseudoinverse Solution

Thus, the pseudoinverse provides the solution with the minimum norm because it eliminates the nullspace component, leaving the solution entirely within the row space of .

Pseudoinverse for General Matrices

CR Decomposition

Any matrix with rank() = can be factored as:

where:

- is an matrix with full column rank (formed by the first linearly independent columns of ), and

- is an matrix with full row rank (formed by the coefficients of in the basis of its column space).

Definition of Pseudoinverse for General Matrices

For a matrix with rank and CR decomposition , the pseudoinverse is defined as:

Alternatively, this can be expressed as:

Lemma (Minimum-Norm Least Squares Solution)

For and , the unique solution to:

is given by:

This result gives the minimum-norm least squares solution.

Proof of Minimum-Norm Least Squares Solution (General Case and Lemma)

This proof addresses both the minimum-norm solution for full row rank matrices and the general lemma concerning the minimum-norm least squares solution using the CR decomposition.

1. The Goal

We aim to prove that for any and , the unique solution to:

is given by . This handles underdetermined (full row rank), overdetermined (full column rank), and general cases.

2. CR Decomposition and Pseudoinverse

Let rank() = . Decompose , where:

- has full column rank (captures the first linearly independent columns of ),

- has full row rank (maps the row space).

The pseudoinverse of is given by , where:

- and .

3. Rewriting the Normal Equations

The constraint is equivalent to solving the least squares problem. Substituting into the equations gives:

This simplifies to:

Because has full row rank, has full column rank, meaning . Thus, we can reduce this to:

4. Expressing the General Solution

Since has full column rank, is invertible. Therefore, we solve:

To find the minimum-norm solution, we consider the general solution form for (from earlier proofs):

where is an arbitrary vector, and . The minimum-norm solution occurs when the nullspace component vanishes:

Substituting and :

This is equivalent to . Thus, is the minimum-norm solution.

5. Connecting to the Full Row Rank Case

When has full row rank (), becomes an invertible matrix. In this case:

- The pseudoinverse simplifies to , consistent with earlier definitions.

- The proof proceeds similarly since is invertible, allowing . This leads to:

Solving, we find:

Following the same steps, we deduce the minimum-norm solution as:

This confirms that yields the same minimum-norm solution.

Summary

This proof uses the CR decomposition and properties of full rank matrices to establish the general minimum-norm least squares solution. It connects to the specific case of full row rank matrices, showing how the pseudoinverse unifies all cases. The solution:

minimizes the error in the least-squares sense while also ensuring the smallest possible norm, addressing both accuracy and efficiency.

Properties of the Pseudoinverse

Theorem

For any matrix , the following properties hold:

- and .

- is symmetric and is the projection matrix onto the column space .

- is symmetric and is the projection matrix onto the row space .

- .

Proof of Properties

-

and :

Using the CR decomposition , we already know . Now, for :

since (for full row rank) and (for full column rank).

-

Projects Onto :

Using the CR decomposition:

Since , is the projection onto . It is symmetric because the pseudoinverse ensures .

-

Projects Onto :

Similarly, using the CR decomposition:

This is symmetric and projects onto , as spans the row space of .

-

:

For full column rank, .

For full row rank, .

For general , we have:

This completes the proof of the properties of the pseudoinverse.

Continue here: 21 Certificates, Linear Systems of Inequalities, Projections of Polyhedra, Farkas Lemma