Lecture from 29.11.2024 | Video: Videos ETHZ

Definition and Properties

Introduction

In this lecture, we delve into the concept of determinants, a fundamental function in linear algebra that assigns a scalar value to a square matrix. Determinants play a crucial role in various applications, including solving systems of linear equations, determining the invertibility of matrices, and calculating volumes of geometric objects.

Intro to determinants…

I genuinely suggest you watch this video (and maybe the next few in the playlist) before reading this or watching the lecture…

The Determinant as a Function over Matrices

The determinant is a function defined specifically for square matrices. If $A$ is an $n \times n$ matrix (denoted as $A \in \mathbb{R}^{n \times n}$), its determinant, denoted as $\det(A)$, is a real number.

Geometric Interpretation:

The absolute value of the determinant, $|\det(A)|$, represents the volume of the parallelepiped spanned by the column vectors of the matrix $A$. A parallelepiped is the generalization of a parallelogram to higher dimensions. In 2D, it’s a parallelogram; in 3D, it’s a parallelepiped; and so on.

More formally, if the columns of $A$ are denoted as $a_1, \dots, a_n$, the parallelepiped is defined as:

$$P = \left\{ \sum_{i=1}^{n} \lambda_i a_i \;:\; 0 \le \lambda_i \le 1 \text{ for all } i \right\}$$

This set describes all linear combinations of the column vectors of $A$ whose coefficients lie between 0 and 1. The volume of this region is given by $|\det(A)|$. The sign of the determinant indicates the orientation of the parallelepiped.

Fundamental Properties of Determinants

Determinants possess several key properties that are essential for understanding and working with them:

- Invertibility: A square matrix $A$ is invertible (i.e., has an inverse matrix $A^{-1}$) if and only if its determinant is non-zero: $\det(A) \neq 0$. This is a crucial connection between the determinant and the solvability of linear systems.

- Determinant of the Transpose: The determinant of a matrix is equal to the determinant of its transpose: $\det(A) = \det(A^\top)$. The transpose of a matrix is obtained by interchanging its rows and columns.

- Linearity: The determinant is a linear function of each row (or each column) of the matrix when the other rows (or columns) are held fixed. More formally:

Let $A$ and $B$ be two $n \times n$ matrices that are identical except for their $i$-th row. Let $C$ be an $n \times n$ matrix whose rows agree with the corresponding rows of $A$ (and $B$), except for the $i$-th row, where for scalars $\alpha, \beta \in \mathbb{R}$ we have:

$$C_{i,:} = \alpha A_{i,:} + \beta B_{i,:}$$

Then,

$$\det(C) = \alpha \det(A) + \beta \det(B)$$

In particular (taking $\alpha = \beta = 1$), we can “split” a determinant calculation if one row is a sum of two vectors.

- Multiplicativity: The determinant of the product of two matrices is equal to the product of their determinants: $\det(AB) = \det(A)\det(B)$. This property is extremely useful in various proofs and calculations.

These four properties are fundamental to the theory of determinants. We’ll use them to derive further results and computational methods.

2x2 Matrices and Proofs

Determinants of 2x2 Matrices

For a $2 \times 2$ matrix $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, the determinant is defined as:

$$\det(A) = ad - bc$$

This simple formula allows us to easily calculate the determinant of any $2 \times 2$ matrix.

Example:
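A minimal Python sketch of the $2 \times 2$ formula. The function name `det2` and the matrix below are chosen here for illustration and are not taken from the lecture.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * d - b * c

# Illustrative matrix (an assumption, not the lecture's example).
A = [[3, 1], [2, 4]]
print(det2(A))  # 3*4 - 1*2 = 10
```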

Lemma:

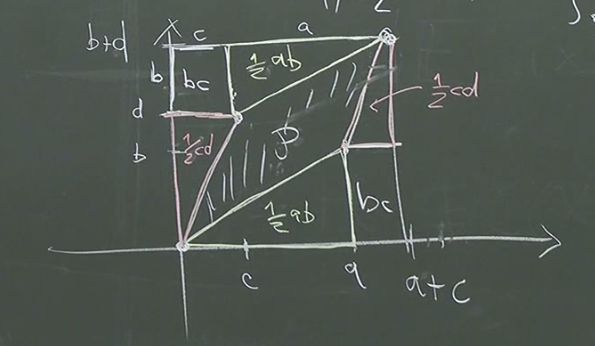

Let $A = \begin{pmatrix} a & c \\ b & d \end{pmatrix}$. The absolute value of the determinant of $A$ is equal to the volume (area in this case) of the parallelogram $P$ spanned by the column vectors of $A$. The vertices of the parallelogram are (0,0), (a,b), (c,d), and (a+c, b+d).

We can calculate the area of $P$ by finding the area of the large rectangle enclosing the parallelogram and subtracting the areas of the four triangles and two small rectangles. (Note that two of the triangles are congruent, and the other two are also congruent.) For the configuration in which all entries are positive and $ad \ge bc$:

$$\text{Area}(P) = (a+c)(b+d) - 2 \cdot \tfrac{1}{2}ab - 2 \cdot \tfrac{1}{2}cd - 2bc = ad - bc$$

Taking the absolute value to account for possible negative orientation:

$$\text{Area}(P) = |ad - bc| = |\det(A)|$$

Thus, the absolute value of the determinant of a $2 \times 2$ matrix represents the area of the parallelogram formed by its column vectors.
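As a quick numerical cross-check of the lemma, the sketch below computes the parallelogram's area with the shoelace formula (a different technique from the rectangle-subtraction argument in the notes) and compares it with $|ad - bc|$. The numbers and the helper name `shoelace_area` are illustrative assumptions.

```python
def shoelace_area(vertices):
    """Area of a simple polygon from its vertices listed in order (shoelace formula)."""
    total = 0
    n = len(vertices)
    for k in range(n):
        x1, y1 = vertices[k]
        x2, y2 = vertices[(k + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2

# Columns of A are (a, b) and (c, d); values chosen for illustration.
a, b, c, d = 3, 1, 1, 2
vertices = [(0, 0), (a, b), (a + c, b + d), (c, d)]
print(shoelace_area(vertices), abs(a * d - b * c))  # 5.0 5
```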

Proof of Multiplicativity for 2x2 Matrices

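Let’s prove the multiplicativity property ($\det(AB) = \det(A)\det(B)$) for $2 \times 2$ matrices.

Let $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ and $B = \begin{pmatrix} e & f \\ g & h \end{pmatrix}$. Then:

$$AB = \begin{pmatrix} ae + bg & af + bh \\ ce + dg & cf + dh \end{pmatrix}$$

Now, let’s calculate the determinant of $AB$:

$$\det(AB) = (ae + bg)(cf + dh) - (af + bh)(ce + dg) = aedh + bgcf - afdg - bhce$$

(Notice how the terms $aecf$ and $afce$ cancel out, as do $bgdh$ and $bhdg$.)

We can rearrange this expression:

$$\det(AB) = ad(eh - fg) - bc(eh - fg) = (ad - bc)(eh - fg) = \det(A)\det(B)$$

This proves the multiplicativity property for $2 \times 2$ matrices.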
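The identity can be spot-checked numerically. The following is a small sketch with two arbitrarily chosen integer matrices; `det2` and `matmul2` are helper names introduced here.

```python
def det2(m):
    """Determinant of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * d - b * c

def matmul2(x, y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

# Example matrices (illustrative values only).
A = [[1, 2], [3, 4]]
B = [[0, 5], [-1, 2]]
print(det2(matmul2(A, B)), det2(A) * det2(B))  # -10 -10
```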

Characterizing Invertibility of 2x2 Matrices using the Determinant

Lemma: A $2 \times 2$ matrix $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ is invertible if and only if $\det(A) = ad - bc \neq 0$.

Proof:

(Forward direction): If $A$ is invertible, it has an inverse $A^{-1}$ such that $AA^{-1} = I$ (the identity matrix). Using the multiplicativity property:

$$\det(A)\det(A^{-1}) = \det(AA^{-1}) = \det(I) = 1$$

Since the product equals 1, neither determinant can be zero. Thus, $\det(A) \neq 0$.

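(Reverse direction): Assume $\det(A) = ad - bc \neq 0$. Without loss of generality, let’s assume $a \neq 0$. (If $a = 0$, then $bc \neq 0$, so $c$ must be non-zero, and we can use a similar argument.)

Consider the system of linear equations $AX = I$ for an unknown $2 \times 2$ matrix $X$:

$$\begin{pmatrix} a & b \\ c & d \end{pmatrix} \begin{pmatrix} x & y \\ z & w \end{pmatrix} = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$$

This gives us the following equations:

$$ax + bz = 1, \qquad ay + bw = 0, \qquad cx + dz = 0, \qquad cy + dw = 1$$

From the first and third equations, we can solve for $x$ and $z$ (since we assumed $a \neq 0$). The first gives $x = (1 - bz)/a$; substituting this into the third yields $z(ad - bc) = -c$, so:

$$z = \frac{-c}{ad - bc}, \qquad x = \frac{d}{ad - bc}$$

Similarly, the second equation ($ay + bw = 0$) gives $y = -bw/a$. Substituting into the fourth equation and simplifying:

$$w = \frac{a}{ad - bc}, \qquad y = \frac{-b}{ad - bc}$$

The existence of these solutions for $x$, $y$, $z$, and $w$ demonstrates that $A^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$ exists (one can check directly that it also satisfies $A^{-1}A = I$), so $A$ is invertible.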

This completes the proof that a $2 \times 2$ matrix is invertible if and only if its determinant is non-zero. This result will generalize to higher dimensions.
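The solution of the system above gives the closed form $A^{-1} = \frac{1}{ad - bc}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$. A minimal sketch, assuming integer or rational entries and using exact fractions to avoid rounding (the function name `inverse2` is an assumption made here):

```python
from fractions import Fraction

def inverse2(m):
    """Inverse of a 2x2 matrix [[a, b], [c, d]], or None if det = 0."""
    (a, b), (c, d) = m
    det = Fraction(a) * d - Fraction(b) * c
    if det == 0:
        return None  # singular: no inverse exists
    return [[d / det, -b / det], [-c / det, a / det]]

print(inverse2([[1, 2], [3, 4]]))  # entries -2, 1, 3/2, -1/2 as Fractions
print(inverse2([[1, 2], [2, 4]]))  # None, since 1*4 - 2*2 = 0
```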

Permutations and Higher Dimensions

Defining Determinants using Permutations…

Once again, I suggest watching this before reading this or watching the lecture…

The $n \times n$ Case: Permutations

To define the determinant for general $n \times n$ matrices, we need the concept of permutations.

Definition (Permutation): A permutation of the set $\{1, \dots, n\}$ is a bijective function $\sigma: \{1, \dots, n\} \to \{1, \dots, n\}$. In other words, it’s a reordering of the numbers from 1 to $n$. The set of all permutations of $n$ elements is denoted by $S_n$.

Example: For $n = 3$, one possible permutation is $\sigma(1) = 2$, $\sigma(2) = 3$, $\sigma(3) = 1$.

Definition (Sign of a Permutation):

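The sign of a permutation $\sigma$, denoted as $\operatorname{sgn}(\sigma)$, is either +1 or -1. It’s determined by the number of inversions in the permutation. An inversion is a pair of elements $(i, j)$ such that $i < j$ but $\sigma(i) > \sigma(j)$.

- If the number of inversions is even, $\operatorname{sgn}(\sigma) = +1$.

- If the number of inversions is odd, $\operatorname{sgn}(\sigma) = -1$.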

Example:

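Let’s find the sign of the permutation $\sigma$ where $\sigma(1) = 1$, $\sigma(2) = 3$, $\sigma(3) = 2$, $\sigma(4) = 4$. The pairs $(i, j)$ with $i < j$ are:

(1, 2), (1, 3), (1, 4), (2, 3), (2, 4), (3, 4)

Comparing the permuted values:

- $(1, 2)$: $\sigma(1) = 1$, $\sigma(2) = 3$ (1 < 3)

- $(1, 3)$: $\sigma(1) = 1$, $\sigma(3) = 2$ (1 < 2)

- $(1, 4)$: $\sigma(1) = 1$, $\sigma(4) = 4$ (1 < 4)

- $(2, 3)$: $\sigma(2) = 3$, $\sigma(3) = 2$ (3 > 2) - This is an inversion!

- $(2, 4)$: $\sigma(2) = 3$, $\sigma(4) = 4$ (3 < 4)

- $(3, 4)$: $\sigma(3) = 2$, $\sigma(4) = 4$ (2 < 4)

There’s only one inversion, so $\operatorname{sgn}(\sigma) = -1$.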
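The inversion count can be carried out mechanically. A minimal sketch, assuming a permutation is stored as the tuple of its values $(\sigma(1), \dots, \sigma(n))$; the function name `sign` is chosen here:

```python
def sign(perm):
    """Sign of a permutation given as the tuple (sigma(1), ..., sigma(n))."""
    n = len(perm)
    # Count pairs i < j whose images appear in the wrong order.
    inversions = sum(1 for i in range(n) for j in range(i + 1, n)
                     if perm[i] > perm[j])
    return 1 if inversions % 2 == 0 else -1

print(sign((1, 3, 2, 4)))  # one inversion, so -1
```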

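Definition of the Determinant for $n \times n$ Matrices

Using permutations, we can define the determinant of an $n \times n$ matrix $A$ as:

$$\det(A) = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} A_{i,\sigma(i)}$$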

This formula calculates the determinant as a sum over all possible permutations of the columns. Each term in the sum is a product of entries from the matrix, one from each row and each column, with the sign determined by the permutation.
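A direct, unoptimized translation of the permutation sum into Python might look as follows. It is only a sketch for small matrices (the sum has $n!$ terms); indices are 0-based, and the helper names and the example matrix are assumptions made here.

```python
from itertools import permutations
from math import prod

def sign(perm):
    """Sign of a permutation (tuple of distinct integers) via inversion count."""
    n = len(perm)
    inv = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return 1 if inv % 2 == 0 else -1

def det(a):
    """Determinant via the sum over all permutations (n! terms)."""
    n = len(a)
    return sum(sign(p) * prod(a[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
print(det(A))  # 18
```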

Remarks:

- Multiplicativity of the Sign: For any two permutations $\sigma$ and $\tau$, $\operatorname{sgn}(\sigma \circ \tau) = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)$, where $\sigma \circ \tau$ denotes the composition of the two permutations.

- Equal Number of Positive and Negative Signs: For $n \geq 2$, exactly half of the permutations in $S_n$ have a sign of +1, and the other half have a sign of -1.

- Determinant of Permutation Matrices: A permutation matrix $P$ is a matrix obtained by permuting the rows (or columns) of the identity matrix. The determinant of a permutation matrix is equal to the sign of the corresponding permutation: $\det(P) = \operatorname{sgn}(\sigma)$, where $\sigma$ is the permutation represented by $P$.

- Determinant of a $1 \times 1$ Matrix: For a $1 \times 1$ matrix $A = (a)$, there’s only one permutation (the identity), and its sign is +1. Therefore, $\det(A) = a$.
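The first two remarks can be verified by brute force for a small $n$. The sketch below does so for $n = 4$ with 0-based permutations; `sign` and `compose` are helper names introduced here, and this is a sanity check, not a proof.

```python
from itertools import permutations

def sign(perm):
    """Sign of a permutation (tuple of distinct integers) via inversion count."""
    n = len(perm)
    inv = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return 1 if inv % 2 == 0 else -1

def compose(s, t):
    """Composition (s o t)(i) = s(t(i)) for 0-based permutation tuples."""
    return tuple(s[t[i]] for i in range(len(s)))

perms = list(permutations(range(4)))
signs = [sign(p) for p in perms]
print(signs.count(1), signs.count(-1))  # 12 12

all_mult = all(sign(compose(s, t)) == sign(s) * sign(t)
               for s in perms for t in perms)
print(all_mult)  # True
```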

Determinant of the Transpose and Further Observations

Further Observations

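1. Determinants of $2 \times 2$ Matrices Revisited:

Let’s revisit the determinant of a $2 \times 2$ matrix in light of our new definition using permutations. For a $2 \times 2$ matrix $A$, there are two permutations:

- $\sigma_1$: the identity permutation ($\sigma_1(1) = 1$, $\sigma_1(2) = 2$), with $\operatorname{sgn}(\sigma_1) = +1$

- $\sigma_2$: the permutation that swaps the two elements ($\sigma_2(1) = 2$, $\sigma_2(2) = 1$), with $\operatorname{sgn}(\sigma_2) = -1$

Applying the determinant formula:

$$\det(A) = A_{11}A_{22} - A_{12}A_{21}$$

This matches our earlier formula: $\det(A) = ad - bc$.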

2. Determinant of Triangular Matrices:

For a triangular matrix (either upper or lower triangular), the determinant is the product of the diagonal entries.

Let $A \in \mathbb{R}^{n \times n}$ be a triangular matrix. Then:

$$\det(A) = \prod_{i=1}^{n} A_{ii}$$

Proof: Consider an upper triangular matrix, so $A_{ij} = 0$ whenever $i > j$ (the lower triangular case is analogous). In the permutation expansion, a term $\prod_i A_{i,\sigma(i)}$ can only be non-zero if $\sigma(i) \geq i$ for every $i$. The only permutation with this property is the identity: $\sigma(n) \geq n$ forces $\sigma(n) = n$, then $\sigma(n-1) = n-1$, and so on. Every other permutation picks at least one entry below the diagonal, making its product zero. The single remaining term is the product of the diagonal entries, with sign +1. In particular, the identity matrix is triangular with all diagonal entries equal to 1, so $\det(I) = 1$.

Theorem: Determinant of the Transpose

Theorem: For any square matrix $A \in \mathbb{R}^{n \times n}$, $\det(A^\top) = \det(A)$.

This theorem states a fundamental property: the determinant of a matrix is equal to the determinant of its transpose. The transpose of a matrix is obtained by swapping its rows and columns.

Proof

1. Inverse Permutation

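- Definition: For a permutation $\sigma$, its inverse $\sigma^{-1}$ reverses the mapping: if $\sigma$ sends $i$ to $j$ ($\sigma(i) = j$), then $\sigma^{-1}$ sends $j$ back to $i$ ($\sigma^{-1}(j) = i$).

- Key Property: Equal Signs: The sign of a permutation is the same as the sign of its inverse: $\operatorname{sgn}(\sigma) = \operatorname{sgn}(\sigma^{-1})$.

- Why Equal Signs?

  - Applying a permutation and then its inverse gets us back to the original order: $\sigma^{-1} \circ \sigma = \text{id}$, where ‘id’ is the identity permutation (leaving everything in place).

  - The identity permutation has no inversions, so its sign is $+1$: $\operatorname{sgn}(\text{id}) = 1$.

  - The sign of a composition of permutations is the product of their signs: $\operatorname{sgn}(\sigma \circ \tau) = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)$.

  - Combining these facts: $\operatorname{sgn}(\sigma^{-1})\operatorname{sgn}(\sigma) = \operatorname{sgn}(\text{id}) = 1$. Since the sign can only be +1 or -1, both $\sigma$ and $\sigma^{-1}$ must have the same sign.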

2. Determinant of the Transpose

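- Definition of Transpose: Recall that the transpose $A^\top$ of a matrix $A$ has entries $(A^\top)_{ij} = A_{ji}$. Rows become columns, and columns become rows.

- Determinant Formula for the Transpose: Using the definition of the determinant and the property of the transpose, we have:

$$\det(A^\top) = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} (A^\top)_{i,\sigma(i)} = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} A_{\sigma(i),i}$$

- Focus on the Product: The key difference from $\det(A)$ is the order of indices in the product: $A_{\sigma(i),i}$ instead of $A_{i,\sigma(i)}$.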

3. Change of Index (Clever Trick)

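- Relabeling: Let $j = \sigma(i)$. Then, $i = \sigma^{-1}(j)$. As $i$ goes from 1 to $n$, so does $j$ (because $\sigma$ is a bijection, i.e. a one-to-one and onto mapping).

- Rewriting the Product: This relabeling allows us to rewrite the product:

$$\prod_{i=1}^{n} A_{\sigma(i),i} = \prod_{j=1}^{n} A_{j,\sigma^{-1}(j)}$$

We’ve essentially switched the roles of the row and column indices by using the inverse permutation.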

4. Rewriting the Sum (Using the Key Property)

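- Substituting the Sign: We know that $\operatorname{sgn}(\sigma) = \operatorname{sgn}(\sigma^{-1})$. Substituting this, together with the rewritten product $\prod_{j} A_{j,\sigma^{-1}(j)}$, into the determinant formula for $A^\top$:

$$\det(A^\top) = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma^{-1}) \prod_{j=1}^{n} A_{j,\sigma^{-1}(j)}$$

We are summing the same terms as in $\det(A)$ but in a different order. Since addition is commutative, the sum is unchanged.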

5. Same as Determinant of A (Relabeling the Sum)

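- Since we sum over all permutations, summing over $\sigma$ is the same as summing over $\sigma^{-1}$. Let’s just call $\sigma^{-1}$ as $\tau$:

$$\det(A^\top) = \sum_{\tau \in S_n} \operatorname{sgn}(\tau) \prod_{j=1}^{n} A_{j,\tau(j)}$$

- This is now exactly the definition of $\det(A)$!

Therefore, $\det(A^\top) = \det(A)$.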
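Both ingredients of the proof, equal signs for $\sigma$ and $\sigma^{-1}$ and the resulting equality of determinants, can be checked numerically. The sketch below uses 0-based permutations; all helper names and the example matrix are assumptions introduced here.

```python
from itertools import permutations
from math import prod

def sign(perm):
    """Sign of a permutation (tuple of distinct integers) via inversion count."""
    n = len(perm)
    inv = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return 1 if inv % 2 == 0 else -1

def inverse(perm):
    """Inverse permutation: if perm[i] == j then inverse(perm)[j] == i."""
    inv = [0] * len(perm)
    for i, j in enumerate(perm):
        inv[j] = i
    return tuple(inv)

def det(a):
    """Determinant via the sum over all permutations."""
    n = len(a)
    return sum(sign(p) * prod(a[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
At = [list(col) for col in zip(*A)]  # transpose: columns become rows
print(det(A), det(At))  # 18 18
print(all(sign(p) == sign(inverse(p)) for p in permutations(range(4))))  # True
```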

General Properties and Co-factors

General Properties of the Determinant Operator

We now delve into some crucial properties of the determinant that extend beyond the specific cases we’ve examined so far.

Theorem

- Invertibility: A matrix $A \in \mathbb{R}^{n \times n}$ is invertible if and only if $\det(A) \neq 0$. We proved this for the $2 \times 2$ case; the proof for the general case is more involved and relies on concepts we’ll develop later. This also means that if $\det(A) = 0$, the matrix is singular (not invertible). It indicates that the columns (or rows) of $A$ are linearly dependent, and the matrix does not have full rank.

- Multiplicativity: For any two matrices $A, B \in \mathbb{R}^{n \times n}$, $\det(AB) = \det(A)\det(B)$. The proof for the general case requires more advanced techniques beyond the scope of this lecture, but relies on the properties of permutations and matrix multiplication. This property implies that the determinant respects matrix multiplication; the determinant of a product is the product of the determinants. This is crucial for understanding how determinants change under transformations.

- Determinant of the Inverse: If $A$ is invertible (i.e., $\det(A) \neq 0$), then

$$\det(A^{-1}) = \frac{1}{\det(A)}$$

This follows directly from the multiplicativity property: $\det(A)\det(A^{-1}) = \det(AA^{-1}) = \det(I) = 1$, so $\det(A^{-1})$ must be the reciprocal of $\det(A)$.

Lemma: Determinant of Orthogonal Matrices

Lemma: If $Q \in \mathbb{R}^{n \times n}$ is an orthogonal matrix, then $\det(Q) \in \{+1, -1\}$.

Definition: Recall that a square matrix $Q$ is orthogonal if its transpose is equal to its inverse: $Q^\top = Q^{-1}$. This also implies that $Q^\top Q = QQ^\top = I$. Geometrically, orthogonal transformations preserve lengths and angles.

Proof

- Determinant of the Identity: The determinant of the identity matrix is 1: $\det(I) = 1$. This is because the only non-zero term in the permutation expansion of the determinant is the product of the diagonal elements, which are all 1, associated with the identity permutation (which has a sign of +1).

- Orthogonality Property: Since $Q$ is orthogonal, we have $Q^\top Q = I$.

- Determinant of the Product: Using the property that the determinant of a product is the product of the determinants:

$$\det(Q^\top Q) = \det(Q^\top)\det(Q)$$

- Determinant of the Transpose: We know that $\det(Q^\top) = \det(Q)$. Therefore:

$$\det(Q^\top)\det(Q) = \det(Q)^2$$

- Combining Results: Combining the above results, we have:

$$\det(Q)^2 = \det(Q^\top Q) = \det(I) = 1$$

- Solving for $\det(Q)$: Taking the square root of both sides: $|\det(Q)| = 1$. This means that $\det(Q)$ can be either +1 or -1.

Therefore, the determinant of an orthogonal matrix is either +1 or -1. This result reflects the fact that orthogonal transformations preserve volume (they don’t stretch or shrink space), but they can potentially change the orientation (hence the possibility of a negative determinant).

Orthogonal matrices with determinant +1 represent rotations, while those with determinant -1 represent reflections or combinations of rotations and reflections.
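For the $2 \times 2$ case, this can be illustrated with a rotation by an angle $\theta$ and a reflection across the line at angle $\theta/2$. The sketch below uses an arbitrarily chosen angle; results are rounded because floating-point arithmetic is inexact.

```python
import math

def det2(m):
    """Determinant of a 2x2 matrix [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * d - b * c

theta = 0.7  # arbitrary angle in radians (illustrative)
c, s = math.cos(theta), math.sin(theta)
rotation = [[c, -s], [s, c]]    # rotates the plane by theta
reflection = [[c, s], [s, -c]]  # reflects across the line at angle theta/2

print(round(det2(rotation), 12), round(det2(reflection), 12))  # 1.0 -1.0
```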

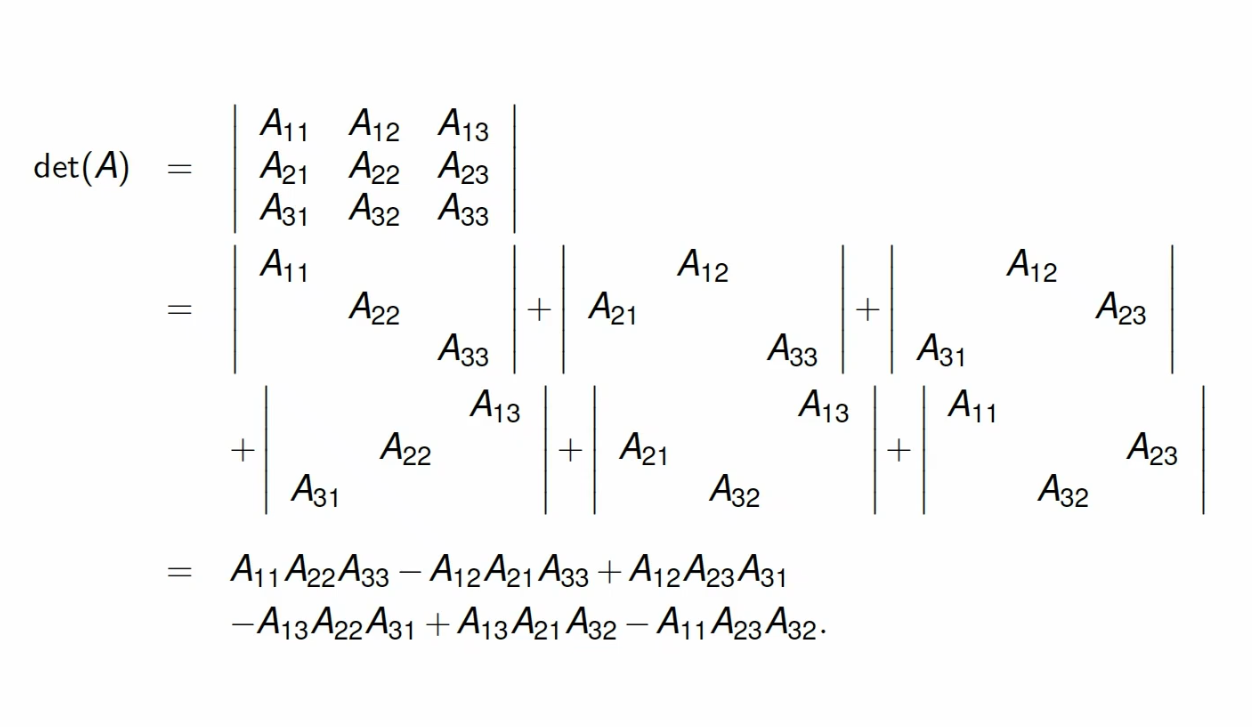

3 x 3 Matrices: Explicit Calculation using Permutations

Let’s apply the determinant definition using permutations to a $3 \times 3$ matrix. This will give us a concrete formula for calculating the determinant in this common case.

A $3 \times 3$ matrix has $3! = 6$ permutations. Let’s list them and their signs:

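| # | $\sigma(1)$ | $\sigma(2)$ | $\sigma(3)$ | Sign ($\operatorname{sgn}(\sigma)$) | Product of Matrix Elements |
|---|---|---|---|---|---|
| 1 | 1 | 2 | 3 | +1 | $A_{11}A_{22}A_{33}$ |
| 2 | 1 | 3 | 2 | -1 | $A_{11}A_{23}A_{32}$ |
| 3 | 2 | 1 | 3 | -1 | $A_{12}A_{21}A_{33}$ |
| 4 | 2 | 3 | 1 | +1 | $A_{12}A_{23}A_{31}$ |
| 5 | 3 | 1 | 2 | +1 | $A_{13}A_{21}A_{32}$ |
| 6 | 3 | 2 | 1 | -1 | $A_{13}A_{22}A_{31}$ |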

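Now, applying the determinant formula:

$$\det(A) = \sum_{\sigma \in S_3} \operatorname{sgn}(\sigma)\, A_{1,\sigma(1)} A_{2,\sigma(2)} A_{3,\sigma(3)}$$

We get:

$$\det(A) = A_{11}A_{22}A_{33} + A_{12}A_{23}A_{31} + A_{13}A_{21}A_{32} - A_{13}A_{22}A_{31} - A_{11}A_{23}A_{32} - A_{12}A_{21}A_{33}$$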

This formula can be remembered using the “Sarrus’ rule” or the “Rule of Sarrus,” a visual mnemonic device:

A11 A12 A13 A11 A12

A21 A22 A23 A21 A22

A31 A32 A33 A31 A32

// Diagonals going down to the right are positive:

+ A11*A22*A33 + A12*A23*A31 + A13*A21*A32

// Diagonals going down to the left are negative:

- A13*A22*A31 - A11*A23*A32 - A12*A21*A33

While Sarrus’ rule is handy for $3 \times 3$ matrices, it doesn’t generalize to higher dimensions. The general formula using permutations is the fundamental definition for all $n \times n$ matrices.

This 3x3 example provides a concrete illustration of how the permutation-based definition works in practice. It shows how to enumerate the permutations and calculate the corresponding products with the correct signs to compute the determinant.
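Written out as code, the six-term $3 \times 3$ formula is a one-liner. The following sketch uses 0-based indices, so `a[0][0]` corresponds to $A_{11}$; the function name and test matrix are assumptions made here.

```python
def det3_sarrus(a):
    """3x3 determinant: three 'down-right' products minus three 'down-left' ones."""
    return (a[0][0] * a[1][1] * a[2][2]
            + a[0][1] * a[1][2] * a[2][0]
            + a[0][2] * a[1][0] * a[2][1]
            - a[0][2] * a[1][1] * a[2][0]
            - a[0][0] * a[1][2] * a[2][1]
            - a[0][1] * a[1][0] * a[2][2])

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
print(det3_sarrus(A))  # 24 + 0 + 1 - 3 - 4 - 0 = 18
```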

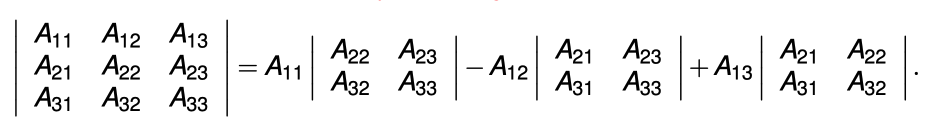

Co-factors

Definition (Co-factor):

Let $A \in \mathbb{R}^{n \times n}$. For each $1 \leq i, j \leq n$, let $M_{ij}$ denote the $(n-1) \times (n-1)$ matrix obtained by deleting the $i$-th row and $j$-th column of $A$. The cofactor of $A_{ij}$ is defined as:

$$C_{ij} = (-1)^{i+j} \det(M_{ij})$$

The cofactor is a signed determinant of a smaller matrix, and the sign is determined by the position of the element in the original matrix. The factor $(-1)^{i+j}$ creates a checkerboard pattern of positive and negative signs.

Lemma (Laplace Expansion): The determinant of a matrix can be computed by expanding along any row or column using cofactors.

Expansion along row $i$:

$$\det(A) = \sum_{j=1}^{n} A_{ij} C_{ij}$$

Expansion along column $j$:

$$\det(A) = \sum_{i=1}^{n} A_{ij} C_{ij}$$

This lemma provides a recursive way to calculate determinants by reducing the problem to calculating determinants of smaller matrices.
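The recursion can be sketched in a few lines of Python. This version always expands along the first row and assumes a square matrix with $n \geq 1$ stored as nested lists; `minor` and `det_laplace` are names chosen here. With 0-based indices the sign $(-1)^{i+j}$ has the same parity as in the 1-based formula.

```python
def minor(a, i, j):
    """Submatrix of a with row i and column j removed (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]

def det_laplace(a):
    """Determinant by cofactor expansion along the first row."""
    n = len(a)
    if n == 1:
        return a[0][0]
    return sum((-1) ** j * a[0][j] * det_laplace(minor(a, 0, j))
               for j in range(n))

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
print(det_laplace(A))  # 2*10 - 0 + 1*(-2) = 18
```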

Lemma: Adjugate Matrix and Inverse

Definition: Adjugate Matrix. The adjugate matrix of a matrix $A$ is the transpose of the cofactor matrix $C$: $\operatorname{adj}(A) = C^\top$, so that the $(i, j)$ entry of $\operatorname{adj}(A)$ is $C_{ji}$.

Lemma: Given a matrix $A \in \mathbb{R}^{n \times n}$ with $\det(A) \neq 0$, let $C$ be the matrix of cofactors of $A$. Then:

$$A^{-1} = \frac{1}{\det(A)} C^\top$$

In other words, we have:

$$A C^\top = \det(A)\, I$$

This formula provides an explicit way to calculate the inverse of a matrix using its cofactors. The proof utilizes the Laplace expansion and properties of the cofactor matrix which are beyond the scope of these notes.
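A minimal sketch of the adjugate formula, assuming a square matrix with integer entries so that exact fractions can be used. The helper names are introduced here; this is an illustration of the formula, not an efficient way to invert matrices.

```python
from fractions import Fraction

def minor(a, i, j):
    """Submatrix of a with row i and column j removed (0-based)."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]

def det(a):
    """Determinant by cofactor expansion along the first row (empty matrix -> 1)."""
    if not a:
        return 1
    return sum((-1) ** j * a[0][j] * det(minor(a, 0, j)) for j in range(len(a)))

def inverse(a):
    """Inverse via A^{-1} = C^T / det(A), where C is the cofactor matrix."""
    d = det(a)
    if d == 0:
        raise ValueError("matrix is singular")
    n = len(a)
    cof = [[(-1) ** (i + j) * det(minor(a, i, j)) for j in range(n)]
           for i in range(n)]
    # Entry (i, j) of the adjugate is the cofactor C[j][i].
    return [[Fraction(cof[j][i], d) for j in range(n)] for i in range(n)]

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
print(inverse(A)[0][0])  # 5/9
```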

Cramer’s Rule, Linearity, and Example

Cramer’s Rule: A Formula for Linear Systems

Cramer’s Rule provides a method for solving systems of linear equations using determinants.

Theorem (Cramer’s Rule):

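Let $A \in \mathbb{R}^{n \times n}$ be an invertible matrix (i.e., $\det(A) \neq 0$), and let $b \in \mathbb{R}^n$. Then the solution $x \in \mathbb{R}^n$ to the linear system $Ax = b$ is given by:

$$x_j = \frac{\det(B_j)}{\det(A)}, \qquad j = 1, \dots, n$$

where $B_j$ is the matrix obtained by replacing the $j$-th column of $A$ with the vector $b$.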

Example (n = 3):

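Consider the system $Ax = b$:

$$\begin{pmatrix} A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23} \\ A_{31} & A_{32} & A_{33} \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} b_1 \\ b_2 \\ b_3 \end{pmatrix}$$

To find $x_1$, we replace the first column of $A$ with $b$:

$$B_1 = \begin{pmatrix} b_1 & A_{12} & A_{13} \\ b_2 & A_{22} & A_{23} \\ b_3 & A_{32} & A_{33} \end{pmatrix}$$

Then, $x_1 = \det(B_1) / \det(A)$. Similarly, we can find $x_2$ and $x_3$ by replacing the second and third columns of $A$ with $b$, respectively.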

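Proof Idea: Cramer’s rule follows from the formula for the inverse of a matrix using the adjugate matrix and the fact that $x = A^{-1}b$. Consider the $j$-th entry of the solution vector: $x_j = (A^{-1}b)_j$. Using the expression for the inverse in terms of the adjugate, $A^{-1} = \frac{1}{\det(A)} C^\top$ with $C$ the cofactor matrix of $A$, we have:

$$x_j = \frac{1}{\det(A)} \sum_{i=1}^{n} C_{ij}\, b_i$$

The sum is exactly the Laplace expansion along the $j$-th column of $\det(B_j)$, where $B_j$ is $A$ with its $j$-th column replaced by $b$: that column of $B_j$ is $b$, and deleting it leaves the same submatrices as deleting the $j$-th column of $A$, so the cofactors agree.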
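Cramer’s rule translates directly into code. The sketch below assumes integer entries (so exact fractions can be used) and a small system; `det` and `cramer` are names chosen here, and the right-hand side is constructed so that the solution is $(1, 2, 3)$. For larger systems, Gaussian elimination is far more efficient.

```python
from fractions import Fraction

def det(a):
    """Determinant by cofactor expansion along the first row (empty matrix -> 1)."""
    if not a:
        return 1
    return sum((-1) ** j * a[0][j]
               * det([row[:j] + row[j + 1:] for row in a[1:]])
               for j in range(len(a)))

def cramer(a, b):
    """Solve a x = b via x_j = det(B_j) / det(a)."""
    d = det(a)
    if d == 0:
        raise ValueError("matrix is singular; Cramer's rule does not apply")
    xs = []
    for j in range(len(a)):
        # B_j: copy of a with column j replaced by b.
        b_j = [row[:j] + [b[i]] + row[j + 1:] for i, row in enumerate(a)]
        xs.append(Fraction(det(b_j), d))
    return xs

A = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
b = [5, 13, 15]  # equals A applied to (1, 2, 3)
print(cramer(A, b))
```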

Linearity of the Determinant

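Lemma: The determinant is a linear function of each row (or column). For any scalars $\alpha, \beta \in \mathbb{R}$ and vectors $u, v, a_2, \dots, a_n \in \mathbb{R}^n$ (written here for the first row; any other row works the same way):

$$\det \begin{pmatrix} \alpha u^\top + \beta v^\top \\ a_2^\top \\ \vdots \\ a_n^\top \end{pmatrix} = \alpha \det \begin{pmatrix} u^\top \\ a_2^\top \\ \vdots \\ a_n^\top \end{pmatrix} + \beta \det \begin{pmatrix} v^\top \\ a_2^\top \\ \vdots \\ a_n^\top \end{pmatrix}$$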

The same holds for any column. This property was stated earlier; it follows directly from the definition of the determinant as a sum over permutations since each term in the sum will contain exactly one element from each row and each column.
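A numerical spot check of linearity in the first row, using the permutation-sum definition. The vectors, scalars, and helper names below are illustrative assumptions.

```python
from itertools import permutations
from math import prod

def sign(perm):
    """Sign of a permutation (tuple of distinct integers) via inversion count."""
    n = len(perm)
    inv = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return 1 if inv % 2 == 0 else -1

def det(a):
    """Determinant via the sum over all permutations."""
    n = len(a)
    return sum(sign(p) * prod(a[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

u, v = [1, 2, 0], [0, 1, 3]    # two candidate first rows
r2, r3 = [2, 0, 1], [1, 1, 1]  # remaining rows, held fixed
alpha, beta = 2, -3

combo = [alpha * x + beta * y for x, y in zip(u, v)]
lhs = det([combo, r2, r3])
rhs = alpha * det([u, r2, r3]) + beta * det([v, r2, r3])
print(lhs, rhs)  # -21 -21
```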